Author: Jordi Pages < jordi.pages@pal-robotics.com >

Maintainer: Jordi Pages < jordi.pages@pal-robotics.com >

Support: tiago-support@pal-robotics.com

Source: https://github.com/pal-robotics/tiago_tutorials.git

| |

Planar object detection and pose estimation (C++)

Description: Planar textured object detection based on feature matching between live video feed an a reference image of the object. Then, the pose of the object is determined by homography estimation and provided the size of the object.Keywords: OpenCV

Tutorial Level: ADVANCED

Purpose

This tutorial presents a ROS node that subscribes to the live video feed of TIAGo and looks for keypoints in order to detect a known planar textured object. When found, the homography between the current view and the reference view is estimated. Then, using the known width and height of the object its 3D pose is also estimated. OpenCV is used in order to extract and match keypoints and to estimate the homography.

Pre-Requisites

First, make sure that the tutorials are properly installed along with the TIAGo simulation, as shown in the Tutorials Installation Section.

Download texture detector

In order to execute the demo first we need to download the source code of the person detector in the public simulation workspace in a console

cd ~/tiago_public_ws/src git clone https://github.com/pal-robotics/pal_texture_detector.git

Building the workspace

Now we need to build the workspace

cd ~/tiago_public_ws catkin build

Execution

Open trhee consoles and in each one source the workspace

cd ~/tiago_public_ws source ./devel/setup.bash

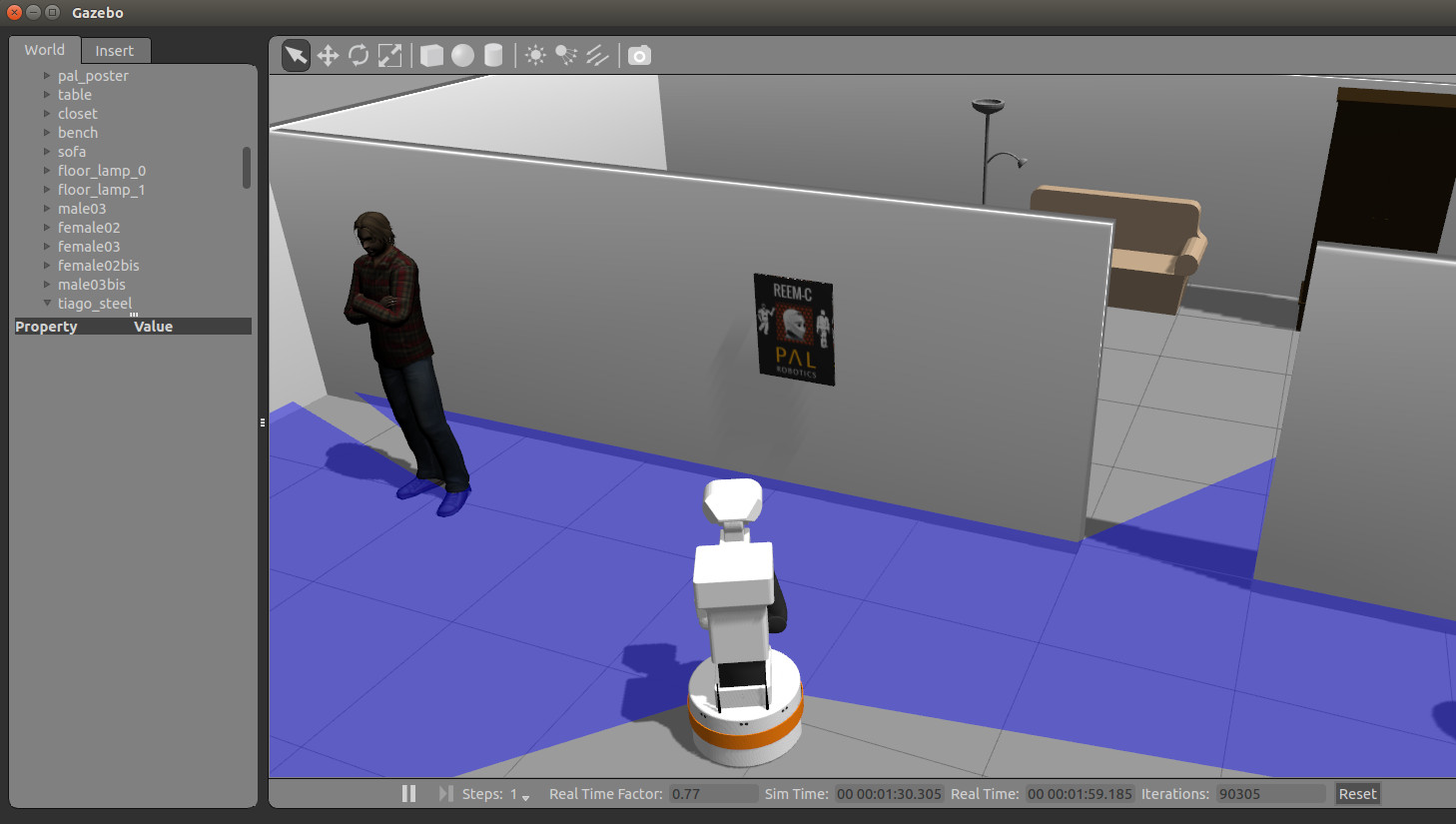

In the first console launch the simulation

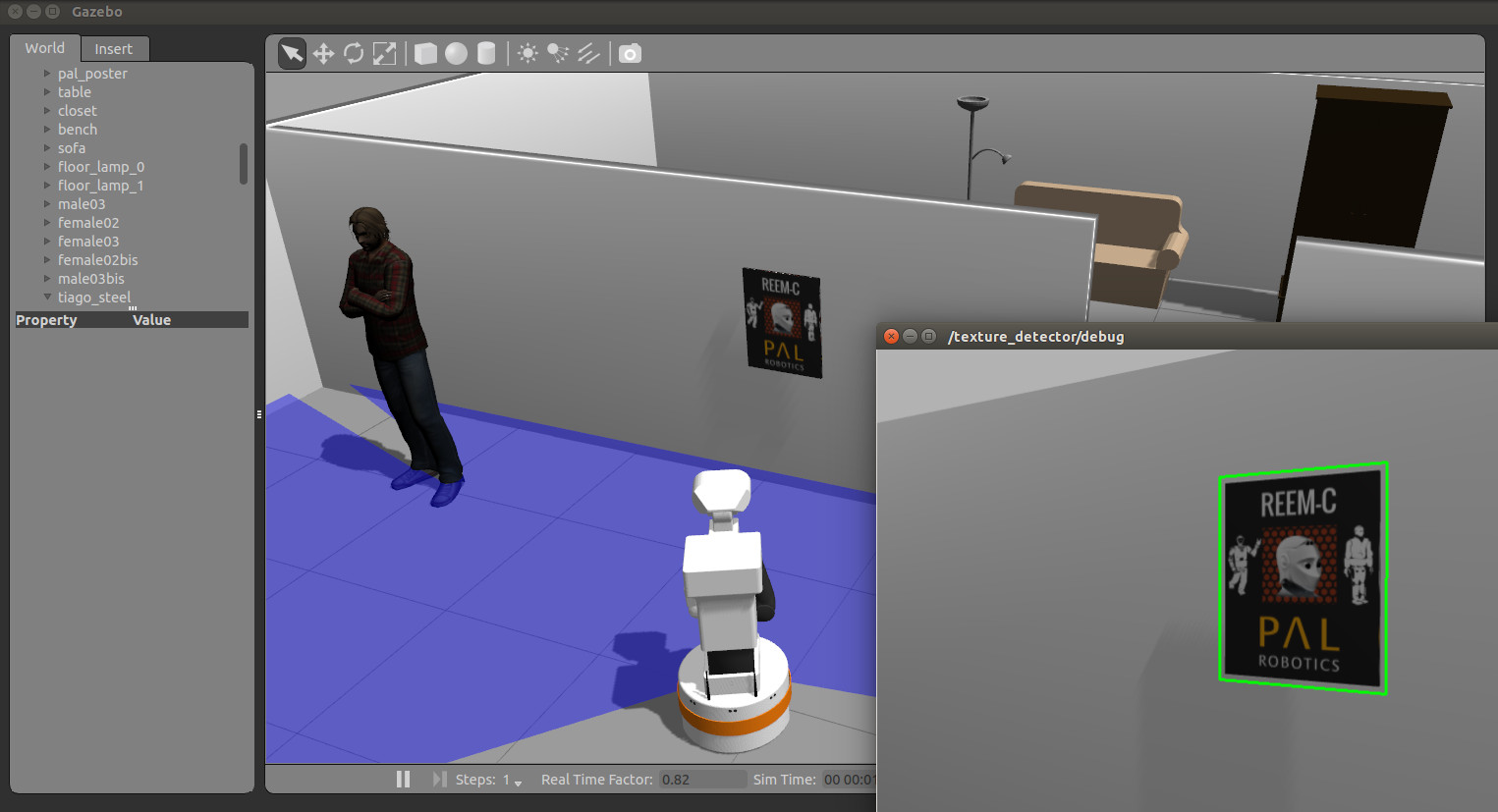

roslaunch tiago_gazebo tiago_gazebo.launch public_sim:=true end_effector:=pal-gripper world:=tutorial_office gzpose:="-x -0.13 -y 2.88 -z -0.003 -R 0 -P 0 -Y 2.05"

TIAGo will be spawn in front of a wall with a textured poster.

Then, in the second console run the following command

roslaunch pal_texture_detector texture_detector.launch rectified_image:=/xtion/rgb/image_rect_color camera_info:=/xtion/rgb/camera_info

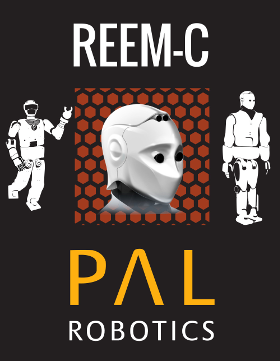

The texture detector will be inspecting the live video feed from TIAGo's RGBD camera looking for matches of the image in

`rospack find pal_texture_detector`/objects/pal_poster.png which is shown below

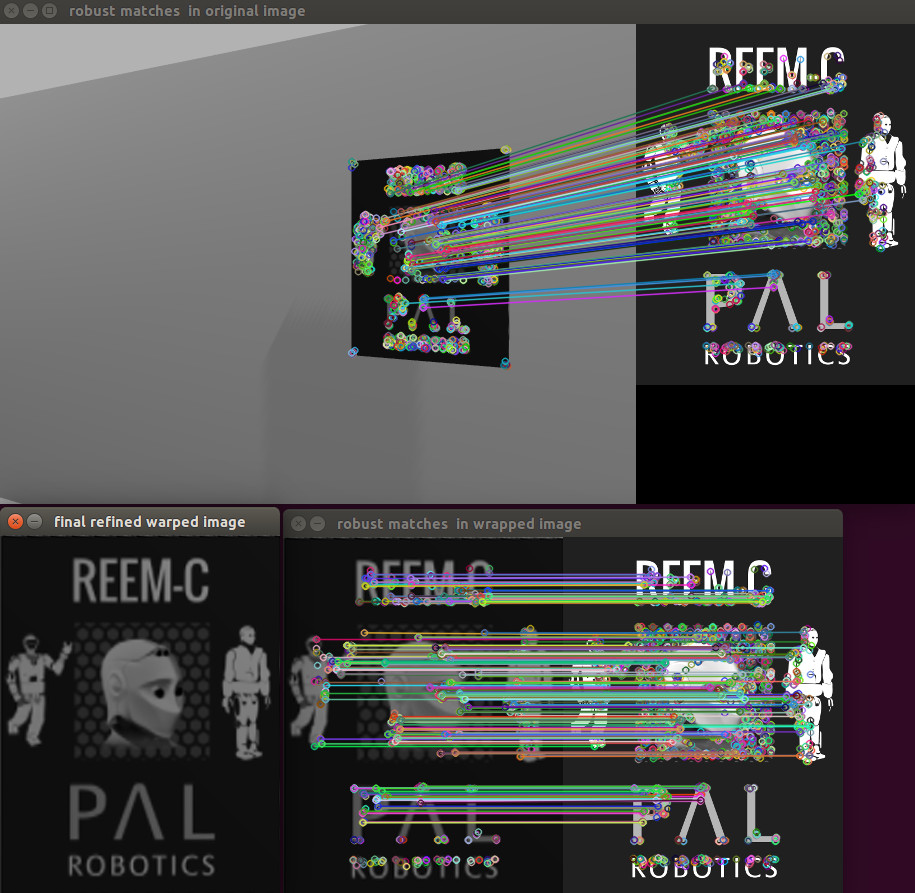

Three debug images will appear. One showing the kepoint matches found between the live video feed and the reference image; one with the current image wrapped according to the homography estimated in order to align it with the reference image; and the keypoint matches found between the wrapped and the reference image in order to validate the homography estimation.

In case that enough matches are found between both images the following topics will be published:

/texture_detector/debug /texture_detector/detection /texture_detector/pose

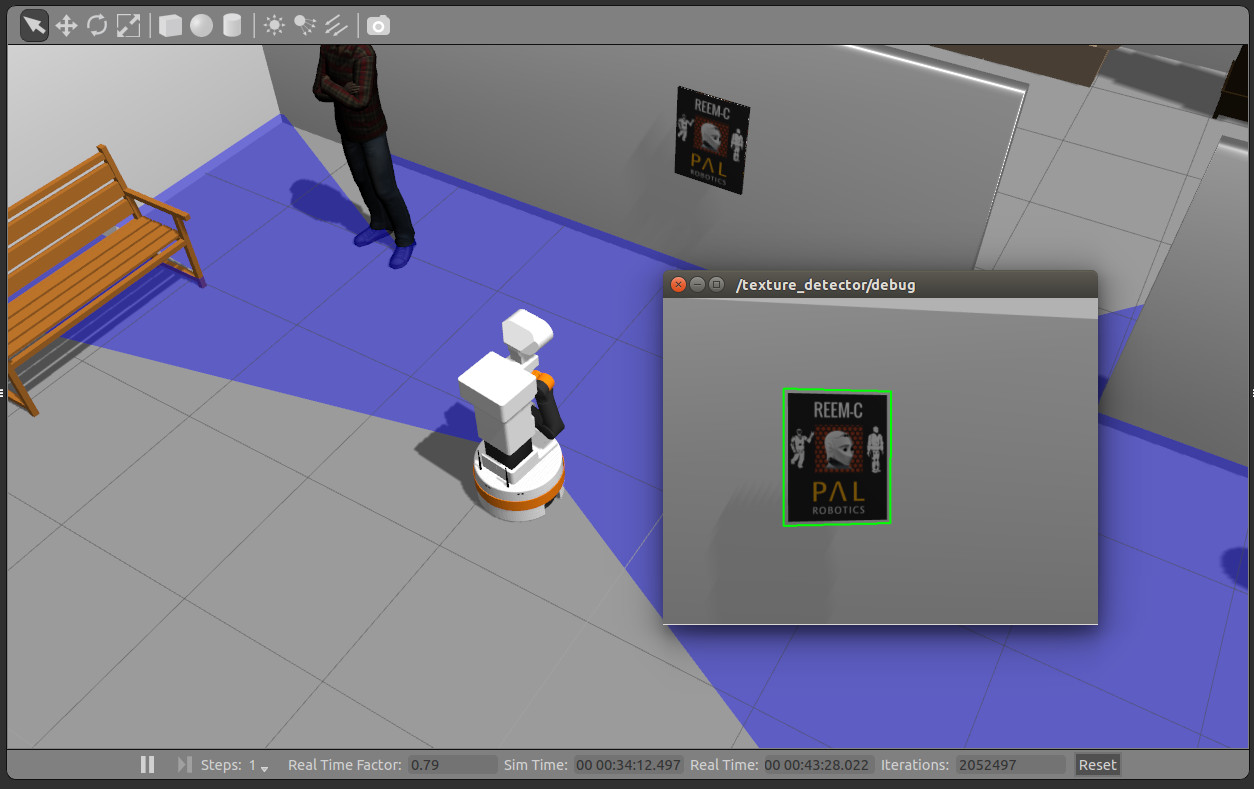

The /texture_detector/debug is an image with the detected object marked with a green rectangle. One way to inspect this image is:

rosrun image_view image_view image:=/texture_detector/debug

The /texture_detector/detection topic contains a copy of the image processed and the coordinates of the 4 pixels enclosing the detection.

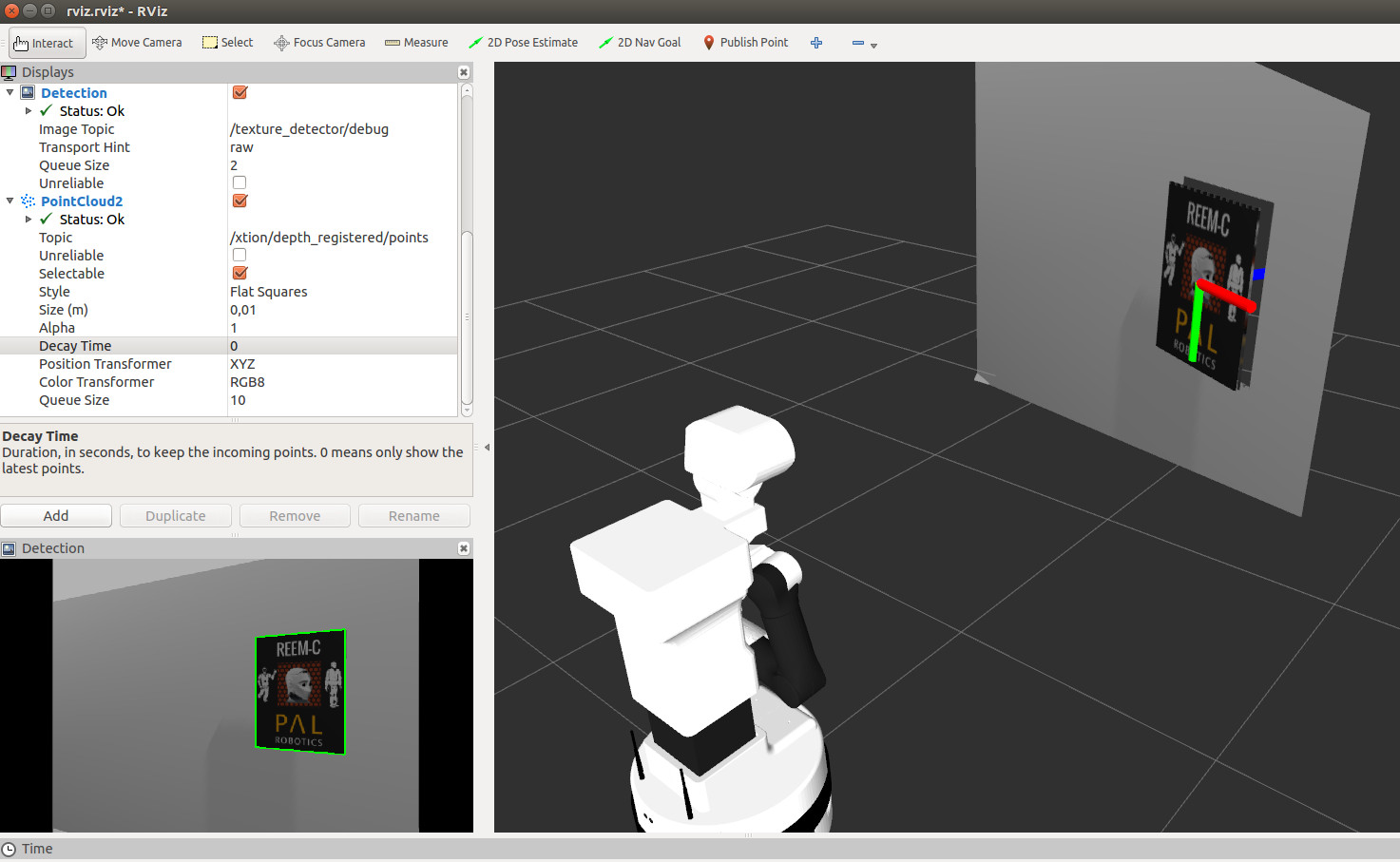

Finally, the /texture_detector/pose is a topic of type geometry_msgs/PoseStamped with the 3D pose of the object estimated by the homography. This pose can be visualized as a 3D frame in the rviz launched as follows

rosrun rviz rviz -d `rospack find pal_texture_detector`/config/rviz.rviz