| Note: We strongly recommend to read the ICRA12 paper or chapter 4.2 in the PhD Thesis. |

| |

Introductory tutorial for using ethzasl_sensor_fusion

Description: This tutorial is an introduction to ethzasl_sensor_fusion. Using an offline data-set you learn how the framework works.Tutorial Level: INTERMEDIATE

Next Tutorial: custom_sensor_design

Contents

Overview and Preparation

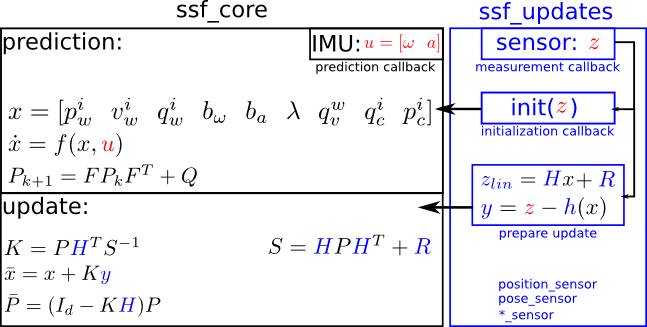

The ethzasl_sensor_fusion stack is a single-sensor fusion (ssf) framework based on an Extended Kalman Filter (EKF). Single sensor refers to one update sensor and the IMU as fix prediction sensor. The framework is essentially divided into the two EKF steps prediction and update. The prediction is made based on the system model (i.e. differential equations) and IMU readings. We do not take the IMU readings as update measurements since this would require matrix inversions and multiplications at IMU rate (which is in some cases up to 1kHz!).

In the above image, the red parts are sensor readings (from the IMU for prediction or from another sensor for the update). The blue parts are the parts which change if the update sensor type changes. The black parts are the constant (core) parts which stay analytically the same as long as we use an IMU for state propagation and the same state vector.

In the code, you will find:

p for pwi: the imu position in the world frame

v for vwi: the imu velocity in the world frame

q for qwi: the imu attitude in the world frame

b_w for bw: the gyro biases

b_a for ba: the accelerometer biases

L for λ: the visual scale factor with pmetric*λ = psensor

q_wv for q,,vw: the attitude between the update-sensor reference frame and the world reference frame

q_ci for qic: the attitude between IMU and the update-sensor

p_ci for pic: the distance between IMU and the update-sensor

As explained in this ICRA11 paper we use the error state representation in the EKF to facilitate computations with quaternions. An error quaternion is represented by δq=[1 θx θy θz]T where the 3-vector θ is used as state in the error state representation. This leads to a 25-states state-vetor.

From the software point of view, we wrap all constant (core) parts in the library ssf_core. All parts that depend on the update sensor can then be added by the user via a module in ssf_updates. More precisely, for each update sensor we have to write

- a sensor-value acquisition-routine (i.e. measurement callback)

- an initialization routine (initializing the state vector according to the information currently available from the update sensor)

- the linearized measurement Matrix (H), the computation of the residuals (y) and the measurement-covariance matrix (R).

For this tutorial, you do not need any particular preparation other than downloading this dataset (3.7MB). It contains IMU data (linear accelerations and angular velocities) for the EKF prediction step. For the update, it contains data from a 3DoF global position-sensor (in this case, it is the position reading from a Vicon system).

Exploring the Dataset

In the dataset, we use for this tutorial, you find the following messages:

/auk/fcu/imu (type: sensor_msgs::Imu):

IMU readings of a micro helicopter (or a robot in general). It contains the 3D linear accelerations and 3D angular velocities at 100Hz./vicon/auk/auk (type: geometry_msgs::TransformStamped):

Vicon 6DoF pose readings. Even though we have all 6DoF in the message, in this tutorial, we will only use the 3DoF position readings. The data is recorded at 100Hz. The update-rate should not be higher than the prediction rate.

You may use rxbag to visualize the data and have a look in particular at the recorded pose in order to get an intuition of the movement of the robot in this dataset.

As you see, the 3D trajectory plot shows significant motion of the system. This motion is crucial in order to make the system states converge. This is proved in this paper. As a rule of thumb you should always excite at least two axes in acceleration and 2 axis in angular rates. A more detailed discussion on the excitation levels needed for state convergence can be found in this PhD Thesis, chapter 4.1.

NOTE: The system is in an unobservable mode if it is stationary. It needs a certain motion to make the states converge.

The General Setup

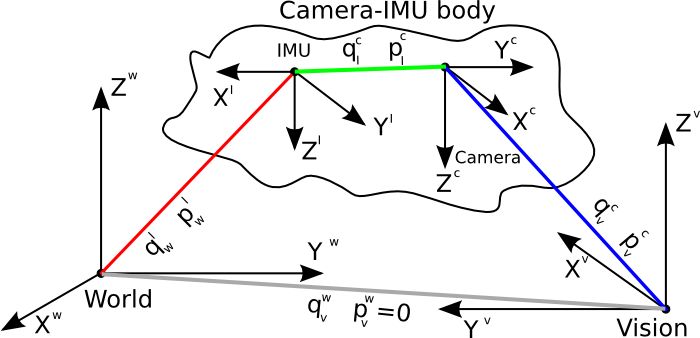

The core part of the framework (ssf_core) is designed for the most general update sensor: an arbitrarily scaled 6DoF pose measurement with respect to its own reference frame which drifts in position and attitude with respect to a (gravity aligned) fix navigation frame. For example, such a sensor may be represented by using the 6DoF pose from a monocular visual odometry framework such as ethzasl_ptam.

The above image depicts the frame setup of our framework: Each frame is connected with a certain translation p and rotation q (from quaternion) to another frame. The world frame is the frame in which our robot will navigate. The IMU and camera frame are centered in the respective sensors on the robot. The vision frame is the frame in which the visual part (e.g. ethzasl_ptam) triangulates the 3D features and with respect to which the visual part calculates the 6DoF camera pose. To represent the drifts occurring in every visual odometry framework (i.e. drift in visual scale, position and attitude) we introduce the so-called drift states in position pvw and attitude qvw.

The position drift is not observable since we do not have a global position measurement. Hence we do not use this state in this framework. The same is true for the attitude drift in yaw. This means that our "fix" world frame will drift in position and yaw together with the vision frame. However, roll and pitch drifts are observable and ensure that the world frame stays at least gravity aligned - a crucial property for MAV platforms. See a more detailed discussion on observability (also for multi-sensor systems) in this PhD Thesis.

Since the position drift is not observable we simply removed it in this framework. However, we are working with quaternions and the unobservable yaw cannot simply be set to zero by removing a state variable. Hence we introduce an artificial measurement keeping the yaw of the world frame aligned with the vision frame. Depending on the type of update sensor you use, additional such artificial measurements might be needed because other states might get unobservable. For example, in this tutorial on the example of a 3DoF position update sensor, the attitude between IMU and the "camera" (i.e. the position sensor) is irrelevant and hence unobservable. Furthermore, assuming the Vicon frame is gravity aligned and not drifting, the attitude drift state between the "vision" frame (i.e. Vicon frame) and the world frame is superfluous as well. The same is true for the "visual" scale state, however, we keep this state in the state vector for a sanity check - it should converge to 1.

A Position Sensor Example

Since the propagation step in this framework is always the same by using the IMU readings, we characterize the type of the single-sensor-fusion framework by the sensor used for the filter update. In this example, we use a 3DoF global position sensor for the EKF update step. This can be a GPS sensor, a Leica Totalstation or - in our case - the position readings from a Vicon system.

For every different update sensor, we need to incorporate the above mentioned steps (measurement callback, initialization and computation of H, y, and R) in a ssf_updates module.

The ssf_updates Module Files

Each update-sensor module consists of

the file for the initialization routine *_measurements.h

the header and source file for the measurement callback and H, y, R computation *_sensor.h and *_sensor.cpp

In the stack you already find position_measurements.h, position_sensor.cpp and position_sensor.h which represents a module prepared for using a 3DoF position sensor as update sensor. In this example, we use a position measurement from a Vicon system: copy the above 3 files to viconpos_measurements.h, viconpos_sensor.cpp and viconpos_sensor.h respectively. Make sure you adapt the #define entries in the *.h files and the #include entries accordingly.

Writing the Measurement Callback Function

open "viconpos_sensor.cpp" and go to PositionSensorHandler::measurementCallback. Our dataset publishes the Vicon-pose message in the geometry_msgs::TransformStamped format, rather than in ssf_updates::PositionWithCovarianceStamped format presently used in PositionSensorHandler::measurementCallback. Change it to the correct message format and add #include <geometry_msgs/TransformStamped.h> to viconpos_sensor.h.

Now, we read the position from the Vicon into the z_p_ (measurement in position) variable. Scroll down to the entry // get measurements and adjust the reading from the message received by the callback function.

Your function definition should now look like:

void PositionSensorHandler::measurementCallback(const geometry_msgs::TransformStampedConstPtr & msg)

and your position reading

z_p_ = Eigen::Matrix<double,3,1>(msg->transform.translation.x, msg->transform.translation.y, msg->transform.translation.z);

additionally, we artificialy slow down the vicon measurements to 20Hz. We add the lines

if (msg->header.seq%5!=0) return;

at the beginning of the measurement callback routine.

Computing the Measurement Noise Matrix R

We assume that the Vicon has a fix covariance in its position measurement. Furthermore, the geometry_msgs::TransformStamped message contains no place for covariance information. Therefor we will set the position measurement noise fix later in the dynamic reconfigure gui. Thus, you may delete all entries concerning the code block // take covariance from sensor in the PositionSensorHandler::measurementCallback routine.

A Note on the States in the System

As you remember, the system is originally designed to be used with a 6DoF arbitrarily scaled and drifting camera pose measurement. With this setup we require to consider states like

- 6DoF transformation between camera and IMU (translation and rotation)

- Visual scale (monocular system measure the position in arbitrary scale)

- Visual attitude drift with respect to a gravity aligned navigation frame

Depending on the sensor you use, not all these states are required - but they keep being in the core prediction model of the ssf_core library. Hence, for states not used, we introduce artificial measurements to keep them bounded. Since they are not observable in the given sensor setup, this does not influence the estimate of the other states.

In the current example we can omit:

- rotation between the IMU and the sensor since we measure only absolute position

- visual attitude drift with respect to a gravity aligned navigation frame. We are sure that the Vicon measurements do not drift. Furthermore, in this tutorial, the Vicon frame is gravity aligned.

In this example we keep the "visual" scale in the state vector as sanity check of the filter performance - of course this state should converge to 1 when using Vicon position measurements.

Because of the above reasons, the measurement noise matrix R has 6 fix entries of 1e-6 (3 for the rotation between sensor and IMU, 3 for the visual attitude drift) to reflect low noise on the artificial measurements.

Computing the Measurement Matrix H and the Residuals y

We leave this part to the next tutorial.

For this tutorial, no changes in the computation of the measurement matrix H and the residuals y are necessary since the original code is already made for a 3DoF position sensor. In the code, note the artificial measurements for the unobservable states mentioned above.

Writing the Initialization Routine

The file viconpos_measurements.h copied from position_measurements.h is already designed to use a 3DoF position sensor for the initialization. You may have a look at the file to familiarize yourself with the init() procedure. Independent states are set to specific values, whereas states such as the position and attitude of the IMU are initialized based on the most recent readings of the update sensor.

Compiling the New SSF Viconpos Sensor Framework

In order to run the framework, you need a main file that creates a class of the above new designed update-sensor module. Add in main.cpp of the ssf_updates package the lines

#ifdef VICONPOS_MEAS #include "viconpos_measurements.h" #endif

and in the main() routine:

#ifdef VICONPOS_MEAS

PositionMeasurements ViconPosMeas;

ROS_INFO_STREAM("Filter type: viconpos_sensor");

#endifIn CMakeLists.txt we have to define the new module as well. Add:

rosbuild_add_executable(viconpos_sensor src/main.cpp src/viconpos_sensor.cpp)

set_property(TARGET viconpos_sensor PROPERTY COMPILE_DEFINITIONS VICONPOS_MEAS)

rosbuild_add_compile_flags(viconpos_sensor "-O3")

target_link_libraries(viconpos_sensor ${catkin_LIBRARIES})Then call

catkin_make

Running the New SSF Viconpos Sensor Framework

Once the framework compiles with the new module viconpos_sensor, we test it with the dataset provided above.

Setting the Fix Parameters

Copy the file position_sensor_fix.yaml to viconpos_sensor_fix.yaml. This file contains some initialization parameters. Most of them can be changed during runtime in the dynamic reconfigure gui.

Do not change the noise parameters and the camera-IMU initialization parameters, they are OK for the dataset we provide for this tutorial. However, make sure the parameter data_playback is set to True because we use recorded data. Also, because the Vicon message does not contain any covariance entries nor has it any delay, make sure use_fixed_covariance is set to True and delay is set to zero.

Creating the Launch-File: Message Re-Mapping

In the launch directory of ssf_updates create the file viconpos_sensor.launch and put the following:

<launch>

<node name="viconpos_sensor" pkg="ssf_updates" type="viconpos_sensor" clear_params="true" output="screen">

<remap from="ssf_core/imu_state_input" to="/auk/fcu/imu" />

<remap from="ssf_core/position_measurement" to="vicon/auk/auk" />

<rosparam file="$(find ssf_updates)/viconpos_sensor_fix.yaml"/>

</node>

</launch>This maps the prediction sensor reading (i.e. IMU callback) ssf_core/imu_state_input to the IMU topic of the dataset. The measurement callback ssf_core/position_measurement is mapped to the Vicon readings. Also, the parameter file created above is loaded.

Launching the Framework

roslaunch ssf_updates viconpos_sensor.launch

should launch the viconpos_sensor framework. Then run the data playback in pause mode and from t=25s on - before the MAV was standing still and thus was in an unobservable mode.

rosbag play dataset.bag --pause -s 25

As you remember, we initialize the state based on the readings of the update sensor. Thus, let the playback run for about a second such that we get some update-sensor readings. Then pause it again to have time for the next steps.

Now we are ready to initialize the filter framework. Open a dynamic reconfigure gui and select /viconpos_sensor. You should see most of the parameter already seen in the viconpos_sensor_fix.yaml. Leave everything as it is and hit init_filter. The console running the filter should report

[ WARN] [[some time stamp]]: No measurements received yet to initialize attitude - using [1 0 0 0] [ INFO] [[some time stamp]]: filter initialized to: position: [some value, some value, some value] scale:1 attitude (w,x,y,z): [1, -0, -0, -0] p_ci: [0, 0, 0] q_ci: (w,x,y,z): [1, 0, 0, 0]

The warning is OK since we do not use any attitude update-sensor. This warning could be removed in the initialization routine in viconpos_measurements.h.

The framework is now ready. Before you hit play for the playback, read further to learn how to visualize the data. A rxgraph should look like the following

![]()

Visualizing the Data

We provide a couple of scripts to visualize the different states of the filter. For a quick sanity check you may call

rosrun ssf_core plot_relevant

This opens two plots. One shows the IMU acceleration biases (in any dataset values above 1 are suspicious) and the scale factor (this should be 1 in this tutorial using Vicon measurements). The second plot shows the 3D position and 3D velocity.

Now you can start the data replay and observe the behavior of the filter. You may notice that the visual scale factor is very sensitive to correct initialization and prone to get stuck in local minima.

Note that the plot scripts use the /ssf_core/state_out message. This message appears with an update measurement and contains the full state vector. You may also plot other messages:

* /ssf_core/pose: is a PoseWithCovarianceStamped published at prediction (i.e. IMU) rate and containing the 6DoF IMU pose w.r.t. the world frame. You may use this message for vehicle control.

* /ssf_core/ext_state: this is a legacy message to be used with the Luenberger observer with an AscTec platform. We discuss this in the asctec_hl_interface tutorial. It contains the 6DoF IMU pose and the 3DoF velocity without any covariance entries and is published at IMU rate

* /ssf_core/correction: contains the correction vector to be applied to the current state before the update. This is to be used with an AscTec platform discussed in sFly tutorial. It is published at update-sensor rate.