
Deploying MDM: Considerations for Specialized Scenarios

Description: This page discusses some of the non-standard use-cases of the MDM Library.

Tutorial Level: INTERMEDIATE

The previous sections covered the internal organization of MDM and provided an overview of its implementation in generic scenarios. This page discusses how MDM can be applied to scenarios whose practical requirements lie outside the more common deployment schemes that have been presented so far.

POMDPs with External Belief States

In some scenarios, the probability distribution over the state space of the system can be maintained indirectly, and continuously, by processes that run independently of the agent's decision-making loop. This may be the case, for example, if a robot is running a passive self-localization algorithm that outputs a measure of uncertainty over the robot's estimated pose, and the user wants to use that estimate, at run-time, as the basis for the belief state of an associated POMDP.

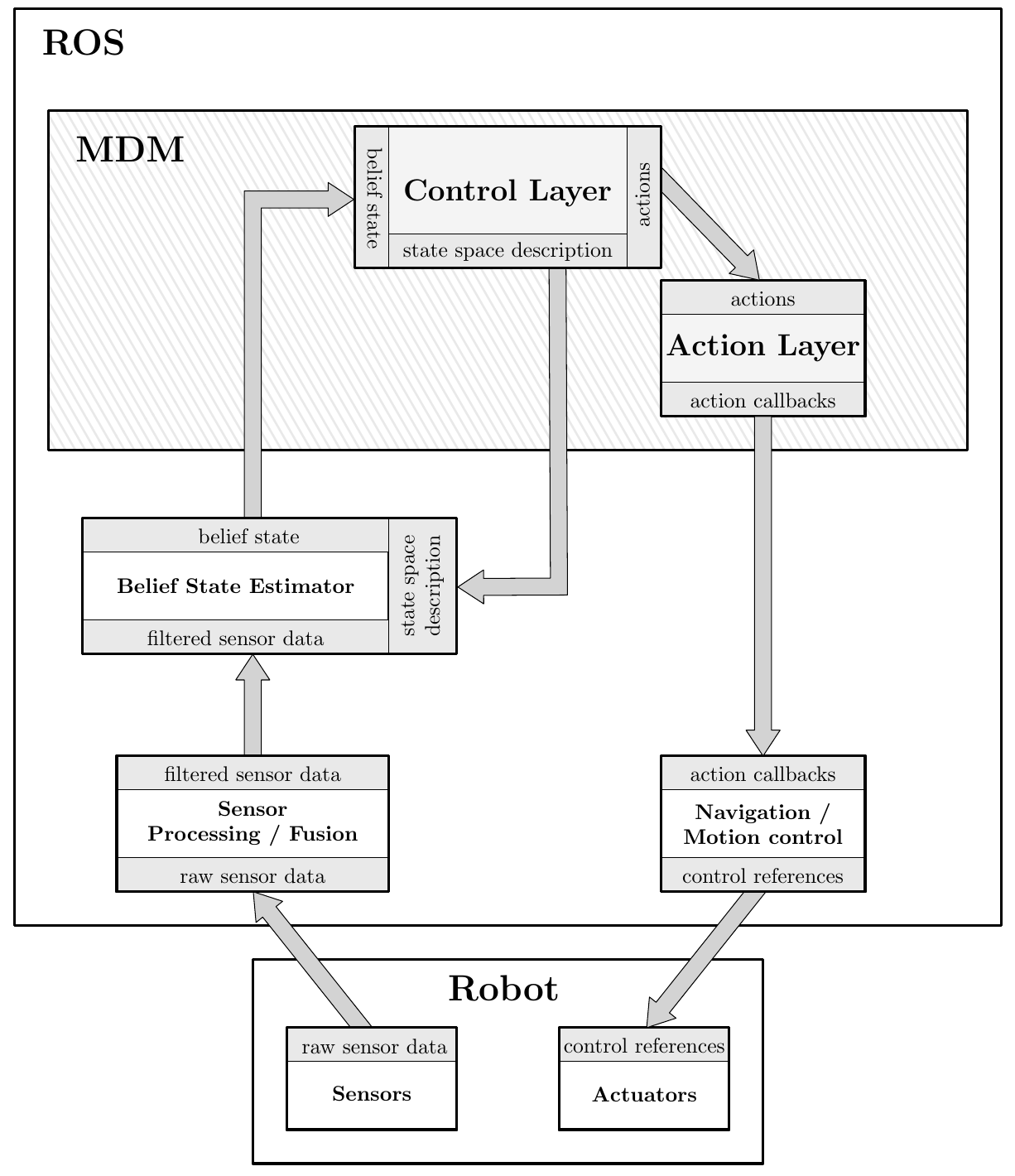

In MDM, this execution scheme can be implemented by omitting the Observation Layer of a POMDP agent, and instead passing the estimated belief state directly to its Control Layer. For this purpose, POMDP Control Layers subscribe, by default, to the ~/external_belief_estimate topic. Upon receiving a belief estimate, the Control Layer can output its associated action, according to the provided policy file, either asynchronously or at a fixed temporal rate. A representation of this deployment scheme is shown in Figure 1.
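As an illustration of what a Control Layer does with an external belief, the sketch below assumes that the policy is stored as a set of alpha vectors, a common POMDP value-function representation. This is an assumption: MDM's own policy file format and message types are not reproduced here, and the function names are hypothetical. Given a belief b, the selected action is the one attached to the vector α that maximizes the dot product b·α.

```python
"""Hypothetical sketch: selecting an action from an externally supplied belief.

Assumes the policy is a list of (action, alpha vector) pairs over discrete
states. This is NOT necessarily the format that MDM reads from policy files.
"""
from typing import List, Sequence, Tuple

# Each entry pairs an action index with an alpha vector over the states.
AlphaVector = Tuple[int, List[float]]


def validate_belief(belief: Sequence[float], num_states: int,
                    tol: float = 1e-6) -> None:
    """Reject beliefs that are not distributions over the POMDP's states."""
    if len(belief) != num_states:
        raise ValueError(f"belief has {len(belief)} entries, expected {num_states}")
    if any(p < 0.0 for p in belief):
        raise ValueError("belief contains negative probabilities")
    if abs(sum(belief) - 1.0) > tol:
        raise ValueError("belief does not sum to 1")


def select_action(belief: Sequence[float], alpha_vectors: List[AlphaVector]) -> int:
    """Return the action of the alpha vector that maximizes b . alpha."""
    num_states = len(alpha_vectors[0][1])
    validate_belief(belief, num_states)
    best_action, best_value = alpha_vectors[0][0], float("-inf")
    for action, alpha in alpha_vectors:
        value = sum(b * a for b, a in zip(belief, alpha))
        if value > best_value:
            best_action, best_value = action, value
    return best_action


if __name__ == "__main__":
    # Two states, two actions: action 0 pays off in state 0, action 1 in state 1.
    policy = [(0, [10.0, -5.0]), (1, [-5.0, 10.0])]
    print(select_action([0.8, 0.2], policy))  # prints 0
    print(select_action([0.3, 0.7], policy))  # prints 1
```

In a ROS node, a function like `select_action` would be called either from the callback of the `~/external_belief_estimate` subscriber (asynchronous operation) or from a periodic timer that uses the most recently received belief (fixed-rate operation). The length check in `validate_belief` only guards against gross mismatches; it cannot detect that the external estimator assigns a different meaning to each state.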

Figure 1: A deployment scheme for a POMDP-based agent where belief updates are carried out outside of MDM. The node that is responsible for the estimation of the belief state must have the same state space description as the Control Layer. The MDM ensemble is driven at the same rate as the belief state estimator, so it is implicitly synchronous with the sensor data.

There are, however, notable caveats to this approach:

- The description of the state space used by the Control Layer must be known by the belief state estimator. In particular, the notion of state assumed by the module responsible for belief estimation must be the same as the one modeled in the POMDP. If this is not the case, the probability distributions originating from the external estimator must first be projected onto the state space of the POMDP, which is not trivial. In this scheme, the system abstraction must therefore be carried out within the belief state estimator;

- Algorithms which produce the belief state estimate directly from sensor data (e.g. self-localization) typically operate synchronously, at the same rate as that source data. This means that asynchronous, event-driven POMDP Control Layers are not well-defined in this case;

- Planning is still assumed to be carried out prior to execution, and, during planning, the stochastic models of the POMDP are assumed to be an accurate representation of the system dynamics. If the external belief updates do not match the sequences of belief states predicted by the transition and observation models of the POMDP, the agent can behave unexpectedly at run-time, since its policy was obtained using ill-defined models. If, on the other hand, the external belief updates are consistent with those predicted by the POMDP model, then a better option (in terms of the work required to deploy the POMDP) is to simply carry out those belief updates internally in the Control Layer, as in the deployment scheme originally described in MDM Concepts.
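To make the last caveat concrete, the following sketch performs the standard discrete POMDP belief update, b'(s') ∝ O(o | s', a) Σ_s T(s' | s, a) b(s), and compares its result with an externally supplied estimate. The array layout of `T` and `O`, the use of the total variation distance, and the 0.05 tolerance are illustrative assumptions rather than MDM interfaces. If such a check consistently passes, the external estimator adds little over running the update internally; if it frequently fails, the planned policy may not be valid for the beliefs encountered at run-time.

```python
"""Hypothetical sketch: checking an external belief against the POMDP model.

Assumed layout: T[a][s][s2] = P(s2 | s, a) and O[a][s2][o] = P(o | s2, a),
i.e. the same discrete models that were used during planning.
"""
from typing import List, Sequence

Matrix = List[List[float]]


def belief_update(belief: Sequence[float], action: int, obs: int,
                  T: List[Matrix], O: List[Matrix]) -> List[float]:
    """Bayes filter: b'(s2) is proportional to O(o|s2,a) * sum_s T(s2|s,a) b(s)."""
    n = len(belief)
    unnormalized = []
    for s2 in range(n):
        predicted = sum(T[action][s][s2] * belief[s] for s in range(n))
        unnormalized.append(O[action][s2][obs] * predicted)
    norm = sum(unnormalized)
    if norm == 0.0:
        raise ValueError("observation has zero probability under the model")
    return [p / norm for p in unnormalized]


def total_variation(p: Sequence[float], q: Sequence[float]) -> float:
    """Half the L1 distance between two distributions (0 = identical, 1 = disjoint)."""
    return 0.5 * sum(abs(a - b) for a, b in zip(p, q))


def external_belief_is_consistent(prev_belief: Sequence[float], action: int,
                                  obs: int, external_belief: Sequence[float],
                                  T: List[Matrix], O: List[Matrix],
                                  tol: float = 0.05) -> bool:
    """True if the external estimate stays close to the model-predicted belief."""
    predicted = belief_update(prev_belief, action, obs, T, O)
    return total_variation(predicted, external_belief) <= tol
```

For example, with a single "stay" action (`T = [[[1.0, 0.0], [0.0, 1.0]]]`) and `O = [[[0.9, 0.1], [0.2, 0.8]]]`, updating the uniform belief after observation 0 gives approximately `[0.818, 0.182]`, so an external estimate of `[0.8, 0.2]` is accepted while `[0.4, 0.6]` is flagged.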

Multiagent Decision Making with Managed Communication

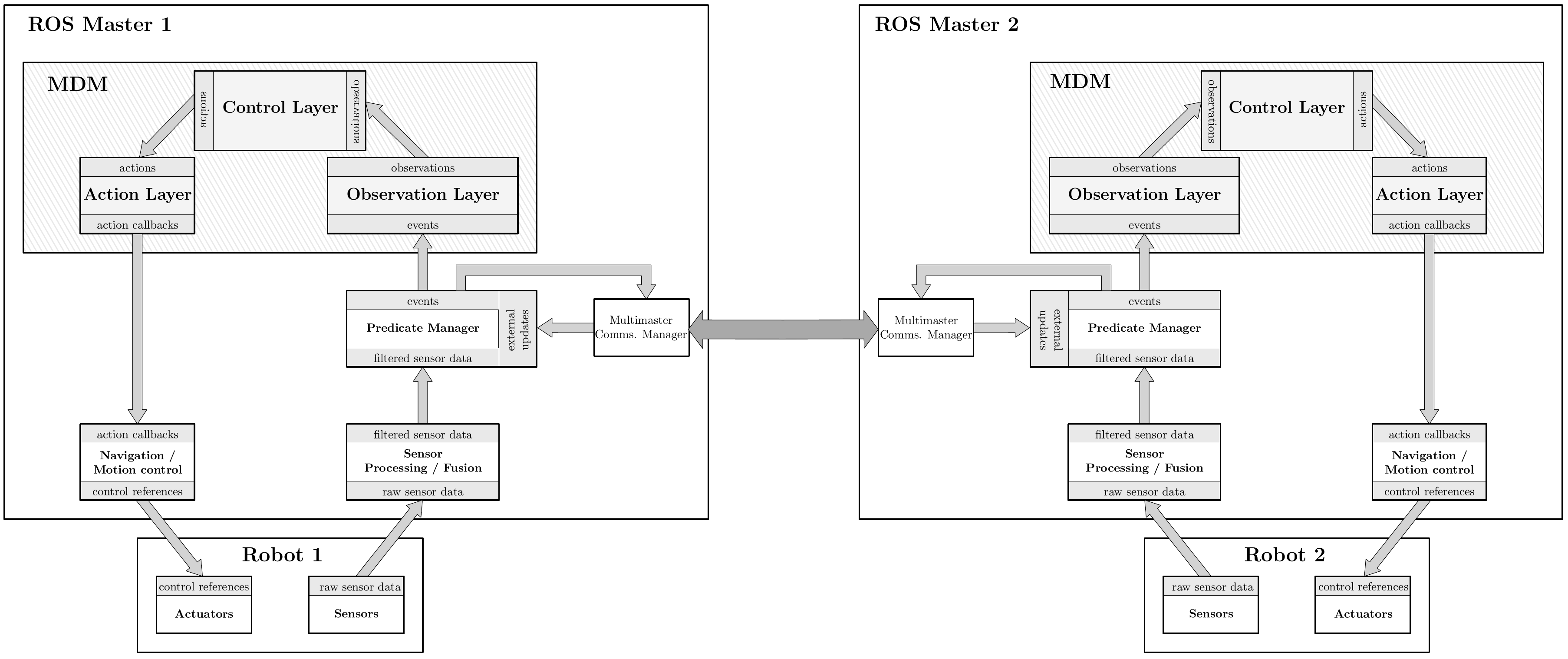

For multiagent systems, the standard operation of ROS assumes that a single ROS Master mediates the connections between all of the nodes that are distributed across a network. After two nodes are connected, communication between them is peer-to-peer and managed transparently by ROS, without giving the user the option of using custom communication protocols (for example, to limit the amount of network traffic). Therefore, in its default deployment scheme, the ROS Master is a single point of failure for the distributed system, and more advanced communication protocols that attempt to remedy this issue cannot easily be used.

A more robust multiagent deployment scheme combines multiple ROS Masters, with managed communication between them (see Figure 2). Typically, each mobile robot in a multi-robot team will operate its own ROS Master. Topics which are meant to be shared between Masters through managed communication must be explicitly selected and configured by the user.
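How shared topics are selected depends on the multimaster package in use, so the sketch below only illustrates the idea with a hypothetical allow list and block list of topic patterns; the topic names are invented for the example and do not correspond to any particular package's configuration.

```python
"""Hypothetical sketch: deciding which local topics are shared with other Masters.

The pattern lists stand in for the configuration of whichever multimaster
package is used; all topic names here are made up for illustration.
"""
from fnmatch import fnmatchcase
from typing import Iterable, List, Sequence

# User-selected topics to share; everything else stays local to this Master.
SHARED_TOPIC_PATTERNS = [
    "/predicate_manager/*",  # hypothetical Predicate Manager topics
    "/robot_*/status",
]

# Topics that must never leave the robot, even if they match a pattern above.
BLOCKED_TOPIC_PATTERNS = ["/predicate_manager/debug*"]


def topics_to_share(local_topics: Iterable[str],
                    shared: Sequence[str] = SHARED_TOPIC_PATTERNS,
                    blocked: Sequence[str] = BLOCKED_TOPIC_PATTERNS) -> List[str]:
    """Return the local topics that match an allow pattern and no block pattern."""
    selected = []
    for topic in local_topics:
        if any(fnmatchcase(topic, p) for p in blocked):
            continue
        if any(fnmatchcase(topic, p) for p in shared):
            selected.append(topic)
    return selected
```

Note that in `fnmatch` a `*` also matches `/`, so a pattern such as `/predicate_manager/*` covers nested topic names as well. Keeping the shared set small and explicit is what allows the managed communication layer to limit network traffic.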

There are multiple ROS packages that provide multimaster support. Any of them can potentially be used together with MDM. For instance:

For centralized MDM multiagent controllers, which completely abstract inter-agent communication, using a multimaster deployment scheme simply means that a complete copy of the respective MDM ensemble must be running locally on each Master. Communication should be managed before the System Abstraction Layer, ideally by sharing Predicate Manager topics, so that each agent can maintain a coherent view of the system state, or of the team's observations. Note that, since each agent has local access to the joint policy, it can execute its own actions when given the joint state or observation.

For MDM controllers with explicit communication policies, communication should be managed at the output of the System Abstraction Layer, possibly with feedback from the Control Layer. The rationale in this case is that states or observations are local to each agent (as opposed to being implicitly shared), and may not contain enough information for an agent to unambiguously determine its own action at the Control Layer. Consequently, the Control Layer should also be capable of fusing system information arriving from different sources.
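For the centralized case, the local decision step on each robot can be sketched as follows. The assumptions are loud ones: the joint state is encoded from a fixed, ordered list of boolean predicates, the joint policy is a table from joint state index to a tuple with one action per agent, and all names are hypothetical rather than MDM's actual interfaces.

```python
"""Hypothetical sketch: a centralized controller replicated on each robot.

Assumptions (not MDM's actual interfaces): the joint state is a binary
encoding of an ordered list of predicates, and the joint policy maps each
joint state index to a tuple containing one action per agent.
"""
from typing import Dict, Sequence, Tuple

JointPolicy = Dict[int, Tuple[int, ...]]


def merge_predicates(*sources: Dict[str, bool]) -> Dict[str, bool]:
    """Fuse predicate values from local and remote sources (later sources win)."""
    merged: Dict[str, bool] = {}
    for source in sources:
        merged.update(source)
    return merged


def joint_state_index(predicates: Dict[str, bool], order: Sequence[str]) -> int:
    """Binary encoding: predicate order[i] contributes 2**i when it is true."""
    missing = [name for name in order if name not in predicates]
    if missing:
        raise KeyError(f"no value received yet for predicates: {missing}")
    return sum(1 << i for i, name in enumerate(order) if predicates[name])


def local_action(agent_id: int, predicates: Dict[str, bool],
                 order: Sequence[str], policy: JointPolicy) -> int:
    """Look up the joint action and return only this agent's component."""
    joint_action = policy[joint_state_index(predicates, order)]
    return joint_action[agent_id]
```

For example, if robot 0 observes `{"door_open": True}` locally and receives `{"box_held": False}` from robot 1, the merged predicates encode joint state 1 under the order `["door_open", "box_held"]`; with the policy `{0: (0, 0), 1: (1, 0), 2: (0, 1), 3: (1, 1)}`, robot 0 executes action 1 and robot 1, holding the same view, executes action 0. The "later sources win" merge is a simplification; a real deployment would need a rule, such as timestamps, for conflicting values of the same predicate.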

Figure 2: A multiagent MDM deployment scheme with multiple ROS Masters and managed communication. Predicate Manager topics are explicitly shared between ROS Masters, so each Observation Layer outputs joint observations.