Contents

Overview

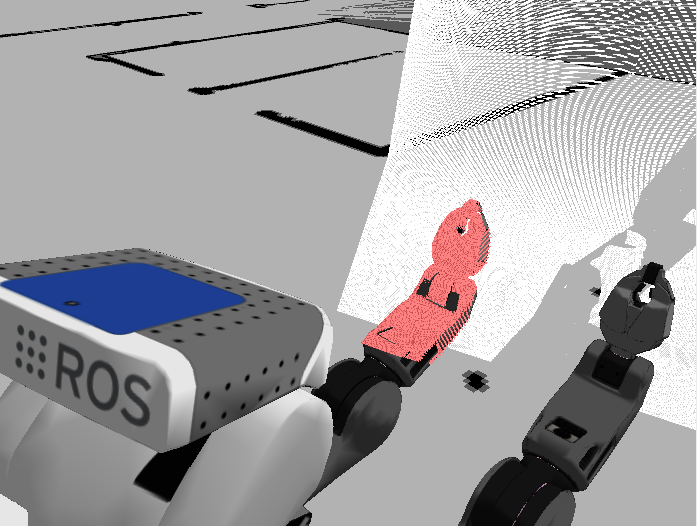

The rgbd_self_filter allows efficient GPU-based self filtering of a point cloud from an RGBD sensor (ex: Kinect) or stereo camera. The filter renders the robot from the perspective of the sensor, and subtracts any points that intersect with the robot's meshes.

Expected filter times are about 50-70ms for a 640x480 point cloud. This is significantly faster than the robot_self_filter.

The rgbd_self_filter is conceptually based on the camera_self_filter by Christian Bersch.

Code

Code is here: https://kforge.ros.org/rgbdselffilter/rgbd_self_filter

Setting Up RGBD Filter

To run the rgbd_self_filter with your robot, you will need:

- A URDF file of your robot with STL meshes for all joints

A sensor_msgs/CameraInfo topic that describes the RGBD camera.

Incoming sensor_msgs/PointCloud or sensor_msgs/PointCloud2 messages.

Incoming sensor_msgs/PointCloud2 messages must have the width and height fields set properly.

Incoming sensor_msgs/PointCloud messages must have the "u" and "v" channels filled out.

- Camera info and point cloud messages do not need to by sync'd.

- For stereo cameras, the camera info should come from the left, or primary, camera.

Running RGBD Filter

On the PR2, you will need to have a GPU installed on c2, and run "X" on that computer.

Install DVI loopback cable on the GPU: http://www.xtremesystems.org/forums/showthread.php?t=200444

- "sudo apt-get install xserver-xorg" and install nVidia drivers

- Run "sudo xinit" on c2

- Set "DISPLAY" environment variable to ":0" for node

For complete instructions, see pr2_camera_self_filter.

If the filter is unable to start up an X window, it will fall back to a passthrough filter.

Running the filter on a computer with a GPU running (like a desktop) requires only setting the environment variable.

How it Works

The RGBD filter renders the robot from the perspective of the given sensor. Meshes can be inflated by a uniform scale. Inflated meshes are inflated around the mesh barycenter.

Using the image coordinate (U/V) data from the incoming point cloud, the filter checks the expected depth of the robot mesh at that U/V coordinate. If the point cloud point is deeper, or if the point is within the "~close_threshold" of the depth, the point will be removed.

ROS API

rgbd_self_filter

Self-filtering for robot point cloudsSubscribed Topics

camera_info (sensor_msgs/CameraInfo)- Camera info for the RGBD sensor

- PointCloud data to filter. This will be converted to a PCL type, so "points2" is preferred.

- PointCloud2 data. If the data is dense packed (width and height correct), the filter is more efficient because it doesn't have to calculate the U/V indices.

Published Topics

points_mask (sensor_msgs/PointCloud2)- Filtered point cloud

- Only if "~publish_mask" param is true. Mask image for debugging.

- Only if "~pub_debug_cloud" param is true. Point cloud of robot model's rendering.

Parameters

~close_threshold (double, default: 0.02)- All points within this distance to robot meshes are removed.

- Near clip plane of rendering, all points closer than this removed.

- Valid URDF of your robot

- Robot meshes are "inflated" to remove points close to the meshes. Meshes are inflated by this scale.

- Meshes specified in this parameter will be inflated according to a custom scale.