Planet ROS

Planet ROS - http://planet.ros.org

ROS Discourse General: RobotCAD integrates Dynamic World Generator

Added a “Create custom world” tool based on the Dynamic World Generator repo. It lets you create a Gazebo world with static and dynamic obstacles directly in RobotCAD.

https://vk.com/video-219386643_456239082 - video demo

1 post - 1 participant

ROS Discourse General: ManyMove integration with Isaac SIM 5.0

ManyMove, an open-source framework built on ROS 2, MoveIt 2, and BehaviorTree.CPP, is now enabled to interact with NVIDIA Isaac Sim 5.0.

With this integration, you can:

Execute behavior-tree–based motion control directly to physics simulation.

Prototype and validate manipulation tasks like pick-and-place in a high-fidelity digital twin.

Streamline the path from simulation to real-world deployment.

ManyMove is designed for industrial applications and offers a wide range of examples with single or double robot configuration.

Its command structure is similar to industrial robot languages, easing the transition from classic robotics frameworks to ROS 2.

Behavior Trees power the logic cycles for flexibility and clarity.

Demos:

Combining ManyMove, Isaac Sim’s physics and rendering, and Isaac ROS reference workflows aims to make it easier to deploy GPU-accelerated packages for perception-driven manipulation.

How to run the examples

- Install & source the Humble or Jazzy version of ManyMove by following the instructions in the GitHub README.

- Install Isaac Sim 5.0 and enable the ROS2 Simulation Control extension.

- Launch the example:

ros2 launch manymove_bringup lite_isaac_sim_movegroup_fake_cpp_trees.launch.py

- Load .../manymove/manymove_planner/config/cuMotion/example_scene_lite6_grf_ros2.usd and start the simulation.

- Press START on ManyMove’s HMI.

Tested on Ubuntu 22.04 with ROS 2 Humble and on Ubuntu 24.04 with ROS 2 Jazzy.

Feedback welcome!

1 post - 1 participant

ROS Discourse General: Anyone here going to SWITCH 2025 after ROSCon 2025?

Hey everyone!

I noticed that SWITCH Conference is happening the same week as ROSCon in Singapore. It is a major deep‑tech innovation and startup event that brings together founders, investors, corporates, and researchers focused on research commercialization…

I’d love to know if anyone in the ROS community has been there before, and if so, would you recommend going (as a robotics enthusiast or aspiring entrepreneur)?

Also, is anyone here planning to attend this year?

Thanks in advance for any thoughts or advice!

1 post - 1 participant

ROS Discourse General: Guidance Nav2 for similar project: straight lines trajectory

Hi there,

I am currently working on a project that has similarities to something showcased on the Nav2 website landing page. I am looking for recommendations on global planner and local planner/controller plugins to execute a trajectory made of parallel passes spaced by a constant offset distance. It is similar to what is shown below (from the Nav2 website):

I was not sure which localization method was used in the example above (GPS-based, since it seems close to an outdoor agricultural application?). I am also unsure who or which company is behind it, so I could not give credit for the illustration; I would be thankful if anyone in the community knows.

In my case, at the end of each straight pass (in blue), the robot could reposition via a curved trajectory, or pause and execute two turns in place for the repositioning pass. I do not need to maintain speed during the turns.
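For readers following along, the pattern described above (parallel straight passes with a constant offset, driven in alternating directions) can be sketched in a few lines of plain Python. This is only an illustration under assumptions: the function name, the axis choice (passes along x, offset along y) and the example dimensions are hypothetical and do not come from Nav2 or from the project discussed here.

```python
from typing import List, Tuple

Waypoint = Tuple[float, float]


def parallel_pass_waypoints(
    length: float, spacing: float, passes: int, origin: Waypoint = (0.0, 0.0)
) -> List[Waypoint]:
    """Return the corner waypoints of a back-and-forth pattern.

    Each pass runs along x for `length` metres. Consecutive passes are
    offset along y by `spacing` metres and driven in alternating directions.
    """
    if passes < 1 or length <= 0 or spacing <= 0:
        raise ValueError("passes, length and spacing must be positive")
    x0, y0 = origin
    waypoints: List[Waypoint] = []
    for i in range(passes):
        y = y0 + i * spacing
        # Even passes go in +x, odd passes come back in -x.
        if i % 2 == 0:
            start_x, end_x = x0, x0 + length
        else:
            start_x, end_x = x0 + length, x0
        waypoints.append((start_x, y))  # start of the straight pass
        waypoints.append((end_x, y))    # end of the straight pass
    return waypoints


# Hypothetical example: three 10 m passes spaced 0.5 m apart.
corners = parallel_pass_waypoints(length=10.0, spacing=0.5, passes=3)
for corner in corners:
    print(corner)
```

The segment between the end of one pass and the start of the next is the repositioning move; how it is driven (curve or turns in place) is left to the controller.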

So far I have explored the straight line global planner plugin as shown in the Nav2 example and trialed Regulated Pure Pursuit and Vector Pursuit as local planner/controller. Are there other local planners I should consider for this type of application (straight line, then repositioning to the next straight line)? The goal is to maintain a precise trajectory on the straight passes while following an acceleration, cruise, deceleration speed profile. I am currently trialing these in Gazebo, simulating the environment where the robot will be deployed. I am able to generate the required waypoints via the Simple Commander API, and I am using AMCL for now as my application is indoor.

Using Gazebo and AMCL, here is the kind of result I obtain via PlotJuggler:

First off, there are differences between AMCL and ground truth, so AMCL would require some improvements, but I can see that the AMCL estimate passes through each corner (the waypoints), so from its own perception the robot reaches all waypoints. Second, the trajectory actually followed (ground truth) is within +/-10 cm of the target. I am not sure whether another or a better-tuned controller can improve the accuracy by an order of magnitude; I am aiming for about +/-1 cm at 1 m/s. This depends on the combination of localization and control method. I am open to discussing whether I need to improve localization first (AMCL or other, such as visual + IMU odometry, UWB, …). I am also using Humble, which does not have the Python API driveOnHeading, to trial a pure straight-line command (maybe similar to publishing a positive x component on /cmd_vel).
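As a point of reference for the acceleration, cruise, deceleration profile mentioned above, here is a minimal sketch of a trapezoidal speed profile expressed as a function of distance along one straight pass. It is not how any Nav2 controller implements velocity regulation; the function name and the numbers are assumptions chosen for illustration.

```python
import math


def trapezoid_speed(s: float, length: float, v_max: float, accel: float) -> float:
    """Target speed (m/s) at distance s along a pass of the given length.

    The robot starts and ends the pass at rest and uses the same magnitude
    `accel` (m/s^2) for speeding up and slowing down.
    """
    if not 0.0 <= s <= length:
        raise ValueError("s must lie within the pass")
    # Speed reachable from rest after travelling s.
    v_up = math.sqrt(2.0 * accel * s)
    # Highest speed from which the robot can still stop in the remaining distance.
    v_down = math.sqrt(2.0 * accel * (length - s))
    return min(v_max, v_up, v_down)


# Hypothetical 10 m pass, 1 m/s cruise speed, 0.5 m/s^2 acceleration.
for s in (0.0, 0.25, 5.0, 9.75, 10.0):
    print(s, trapezoid_speed(s, length=10.0, v_max=1.0, accel=0.5))
```

On a pass too short to reach `v_max`, the same function yields a triangular profile. Because the target speed is exactly zero at `s = 0`, a real controller would need a small minimum speed or a time-based ramp to start moving.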
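One way to put a number on the tracking accuracy discussed above is the cross-track error, i.e. the perpendicular distance between each ground-truth position and the straight line joining two consecutive waypoints. The sketch below assumes 2D positions in a common frame; the function name and the sample values are hypothetical and not taken from the plots in this post.

```python
import math
from typing import Tuple

Point = Tuple[float, float]


def cross_track_error(p: Point, a: Point, b: Point) -> float:
    """Signed perpendicular distance (m) from p to the line through a and b.

    Positive values mean p lies to the left of the direction a -> b.
    """
    dx, dy = b[0] - a[0], b[1] - a[1]
    norm = math.hypot(dx, dy)
    if norm == 0.0:
        raise ValueError("a and b must be distinct points")
    return (dx * (p[1] - a[1]) - dy * (p[0] - a[0])) / norm


# Hypothetical ground-truth samples along a pass from (0, 0) to (10, 0).
start, end = (0.0, 0.0), (10.0, 0.0)
samples = [(1.0, 0.02), (5.0, 0.1), (9.0, -0.05)]
worst = max(abs(cross_track_error(p, start, end)) for p in samples)
print(f"max cross-track error: {worst:.3f} m")
```

Computing this once against ground truth and once against the AMCL estimate would separate the controller's contribution from the localization error.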

Thank you very much for your help and guidance,

Nicolas

PS: this is my first post, so apologies if this is the wrong platform. I was trying to find a Nav2-specific platform but could not join the Nav2 Slack.

2 posts - 2 participants

ROS Discourse General: ROS 2 Desktop Apps with rclnodejs + Electron

Looking for an alternative to traditional ROS visualization tools? Want to create custom robotics interfaces that are modern, interactive, and cross-platform?

The rclnodejs + Electron combination offers a powerful solution for building ROS 2 desktop applications using familiar web technologies, giving you the flexibility to create exactly the interface your project needs.

Why Use This?

- Cross-platform - Windows, macOS, Linux

- Modern UI - HTML5, CSS3, Three.js, WebGL

- Easy deployment - Standalone desktop apps

Architecture

Example: Turtle TF2 Demo

Real-time TF2 coordinate frame visualization with interactive 3D graphics:

Perfect for: Robot dashboards, monitoring tools, educational apps, research prototypes.

Learn more: https://github.com/RobotWebTools/rclnodejs

1 post - 1 participant

ROS Discourse General: Think Outside the Box: Controlling a Robotic Arm with a Mobile Phone Gyroscope

Hi ROS Community,

We’d like to share an experimental project where we explored controlling a robotic arm using the gyroscope and IMU sensors of a mobile phone. By streaming the phone’s sensor data via WebSocket, performing attitude estimation with an EKF, and mapping the results to the robotic arm, we managed to achieve intuitive motion control without the need for traditional joysticks or external controllers.

This post documents the setup process, environment configuration, and code usage so you can try it out yourself or adapt it to your own robotic applications.

Abstract

This project implements robotic arm control using mobile phone sensor data (accelerometer, gyroscope, magnetometer).

The data is transmitted from the mobile phone to a local Python script in real time via WebSocket.

After attitude calculation, the script controls the movement of the robotic arm.

Tags

Mobile Phone Sensor, Attitude Remote Control, IMU, Attitude Calculation, EKF

Code Repository

GitHub: Agilex-College/piper/mobilePhoneCtl

Function Demonstration

Environment Configuration

- Operating System: Ubuntu 18.04+ (recommended)

- Python Environment: Python 3.6+

- Clone the project:

git clone https://github.com/agilexrobotics/Agilex-College.git

cd Agilex-College/piper/mobilePhoneCtl/

- Install dependencies:

pip install -r requirements.txt --upgrade

- Ensure that piper_sdk and its hardware dependencies are properly installed and configured.

Mobile Phone App Installation

We recommend using Sensor Stream IMU+ (a paid app) for mobile phone–side data collection and transmission:

- Install the app from the official website or app store.

- Supports both iOS and Android.

App Usage Instructions

- Open the Sensor Stream IMU+ app.

- Enter the IP address and port of the computer running this script (default port: 5000), e.g., 192.168.1.100:5000.

- Select sensors to transmit: Accelerometer, Gyroscope, Magnetometer.

- Set an update interval (e.g., 20 ms).

- Tap Start Streaming to begin data transmission.

Python Script Usage

- Connect the robotic arm and activate the CAN module:

sudo ip link set can0 up type can bitrate 1000000

- Run main.py in this directory:

python3 main.py

- The script will display the local IP address and port—fill this into the app.

- Once the app starts streaming, the script performs attitude calculation and sends the results to the robotic arm via the EndPoseCtrl interface of piper_sdk.

Data Transmission & Control Explanation

- The mobile phone sends 3-axis accelerometer, gyroscope, and magnetometer data in real time via WebSocket.

- The script employs an Extended Kalman Filter (EKF) to compute Euler angles (roll, pitch, yaw).

- Calculated attitudes are sent to the robotic arm via piper_sdk’s EndPoseCtrl for real-time motion control.
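The EKF itself lives in the repository. As a rough illustration of the kind of measurement such a filter can correct against, the sketch below derives roll and pitch from a single accelerometer sample. It is not the repository's code and not an EKF; the function name is hypothetical, and it assumes the phone is at rest and reports +g on its z axis when lying flat (axis conventions differ between apps and devices).

```python
import math
from typing import Tuple


def roll_pitch_from_accel(ax: float, ay: float, az: float) -> Tuple[float, float]:
    """Estimate roll and pitch (radians) from one accelerometer sample.

    Assumes the device is not accelerating, so the sensor measures gravity
    only, and that a flat device reads +g on z (an assumption).
    """
    roll = math.atan2(ay, az)
    pitch = math.atan2(-ax, math.hypot(ay, az))
    return roll, pitch


# Phone lying flat: gravity is entirely on the z axis.
flat = roll_pitch_from_accel(0.0, 0.0, 9.81)
# Phone rolled 90 degrees onto its side: gravity is entirely on the y axis.
on_side = roll_pitch_from_accel(0.0, 9.81, 0.0)
print(flat, on_side)
```

Yaw cannot be recovered from gravity alone, which is one reason the magnetometer and gyroscope are streamed as well and fused by the filter.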

Precautions

- Ensure the mobile phone and computer are on the same LAN, and that port 5000 is allowed through the firewall.

- Safety first: stay clear of the moving robotic arm to avoid collisions.

- To modify the port or initial pose, edit the relevant parameters in main.py.

Related Projects

Check out these previous tutorials on controlling the AgileX PIPER robotic arm:

Fixed Position Recording & Replay for AgileX PIPER Robotic Arm

Continuous Trajectory Recording & Replay for AgileX PIPER Robotic Arm

That’s all for this demo!

If you’re interested in experimenting with mobile-sensor-based robot control, feel free to check out the GitHub repo (again) and give it a try.

We’d love to hear your thoughts, suggestions, or ideas for extending this approach—whether it’s integrating ROS2 teleoperation, improving the EKF pipeline, or adding gesture control.

Looking forward to your feedback and discussions!

1 post - 1 participant

ROS Discourse General: Don't trust LLM math with your rosbags? Bagel has a solution

Hi ROS Community,

A couple of weeks ago, we launched an open-source tool called Bagel. It lets you troubleshoot rosbags using natural language, much as you would use ChatGPT.

We released a new feature today to address a common concern raised by the ROS community.

“We don’t trust LLM math.”

That’s feedback we kept hearing from the ROS community regarding Bagel.

We heard you, so we built a solution: whenever Bagel needs to do a mathematical calculation on a robotics log, it now creates a DuckDB table and runs a clear SQL query against the data.

That makes Bagel’s math:

• Transparent – you can see the query and verify it manually.

• Deterministic – no “LLM guesswork.”
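To make the idea concrete, here is a small sketch of the "load the samples into a table, then answer with a visible SQL query" pattern. It is not Bagel's code: Python's built-in sqlite3 stands in for DuckDB so the example runs without extra packages, and the table name, columns and sample values are all made up for illustration.

```python
import sqlite3

# Hypothetical samples extracted from a log: (timestamp in s, speed in m/s).
samples = [(0.0, 0.0), (0.5, 0.4), (1.0, 0.8), (1.5, 1.2), (2.0, 1.0)]

con = sqlite3.connect(":memory:")
con.execute("CREATE TABLE speed_log (t REAL, speed REAL)")
con.executemany("INSERT INTO speed_log VALUES (?, ?)", samples)

# The query is plain text, so it can be shown to the user and re-run by hand.
query = "SELECT MAX(speed), AVG(speed) FROM speed_log WHERE t >= 0.5"
max_speed, avg_speed = con.execute(query).fetchone()
con.close()

print(query)
print(max_speed, avg_speed)
```

The arithmetic is done by the database engine rather than by the language model, so running the same query on the same data always gives the same result.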

We’re launching this today and would love community feedback. Love it or hate it? Let us know! Or yell at us in our Discord.

https://github.com/Extelligence-ai/bagel

3 posts - 2 participants

ROS Discourse General: Image_transport and point_cloud_transport improvements

Over the last few months we have been improving the point_cloud_transport and image_transport APIs, in particular to close some major ROS 2 feature gaps:

- Support for lifecycle nodes

- Replacing rmw_qos_profile_t with rclcpp::QoS

Related PRs:

- https://github.com/ros-perception/image_common/pull/364

- Support lifecycle node - NodeInterfaces by ahcorde · Pull Request #352 · ros-perception/image_common · GitHub

- Removed deprecated code by ahcorde · Pull Request #356 · ros-perception/image_common · GitHub

- Update subscriber filter by elsayedelsheikh · Pull Request #126 · ros-perception/point_cloud_transport · GitHub

- Simplify NodeInterface API mehotd call by ahcorde · Pull Request #129 · ros-perception/point_cloud_transport · GitHub

- Deprecated rmw_qos_profile_t by ahcorde · Pull Request #125 · ros-perception/point_cloud_transport · GitHub

- Feat/Add LifecycleNode Support by elsayedelsheikh · Pull Request #109 · ros-perception/point_cloud_transport · GitHub

Some of these changes break ABI (only on Rolling). If you maintain a point_cloud_transport or image_transport plugin, you should update your code to remove the new deprecation warnings and avoid potential segfaults caused by these changes.

Thank you to everyone involved in these changes. We hope this helps!

1 post - 1 participant

ROS Industrial: Industrial Calibration Refresh

A number of ease-of-use updates have been made to the ROS-Industrial Industrial Calibration repository. These updates improve usability and significantly expand the documentation. The updates include:

- Cleaning up the intrinsic and extrinsic calibration widgets so that they share common infrastructure, reducing the total amount of code.

- The widgets are now in the application main window, so they can be used more easily in Rviz, where the image display cannot or does not need to be in the main widget.

- The update also includes a widget that separates the calibration configuration/data viewer from the results page. This was added to support usage in contexts such as Rviz that are more limited in vertical space, and it makes both pages easier to read.

- An “instructions” action and tool bar button has been added to provide information on how to run the calibration apps.

A new documentation page has been created that includes information and examples to help users get the most out of the calibration and obtain the most accurate hand-eye calibration. This includes an example and unit test for the camera intrinsic calibration using the 10 x 10 modified circle grid data set.

The updated documentation page includes a primer that covers the basics of calibration and a “getting started” page with guidance on building the application and the ROS 1 and ROS 2 interfaces, as well as links to the GUI applications. Docker support has also been provided for those who prefer to work from a Docker container.

We look forward to feedback on this latest update and hope the community finds it useful for achieving robust industrial calibration in robotic perception systems and applications.

ROS Discourse General: Ros-python-wheels: pip-installable ROS 2 packages

Hello ROS community,

I’d like to share that I’ve been working on making ROS 2 packages pip-installable with a project called ros-python-wheels! Furthermore, a select number of ROS packages are available on a Python package index that I host at Cloudsmith.io.

Getting started with ROS 2 in Python is as simple as running:

pip install --extra-index-url https://dl.cloudsmith.io/public/ros-python-wheels/kilted/python/simple ros-rclpy[fastrtps]

Key Benefits

This enables a first-class developer experience when working with ROS in Python projects:

- Easy Project Integration: To include the ROS Python Client (rclpy) in a Python project, simply add ros-rclpy[fastrtps] with its package repository to your pyproject.toml file.

- Enable Modern Python Tooling: Easily manage Python ROS dependencies using modern Python tools like uv and Poetry.

- Lightweight: The wheels of rclpy and all of its dependencies have a total size of around 15MB.

- Portable: Allows ROS to be run on different Linux distributions.

Comparison

This approach provides a distinct alternative to existing solutions:

- In comparison to RoboStack, wheels are more lightweight and flexible than conda packages.

- rospypi is a similar approach for ROS 1, whereas ros-python-wheels targets ROS 2.

I’d love to hear your thoughts on this project. I’d also appreciate a star on my project if you find this useful or if you think this is a good direction for ROS!

4 posts - 2 participants

ROS Discourse General: Announcement: rclrs 0.5.0 Release

We are thrilled to announce the latest official release of rclrs (0.5.0), the Rust client library for ROS 2! This latest version brings significant improvements and enhancements to rclrs, making it easier than ever to develop robotics applications in Rust.

Some of the highlights of this release include:

- Fully async API, compatible with any async runtime (tokio, async_std, etc.)

- Support for Windows

- Support for Jazzy, Kilted and Rolling

- Parameter services

In addition to the new release of rclrs, we’re also happy to announce that rosidl_generator_rs is now released on the ROS buildfarm (ROS Package: rosidl_generator_rs), paving the way for shipping generated Rust messages alongside the current messages for C++ and Python.

I’ll be giving a talk at ROSCon UK in Edinburgh and at ROSCon in Singapore about ros2-rust, rclrs and all the projects that we’ve been working on to bring Rust support to ROS. Happy to chat with anyone interested in our work, or in Rust in ROS in general.

And if you want to be part of the development of the next release, you’re more than welcome to join us on our Matrix chat channel!

I can’t emphasize enough how much this release was a community effort; we’re happy to have people from so many different affiliations contributing to rclrs. This release wouldn’t have been possible without:

- Abhishek Kashyap

- Agustin Alba Chicar

- alluring-mushroom

- Arjo Chakravarty

- Javier Balloffet

- Jacob Hassold

- Kimberly N. McGuire

- Luca Della Vedova

- Lucas Chiesa

- Marco Boneberger

- Michael X. Grey

- Milan Vukov

- Nathan Wiebe Neufeldt

- Oscarchoi

- Romain Reignier

- Rufus Wong

- Sam Privett

- Saron Kim

- Xavier L’Heureux

1 post - 1 participant

ROS Discourse General: [Project] ROS 2 MediaPipe Suite — parameterized multi-model node with landmarks & gesture events

We’re releasing a small ROS 2 suite that turns Google MediaPipe Tasks into reusable components.

A single parameterized node switches between hand / pose / face, publishes landmarks + high-level gesture events, ships RViz viz (overlay + MarkerArray), and a turtlesim demo for perception → event → behavior.

Newcomer note: This is my first ROS 2 package—I kept the setup minimal so students and prototypers can plug MediaPipe into ROS 2 in minutes.

Turtlesim Demo:

Repo: https://github.com/PME26Elvis/mediapipe_ros2_suite · ROS 2: Humble (CI), Jazzy (experimental) · License: Apache-2.0

CI: Humble — passing (required) · Jazzy — experimental (non-blocking)

Quick start:

ros2 run v4l2_camera v4l2_camera_node

ros2 launch mediapipe_ros2_py mp_node.launch.py model:=hand image_topic:=/image_raw start_rviz:=true

ros2 run turtlesim turtlesim_node & ros2 run mediapipe_ros2_py gesture_to_turtlesim

Feedback & contributions welcome.

1 post - 1 participant

ROS Discourse General: New packages and patch release for Jazzy Jalisco 2025-08-20

We’re happy to announce 82 new packages and 705 updates are now available on Ubuntu Noble on amd64 for Jazzy Jalisco.

This sync was tagged as jazzy/2025-08-20.

Package Updates for jazzy

Note that package counts include dbgsym packages which have been filtered out from the list below
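For anyone who wants to post-process a sync list like the one in this announcement, the sketch below parses lines in the two formats used here (`name: version` for added packages, `name: old → new` for updates) and optionally drops the `-dbgsym` entries. The function and type names are hypothetical; this is not the tooling used to generate the announcement.

```python
import re
from typing import Iterable, List, NamedTuple, Optional


class PackageEntry(NamedTuple):
    name: str
    old_version: Optional[str]  # None for newly added packages
    new_version: str


# "- name: version" or "- name: old → new"
LINE_RE = re.compile(
    r"^-\s*(?P<name>[\w.+-]+):\s*(?:(?P<old>\S+)\s*→\s*)?(?P<new>\S+)\s*$"
)


def parse_sync_lines(
    lines: Iterable[str], include_dbgsym: bool = False
) -> List[PackageEntry]:
    """Parse list lines, skipping anything that does not match."""
    entries: List[PackageEntry] = []
    for line in lines:
        match = LINE_RE.match(line.strip())
        if match is None:
            continue
        name = match.group("name")
        if not include_dbgsym and name.endswith("-dbgsym"):
            continue
        entries.append(PackageEntry(name, match.group("old"), match.group("new")))
    return entries


sample = [
    "- ros-jazzy-crazyflie: 1.0.3-1",
    "- ros-jazzy-crazyflie-dbgsym: 1.0.3-1",
    "- ros-jazzy-action-msgs: 2.0.2-2 → 2.0.3-1",
]
entries = parse_sync_lines(sample)
for entry in entries:
    print(entry)
```

Passing `include_dbgsym=True` keeps the debug-symbol packages, which is useful when reconciling against the totals quoted in the headings.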

Added Packages [82]:

- ros-jazzy-automatika-embodied-agents: 0.4.1-1

- ros-jazzy-automatika-embodied-agents-dbgsym: 0.4.1-1

- ros-jazzy-crazyflie: 1.0.3-1

- ros-jazzy-crazyflie-dbgsym: 1.0.3-1

- ros-jazzy-crazyflie-examples: 1.0.3-1

- ros-jazzy-crazyflie-interfaces: 1.0.3-1

- ros-jazzy-crazyflie-interfaces-dbgsym: 1.0.3-1

- ros-jazzy-crazyflie-py: 1.0.3-1

- ros-jazzy-crazyflie-sim: 1.0.3-1

- ros-jazzy-cx-ament-index-plugin: 0.1.2-1

- ros-jazzy-cx-ament-index-plugin-dbgsym: 0.1.2-1

- ros-jazzy-cx-clips-env-manager: 0.1.2-1

- ros-jazzy-cx-clips-env-manager-dbgsym: 0.1.2-1

- ros-jazzy-cx-config-plugin: 0.1.2-1

- ros-jazzy-cx-config-plugin-dbgsym: 0.1.2-1

- ros-jazzy-cx-example-plugin: 0.1.2-1

- ros-jazzy-cx-example-plugin-dbgsym: 0.1.2-1

- ros-jazzy-cx-executive-plugin: 0.1.2-1

- ros-jazzy-cx-executive-plugin-dbgsym: 0.1.2-1

- ros-jazzy-cx-file-load-plugin: 0.1.2-1

- ros-jazzy-cx-file-load-plugin-dbgsym: 0.1.2-1

- ros-jazzy-cx-msgs: 0.1.2-1

- ros-jazzy-cx-msgs-dbgsym: 0.1.2-1

- ros-jazzy-cx-plugin: 0.1.2-1

- ros-jazzy-cx-plugin-dbgsym: 0.1.2-1

- ros-jazzy-cx-protobuf-plugin: 0.1.2-1

- ros-jazzy-cx-protobuf-plugin-dbgsym: 0.1.2-1

- ros-jazzy-cx-ros-comm-gen: 0.1.2-1

- ros-jazzy-cx-ros-msgs-plugin: 0.1.2-1

- ros-jazzy-cx-ros-msgs-plugin-dbgsym: 0.1.2-1

- ros-jazzy-cx-tf2-pose-tracker-plugin: 0.1.2-1

- ros-jazzy-cx-tf2-pose-tracker-plugin-dbgsym: 0.1.2-1

- ros-jazzy-cx-utils: 0.1.2-1

- ros-jazzy-cx-utils-dbgsym: 0.1.2-1

- ros-jazzy-ffw-robot-manager: 1.1.9-1

- ros-jazzy-ffw-robot-manager-dbgsym: 1.1.9-1

- ros-jazzy-ffw-swerve-drive-controller: 1.1.9-1

- ros-jazzy-ffw-swerve-drive-controller-dbgsym: 1.1.9-1

- ros-jazzy-husarion-components-description: 0.0.2-2

- ros-jazzy-live555-vendor: 0.20250719.0-1

- ros-jazzy-live555-vendor-dbgsym: 0.20250719.0-1

- ros-jazzy-omni-wheel-drive-controller: 4.30.1-1

- ros-jazzy-omni-wheel-drive-controller-dbgsym: 4.30.1-1

- ros-jazzy-protobuf-comm: 0.9.3-1

- ros-jazzy-protobuf-comm-dbgsym: 0.9.3-1

- ros-jazzy-reductstore-agent: 0.2.0-1

- ros-jazzy-rosbag2-performance-benchmarking-dbgsym: 0.26.9-1

- ros-jazzy-rosbag2-performance-benchmarking-msgs-dbgsym: 0.26.9-1

- ros-jazzy-rosidlcpp: 0.3.0-1

- ros-jazzy-rosidlcpp-generator-c: 0.3.0-1

- ros-jazzy-rosidlcpp-generator-c-dbgsym: 0.3.0-1

- ros-jazzy-rosidlcpp-generator-core: 0.3.0-1

- ros-jazzy-rosidlcpp-generator-core-dbgsym: 0.3.0-1

- ros-jazzy-rosidlcpp-generator-cpp: 0.3.0-1

- ros-jazzy-rosidlcpp-generator-cpp-dbgsym: 0.3.0-1

- ros-jazzy-rosidlcpp-generator-py: 0.3.0-1

- ros-jazzy-rosidlcpp-generator-py-dbgsym: 0.3.0-1

- ros-jazzy-rosidlcpp-generator-type-description: 0.3.0-1

- ros-jazzy-rosidlcpp-generator-type-description-dbgsym: 0.3.0-1

- ros-jazzy-rosidlcpp-parser: 0.3.0-1

- ros-jazzy-rosidlcpp-parser-dbgsym: 0.3.0-1

- ros-jazzy-rosidlcpp-typesupport-c: 0.3.0-1

- ros-jazzy-rosidlcpp-typesupport-c-dbgsym: 0.3.0-1

- ros-jazzy-rosidlcpp-typesupport-cpp: 0.3.0-1

- ros-jazzy-rosidlcpp-typesupport-cpp-dbgsym: 0.3.0-1

- ros-jazzy-rosidlcpp-typesupport-fastrtps-c: 0.3.0-1

- ros-jazzy-rosidlcpp-typesupport-fastrtps-c-dbgsym: 0.3.0-1

- ros-jazzy-rosidlcpp-typesupport-fastrtps-cpp: 0.3.0-1

- ros-jazzy-rosidlcpp-typesupport-fastrtps-cpp-dbgsym: 0.3.0-1

- ros-jazzy-rosidlcpp-typesupport-introspection-c: 0.3.0-1

- ros-jazzy-rosidlcpp-typesupport-introspection-c-dbgsym: 0.3.0-1

- ros-jazzy-rosidlcpp-typesupport-introspection-cpp: 0.3.0-1

- ros-jazzy-rosidlcpp-typesupport-introspection-cpp-dbgsym: 0.3.0-1

- ros-jazzy-turtlebot3-home-service-challenge: 1.0.5-1

- ros-jazzy-turtlebot3-home-service-challenge-aruco: 1.0.5-1

- ros-jazzy-turtlebot3-home-service-challenge-core: 1.0.5-1

- ros-jazzy-turtlebot3-home-service-challenge-manipulator: 1.0.5-1

- ros-jazzy-turtlebot3-home-service-challenge-manipulator-dbgsym: 1.0.5-1

- ros-jazzy-turtlebot3-home-service-challenge-tools: 1.0.5-1

- ros-jazzy-yasmin-dbgsym: 3.3.0-1

- ros-jazzy-yasmin-ros-dbgsym: 3.3.0-1

- ros-jazzy-yasmin-viewer-dbgsym: 3.3.0-1

Updated Packages [705]:

- ros-jazzy-ackermann-steering-controller: 4.27.0-1 → 4.30.1-1

- ros-jazzy-ackermann-steering-controller-dbgsym: 4.27.0-1 → 4.30.1-1

- ros-jazzy-action-msgs: 2.0.2-2 → 2.0.3-1

- ros-jazzy-action-msgs-dbgsym: 2.0.2-2 → 2.0.3-1

- ros-jazzy-action-tutorials-cpp: 0.33.5-1 → 0.33.6-1

- ros-jazzy-action-tutorials-cpp-dbgsym: 0.33.5-1 → 0.33.6-1

- ros-jazzy-action-tutorials-interfaces: 0.33.5-1 → 0.33.6-1

- ros-jazzy-action-tutorials-interfaces-dbgsym: 0.33.5-1 → 0.33.6-1

- ros-jazzy-action-tutorials-py: 0.33.5-1 → 0.33.6-1

- ros-jazzy-admittance-controller: 4.27.0-1 → 4.30.1-1

- ros-jazzy-admittance-controller-dbgsym: 4.27.0-1 → 4.30.1-1

- ros-jazzy-ament-clang-format: 0.17.2-1 → 0.17.3-1

- ros-jazzy-ament-clang-tidy: 0.17.2-1 → 0.17.3-1

- ros-jazzy-ament-cmake-clang-format: 0.17.2-1 → 0.17.3-1

- ros-jazzy-ament-cmake-clang-tidy: 0.17.2-1 → 0.17.3-1

- ros-jazzy-ament-cmake-copyright: 0.17.2-1 → 0.17.3-1

- ros-jazzy-ament-cmake-cppcheck: 0.17.2-1 → 0.17.3-1

- ros-jazzy-ament-cmake-cpplint: 0.17.2-1 → 0.17.3-1

- ros-jazzy-ament-cmake-flake8: 0.17.2-1 → 0.17.3-1

- ros-jazzy-ament-cmake-lint-cmake: 0.17.2-1 → 0.17.3-1

- ros-jazzy-ament-cmake-mypy: 0.17.2-1 → 0.17.3-1

- ros-jazzy-ament-cmake-pclint: 0.17.2-1 → 0.17.3-1

- ros-jazzy-ament-cmake-pep257: 0.17.2-1 → 0.17.3-1

- ros-jazzy-ament-cmake-pycodestyle: 0.17.2-1 → 0.17.3-1

- ros-jazzy-ament-cmake-pyflakes: 0.17.2-1 → 0.17.3-1

- ros-jazzy-ament-cmake-uncrustify: 0.17.2-1 → 0.17.3-1

- ros-jazzy-ament-cmake-xmllint: 0.17.2-1 → 0.17.3-1

- ros-jazzy-ament-copyright: 0.17.2-1 → 0.17.3-1

- ros-jazzy-ament-cppcheck: 0.17.2-1 → 0.17.3-1

- ros-jazzy-ament-cpplint: 0.17.2-1 → 0.17.3-1

- ros-jazzy-ament-flake8: 0.17.2-1 → 0.17.3-1

- ros-jazzy-ament-lint: 0.17.2-1 → 0.17.3-1

- ros-jazzy-ament-lint-auto: 0.17.2-1 → 0.17.3-1

- ros-jazzy-ament-lint-cmake: 0.17.2-1 → 0.17.3-1

- ros-jazzy-ament-lint-common: 0.17.2-1 → 0.17.3-1

- ros-jazzy-ament-mypy: 0.17.2-1 → 0.17.3-1

- ros-jazzy-ament-pclint: 0.17.2-1 → 0.17.3-1

- ros-jazzy-ament-pep257: 0.17.2-1 → 0.17.3-1

- ros-jazzy-ament-pycodestyle: 0.17.2-1 → 0.17.3-1

- ros-jazzy-ament-pyflakes: 0.17.2-1 → 0.17.3-1

- ros-jazzy-ament-uncrustify: 0.17.2-1 → 0.17.3-1

- ros-jazzy-ament-xmllint: 0.17.2-1 → 0.17.3-1

- ros-jazzy-apriltag: 3.4.3-1 → 3.4.4-1

- ros-jazzy-apriltag-dbgsym: 3.4.3-1 → 3.4.4-1

- ros-jazzy-apriltag-detector: 3.0.2-1 → 3.0.3-1

- ros-jazzy-apriltag-detector-dbgsym: 3.0.2-1 → 3.0.3-1

- ros-jazzy-apriltag-detector-mit: 3.0.2-1 → 3.0.3-1

- ros-jazzy-apriltag-detector-mit-dbgsym: 3.0.2-1 → 3.0.3-1

- ros-jazzy-apriltag-detector-umich: 3.0.2-1 → 3.0.3-1

- ros-jazzy-apriltag-detector-umich-dbgsym: 3.0.2-1 → 3.0.3-1

- ros-jazzy-apriltag-draw: 3.0.2-1 → 3.0.3-1

- ros-jazzy-apriltag-draw-dbgsym: 3.0.2-1 → 3.0.3-1

- ros-jazzy-apriltag-tools: 3.0.2-1 → 3.0.3-1

- ros-jazzy-apriltag-tools-dbgsym: 3.0.2-1 → 3.0.3-1

- ros-jazzy-automatika-ros-sugar: 0.2.9-1 → 0.3.1-1

- ros-jazzy-automatika-ros-sugar-dbgsym: 0.2.9-1 → 0.3.1-1

- ros-jazzy-axis-camera: 3.0.1-1 → 3.0.2-1

- ros-jazzy-axis-description: 3.0.1-1 → 3.0.2-1

- ros-jazzy-axis-msgs: 3.0.1-1 → 3.0.2-1

- ros-jazzy-axis-msgs-dbgsym: 3.0.1-1 → 3.0.2-1

- ros-jazzy-backward-ros: 1.0.7-1 → 1.0.8-1

- ros-jazzy-backward-ros-dbgsym: 1.0.7-1 → 1.0.8-1

- ros-jazzy-bicycle-steering-controller: 4.27.0-1 → 4.30.1-1

- ros-jazzy-bicycle-steering-controller-dbgsym: 4.27.0-1 → 4.30.1-1

- ros-jazzy-builtin-interfaces: 2.0.2-2 → 2.0.3-1

- ros-jazzy-builtin-interfaces-dbgsym: 2.0.2-2 → 2.0.3-1

- ros-jazzy-canboat-vendor: 0.0.3-1 → 0.0.5-1

- ros-jazzy-canboat-vendor-dbgsym: 0.0.3-1 → 0.0.5-1

- ros-jazzy-clearpath-bt-joy: 2.5.1-1 → 2.6.4-1

- ros-jazzy-clearpath-common: 2.5.1-1 → 2.6.4-1

- ros-jazzy-clearpath-config: 2.5.0-1 → 2.6.2-1

- ros-jazzy-clearpath-control: 2.5.1-1 → 2.6.4-1

- ros-jazzy-clearpath-customization: 2.5.1-1 → 2.6.4-1

- ros-jazzy-clearpath-description: 2.5.1-1 → 2.6.4-1

- ros-jazzy-clearpath-diagnostics: 2.5.1-1 → 2.6.4-1

- ros-jazzy-clearpath-diagnostics-dbgsym: 2.5.1-1 → 2.6.4-1

- ros-jazzy-clearpath-generator-common: 2.5.1-1 → 2.6.4-1

- ros-jazzy-clearpath-generator-common-dbgsym: 2.5.1-1 → 2.6.4-1

- ros-jazzy-clearpath-manipulators: 2.5.1-1 → 2.6.4-1

- ros-jazzy-clearpath-manipulators-description: 2.5.1-1 → 2.6.4-1

- ros-jazzy-clearpath-motor-msgs: 2.4.0-1 → 2.6.0-1

- ros-jazzy-clearpath-motor-msgs-dbgsym: 2.4.0-1 → 2.6.0-1

- ros-jazzy-clearpath-mounts-description: 2.5.1-1 → 2.6.4-1

- ros-jazzy-clearpath-msgs: 2.4.0-1 → 2.6.0-1

- ros-jazzy-clearpath-platform-description: 2.5.1-1 → 2.6.4-1

- ros-jazzy-clearpath-platform-msgs: 2.4.0-1 → 2.6.0-1

- ros-jazzy-clearpath-platform-msgs-dbgsym: 2.4.0-1 → 2.6.0-1

- ros-jazzy-clearpath-sensors-description: 2.5.1-1 → 2.6.4-1

- ros-jazzy-coin-d4-driver: 1.0.0-1 → 1.0.1-1

- ros-jazzy-coin-d4-driver-dbgsym: 1.0.0-1 → 1.0.1-1

- ros-jazzy-composition: 0.33.5-1 → 0.33.6-1

- ros-jazzy-composition-dbgsym: 0.33.5-1 → 0.33.6-1

- ros-jazzy-composition-interfaces: 2.0.2-2 → 2.0.3-1

- ros-jazzy-composition-interfaces-dbgsym: 2.0.2-2 → 2.0.3-1

- ros-jazzy-compressed-depth-image-transport: 4.0.4-1 → 4.0.5-1

- ros-jazzy-compressed-depth-image-transport-dbgsym: 4.0.4-1 → 4.0.5-1

- ros-jazzy-compressed-image-transport: 4.0.4-1 → 4.0.5-1

- ros-jazzy-compressed-image-transport-dbgsym: 4.0.4-1 → 4.0.5-1

- ros-jazzy-control-msgs: 5.4.1-1 → 5.5.0-1

- ros-jazzy-control-msgs-dbgsym: 5.4.1-1 → 5.5.0-1

- ros-jazzy-control-toolbox: 4.5.0-1 → 4.6.0-1

- ros-jazzy-control-toolbox-dbgsym: 4.5.0-1 → 4.6.0-1

- ros-jazzy-controller-interface: 4.32.0-1 → 4.35.0-1

- ros-jazzy-controller-interface-dbgsym: 4.32.0-1 → 4.35.0-1

- ros-jazzy-controller-manager: 4.32.0-1 → 4.35.0-1

- ros-jazzy-controller-manager-dbgsym: 4.32.0-1 → 4.35.0-1

- ros-jazzy-controller-manager-msgs: 4.32.0-1 → 4.35.0-1

- ros-jazzy-controller-manager-msgs-dbgsym: 4.32.0-1 → 4.35.0-1

- ros-jazzy-demo-nodes-cpp: 0.33.5-1 → 0.33.6-1

- ros-jazzy-demo-nodes-cpp-dbgsym: 0.33.5-1 → 0.33.6-1

- ros-jazzy-demo-nodes-cpp-native: 0.33.5-1 → 0.33.6-1

- ros-jazzy-demo-nodes-cpp-native-dbgsym: 0.33.5-1 → 0.33.6-1

- ros-jazzy-demo-nodes-py: 0.33.5-1 → 0.33.6-1

- ros-jazzy-diff-drive-controller: 4.27.0-1 → 4.30.1-1

- ros-jazzy-diff-drive-controller-dbgsym: 4.27.0-1 → 4.30.1-1

- ros-jazzy-ds-dbw: 2.3.3-1 → 2.3.5-1

- ros-jazzy-ds-dbw-can: 2.3.3-1 → 2.3.5-1

- ros-jazzy-ds-dbw-can-dbgsym: 2.3.3-1 → 2.3.5-1

- ros-jazzy-ds-dbw-joystick-demo: 2.3.3-1 → 2.3.5-1

- ros-jazzy-ds-dbw-joystick-demo-dbgsym: 2.3.3-1 → 2.3.5-1

- ros-jazzy-ds-dbw-msgs: 2.3.3-1 → 2.3.5-1

- ros-jazzy-ds-dbw-msgs-dbgsym: 2.3.3-1 → 2.3.5-1

- ros-jazzy-dummy-map-server: 0.33.5-1 → 0.33.6-1

- ros-jazzy-dummy-map-server-dbgsym: 0.33.5-1 → 0.33.6-1

- ros-jazzy-dummy-robot-bringup: 0.33.5-1 → 0.33.6-1

- ros-jazzy-dummy-sensors: 0.33.5-1 → 0.33.6-1

- ros-jazzy-dummy-sensors-dbgsym: 0.33.5-1 → 0.33.6-1

- ros-jazzy-dynamixel-hardware-interface: 1.4.8-1 → 1.4.11-1

- ros-jazzy-dynamixel-hardware-interface-dbgsym: 1.4.8-1 → 1.4.11-1

- ros-jazzy-effort-controllers: 4.27.0-1 → 4.30.1-1

- ros-jazzy-effort-controllers-dbgsym: 4.27.0-1 → 4.30.1-1

- ros-jazzy-etsi-its-cam-coding: 3.2.1-1 → 3.3.0-1

- ros-jazzy-etsi-its-cam-coding-dbgsym: 3.2.1-1 → 3.3.0-1

- ros-jazzy-etsi-its-cam-conversion: 3.2.1-1 → 3.3.0-1

- ros-jazzy-etsi-its-cam-msgs: 3.2.1-1 → 3.3.0-1

- ros-jazzy-etsi-its-cam-msgs-dbgsym: 3.2.1-1 → 3.3.0-1

- ros-jazzy-etsi-its-cam-ts-coding: 3.2.1-1 → 3.3.0-1

- ros-jazzy-etsi-its-cam-ts-coding-dbgsym: 3.2.1-1 → 3.3.0-1

- ros-jazzy-etsi-its-cam-ts-conversion: 3.2.1-1 → 3.3.0-1

- ros-jazzy-etsi-its-cam-ts-msgs: 3.2.1-1 → 3.3.0-1

- ros-jazzy-etsi-its-cam-ts-msgs-dbgsym: 3.2.1-1 → 3.3.0-1

- ros-jazzy-etsi-its-coding: 3.2.1-1 → 3.3.0-1

- ros-jazzy-etsi-its-conversion: 3.2.1-1 → 3.3.0-1

- ros-jazzy-etsi-its-conversion-dbgsym: 3.2.1-1 → 3.3.0-1

- ros-jazzy-etsi-its-cpm-ts-coding: 3.2.1-1 → 3.3.0-1

- ros-jazzy-etsi-its-cpm-ts-coding-dbgsym: 3.2.1-1 → 3.3.0-1

- ros-jazzy-etsi-its-cpm-ts-conversion: 3.2.1-1 → 3.3.0-1

- ros-jazzy-etsi-its-cpm-ts-msgs: 3.2.1-1 → 3.3.0-1

- ros-jazzy-etsi-its-cpm-ts-msgs-dbgsym: 3.2.1-1 → 3.3.0-1

- ros-jazzy-etsi-its-denm-coding: 3.2.1-1 → 3.3.0-1

- ros-jazzy-etsi-its-denm-coding-dbgsym: 3.2.1-1 → 3.3.0-1

- ros-jazzy-etsi-its-denm-conversion: 3.2.1-1 → 3.3.0-1

- ros-jazzy-etsi-its-denm-msgs: 3.2.1-1 → 3.3.0-1

- ros-jazzy-etsi-its-denm-msgs-dbgsym: 3.2.1-1 → 3.3.0-1

- ros-jazzy-etsi-its-denm-ts-coding: 3.2.1-1 → 3.3.0-1

- ros-jazzy-etsi-its-denm-ts-coding-dbgsym: 3.2.1-1 → 3.3.0-1

- ros-jazzy-etsi-its-denm-ts-conversion: 3.2.1-1 → 3.3.0-1

- ros-jazzy-etsi-its-denm-ts-msgs: 3.2.1-1 → 3.3.0-1

- ros-jazzy-etsi-its-denm-ts-msgs-dbgsym: 3.2.1-1 → 3.3.0-1

- ros-jazzy-etsi-its-mapem-ts-coding: 3.2.1-1 → 3.3.0-1

- ros-jazzy-etsi-its-mapem-ts-coding-dbgsym: 3.2.1-1 → 3.3.0-1

- ros-jazzy-etsi-its-mapem-ts-conversion: 3.2.1-1 → 3.3.0-1

- ros-jazzy-etsi-its-mapem-ts-msgs: 3.2.1-1 → 3.3.0-1

- ros-jazzy-etsi-its-mapem-ts-msgs-dbgsym: 3.2.1-1 → 3.3.0-1

- ros-jazzy-etsi-its-mcm-uulm-coding: 3.2.1-1 → 3.3.0-1

- ros-jazzy-etsi-its-mcm-uulm-coding-dbgsym: 3.2.1-1 → 3.3.0-1

- ros-jazzy-etsi-its-mcm-uulm-conversion: 3.2.1-1 → 3.3.0-1

- ros-jazzy-etsi-its-mcm-uulm-msgs: 3.2.1-1 → 3.3.0-1

- ros-jazzy-etsi-its-mcm-uulm-msgs-dbgsym: 3.2.1-1 → 3.3.0-1

- ros-jazzy-etsi-its-messages: 3.2.1-1 → 3.3.0-1

- ros-jazzy-etsi-its-msgs: 3.2.1-1 → 3.3.0-1

- ros-jazzy-etsi-its-msgs-utils: 3.2.1-1 → 3.3.0-1

- ros-jazzy-etsi-its-primitives-conversion: 3.2.1-1 → 3.3.0-1

- ros-jazzy-etsi-its-rviz-plugins: 3.2.1-1 → 3.3.0-1

- ros-jazzy-etsi-its-rviz-plugins-dbgsym: 3.2.1-1 → 3.3.0-1

- ros-jazzy-etsi-its-spatem-ts-coding: 3.2.1-1 → 3.3.0-1

- ros-jazzy-etsi-its-spatem-ts-coding-dbgsym: 3.2.1-1 → 3.3.0-1

- ros-jazzy-etsi-its-spatem-ts-conversion: 3.2.1-1 → 3.3.0-1

- ros-jazzy-etsi-its-spatem-ts-msgs: 3.2.1-1 → 3.3.0-1

- ros-jazzy-etsi-its-spatem-ts-msgs-dbgsym: 3.2.1-1 → 3.3.0-1

- ros-jazzy-etsi-its-vam-ts-coding: 3.2.1-1 → 3.3.0-1

- ros-jazzy-etsi-its-vam-ts-coding-dbgsym: 3.2.1-1 → 3.3.0-1

- ros-jazzy-etsi-its-vam-ts-conversion: 3.2.1-1 → 3.3.0-1

- ros-jazzy-etsi-its-vam-ts-msgs: 3.2.1-1 → 3.3.0-1

- ros-jazzy-etsi-its-vam-ts-msgs-dbgsym: 3.2.1-1 → 3.3.0-1

- ros-jazzy-event-camera-renderer: 2.0.0-1 → 2.0.1-2

- ros-jazzy-event-camera-renderer-dbgsym: 2.0.0-1 → 2.0.1-2

- ros-jazzy-examples-rclcpp-async-client: 0.19.5-1 → 0.19.6-1

- ros-jazzy-examples-rclcpp-async-client-dbgsym: 0.19.5-1 → 0.19.6-1

- ros-jazzy-examples-rclcpp-cbg-executor: 0.19.5-1 → 0.19.6-1

- ros-jazzy-examples-rclcpp-cbg-executor-dbgsym: 0.19.5-1 → 0.19.6-1

- ros-jazzy-examples-rclcpp-minimal-action-client: 0.19.5-1 → 0.19.6-1

- ros-jazzy-examples-rclcpp-minimal-action-client-dbgsym: 0.19.5-1 → 0.19.6-1

- ros-jazzy-examples-rclcpp-minimal-action-server: 0.19.5-1 → 0.19.6-1

- ros-jazzy-examples-rclcpp-minimal-action-server-dbgsym: 0.19.5-1 → 0.19.6-1

- ros-jazzy-examples-rclcpp-minimal-client: 0.19.5-1 → 0.19.6-1

- ros-jazzy-examples-rclcpp-minimal-client-dbgsym: 0.19.5-1 → 0.19.6-1

- ros-jazzy-examples-rclcpp-minimal-composition: 0.19.5-1 → 0.19.6-1

- ros-jazzy-examples-rclcpp-minimal-composition-dbgsym: 0.19.5-1 → 0.19.6-1

- ros-jazzy-examples-rclcpp-minimal-publisher: 0.19.5-1 → 0.19.6-1

- ros-jazzy-examples-rclcpp-minimal-publisher-dbgsym: 0.19.5-1 → 0.19.6-1

- ros-jazzy-examples-rclcpp-minimal-service: 0.19.5-1 → 0.19.6-1

- ros-jazzy-examples-rclcpp-minimal-service-dbgsym: 0.19.5-1 → 0.19.6-1

- ros-jazzy-examples-rclcpp-minimal-subscriber: 0.19.5-1 → 0.19.6-1

- ros-jazzy-examples-rclcpp-minimal-subscriber-dbgsym: 0.19.5-1 → 0.19.6-1

- ros-jazzy-examples-rclcpp-minimal-timer: 0.19.5-1 → 0.19.6-1

- ros-jazzy-examples-rclcpp-minimal-timer-dbgsym: 0.19.5-1 → 0.19.6-1

- ros-jazzy-examples-rclcpp-multithreaded-executor: 0.19.5-1 → 0.19.6-1

- ros-jazzy-examples-rclcpp-multithreaded-executor-dbgsym: 0.19.5-1 → 0.19.6-1

- ros-jazzy-examples-rclcpp-wait-set: 0.19.5-1 → 0.19.6-1

- ros-jazzy-examples-rclcpp-wait-set-dbgsym: 0.19.5-1 → 0.19.6-1

- ros-jazzy-examples-rclpy-executors: 0.19.5-1 → 0.19.6-1

- ros-jazzy-examples-rclpy-guard-conditions: 0.19.5-1 → 0.19.6-1

- ros-jazzy-examples-rclpy-minimal-action-client: 0.19.5-1 → 0.19.6-1

- ros-jazzy-examples-rclpy-minimal-action-server: 0.19.5-1 → 0.19.6-1

- ros-jazzy-examples-rclpy-minimal-client: 0.19.5-1 → 0.19.6-1

- ros-jazzy-examples-rclpy-minimal-publisher: 0.19.5-1 → 0.19.6-1

- ros-jazzy-examples-rclpy-minimal-service: 0.19.5-1 → 0.19.6-1

- ros-jazzy-examples-rclpy-minimal-subscriber: 0.19.5-1 → 0.19.6-1

- ros-jazzy-examples-rclpy-pointcloud-publisher: 0.19.5-1 → 0.19.6-1

- ros-jazzy-examples-tf2-py: 0.36.12-1 → 0.36.14-1

- ros-jazzy-fastrtps: 2.14.4-1 → 2.14.5-2

- ros-jazzy-fastrtps-cmake-module: 3.6.1-1 → 3.6.2-1

- ros-jazzy-fastrtps-dbgsym: 2.14.4-1 → 2.14.5-2

- ros-jazzy-feetech-ros2-driver: 0.1.0-3 → 0.2.0-1

- ros-jazzy-feetech-ros2-driver-dbgsym: 0.1.0-3 → 0.2.0-1

- ros-jazzy-ffw: 1.0.9-1 → 1.1.9-1

- ros-jazzy-ffw-bringup: 1.0.9-1 → 1.1.9-1

- ros-jazzy-ffw-description: 1.0.9-1 → 1.1.9-1

- ros-jazzy-ffw-joint-trajectory-command-broadcaster: 1.0.9-1 → 1.1.9-1

- ros-jazzy-ffw-joint-trajectory-command-broadcaster-dbgsym: 1.0.9-1 → 1.1.9-1

- ros-jazzy-ffw-joystick-controller: 1.0.9-1 → 1.1.9-1

- ros-jazzy-ffw-joystick-controller-dbgsym: 1.0.9-1 → 1.1.9-1

- ros-jazzy-ffw-moveit-config: 1.0.9-1 → 1.1.9-1

- ros-jazzy-ffw-spring-actuator-controller: 1.0.9-1 → 1.1.9-1

- ros-jazzy-ffw-spring-actuator-controller-dbgsym: 1.0.9-1 → 1.1.9-1

- ros-jazzy-ffw-teleop: 1.0.9-1 → 1.1.9-1

- ros-jazzy-filters: 2.2.1-1 → 2.2.2-1

- ros-jazzy-filters-dbgsym: 2.2.1-1 → 2.2.2-1

- ros-jazzy-flir-camera-description: 3.0.2-1 → 3.0.3-1

- ros-jazzy-flir-camera-msgs: 3.0.2-1 → 3.0.3-1

- ros-jazzy-flir-camera-msgs-dbgsym: 3.0.2-1 → 3.0.3-1

- ros-jazzy-force-torque-sensor-broadcaster: 4.27.0-1 → 4.30.1-1

- ros-jazzy-force-torque-sensor-broadcaster-dbgsym: 4.27.0-1 → 4.30.1-1

- ros-jazzy-forward-command-controller: 4.27.0-1 → 4.30.1-1

- ros-jazzy-forward-command-controller-dbgsym: 4.27.0-1 → 4.30.1-1

- ros-jazzy-fuse: 1.1.1-1 → 1.1.3-1

- ros-jazzy-fuse-constraints: 1.1.1-1 → 1.1.3-1

- ros-jazzy-fuse-constraints-dbgsym: 1.1.1-1 → 1.1.3-1

- ros-jazzy-fuse-core: 1.1.1-1 → 1.1.3-1

- ros-jazzy-fuse-core-dbgsym: 1.1.1-1 → 1.1.3-1

- ros-jazzy-fuse-doc: 1.1.1-1 → 1.1.3-1

- ros-jazzy-fuse-graphs: 1.1.1-1 → 1.1.3-1

- ros-jazzy-fuse-graphs-dbgsym: 1.1.1-1 → 1.1.3-1

- ros-jazzy-fuse-loss: 1.1.1-1 → 1.1.3-1

- ros-jazzy-fuse-loss-dbgsym: 1.1.1-1 → 1.1.3-1

- ros-jazzy-fuse-models: 1.1.1-1 → 1.1.3-1

- ros-jazzy-fuse-models-dbgsym: 1.1.1-1 → 1.1.3-1

- ros-jazzy-fuse-msgs: 1.1.1-1 → 1.1.3-1

- ros-jazzy-fuse-msgs-dbgsym: 1.1.1-1 → 1.1.3-1

- ros-jazzy-fuse-optimizers: 1.1.1-1 → 1.1.3-1

- ros-jazzy-fuse-optimizers-dbgsym: 1.1.1-1 → 1.1.3-1

- ros-jazzy-fuse-publishers: 1.1.1-1 → 1.1.3-1

- ros-jazzy-fuse-publishers-dbgsym: 1.1.1-1 → 1.1.3-1

- ros-jazzy-fuse-tutorials: 1.1.1-1 → 1.1.3-1

- ros-jazzy-fuse-tutorials-dbgsym: 1.1.1-1 → 1.1.3-1

- ros-jazzy-fuse-variables: 1.1.1-1 → 1.1.3-1

- ros-jazzy-fuse-variables-dbgsym: 1.1.1-1 → 1.1.3-1

- ros-jazzy-fuse-viz: 1.1.1-1 → 1.1.3-1

- ros-jazzy-fuse-viz-dbgsym: 1.1.1-1 → 1.1.3-1

- ros-jazzy-geometry2: 0.36.12-1 → 0.36.14-1

- ros-jazzy-gpio-controllers: 4.27.0-1 → 4.30.1-1

- ros-jazzy-gpio-controllers-dbgsym: 4.27.0-1 → 4.30.1-1

- ros-jazzy-gps-msgs: 2.1.0-1 → 2.1.1-1

- ros-jazzy-gps-msgs-dbgsym: 2.1.0-1 → 2.1.1-1

- ros-jazzy-gps-sensor-broadcaster: 4.27.0-1 → 4.30.1-1

- ros-jazzy-gps-sensor-broadcaster-dbgsym: 4.27.0-1 → 4.30.1-1

- ros-jazzy-gps-tools: 2.1.0-1 → 2.1.1-1

- ros-jazzy-gps-tools-dbgsym: 2.1.0-1 → 2.1.1-1

- ros-jazzy-gps-umd: 2.1.0-1 → 2.1.1-1

- ros-jazzy-gpsd-client: 2.1.0-1 → 2.1.1-1

- ros-jazzy-gpsd-client-dbgsym: 2.1.0-1 → 2.1.1-1

- ros-jazzy-gripper-controllers: 4.27.0-1 → 4.30.1-1

- ros-jazzy-gripper-controllers-dbgsym: 4.27.0-1 → 4.30.1-1

- ros-jazzy-gz-ros2-control: 1.2.13-1 → 1.2.14-1

- ros-jazzy-gz-ros2-control-dbgsym: 1.2.13-1 → 1.2.14-1

- ros-jazzy-gz-ros2-control-demos: 1.2.13-1 → 1.2.14-1

- ros-jazzy-gz-ros2-control-demos-dbgsym: 1.2.13-1 → 1.2.14-1

- ros-jazzy-hardware-interface: 4.32.0-1 → 4.35.0-1

- ros-jazzy-hardware-interface-dbgsym: 4.32.0-1 → 4.35.0-1

- ros-jazzy-hardware-interface-testing: 4.32.0-1 → 4.35.0-1

- ros-jazzy-hardware-interface-testing-dbgsym: 4.32.0-1 → 4.35.0-1

- ros-jazzy-hebi-cpp-api: 3.12.3-1 → 3.13.0-3

- ros-jazzy-hebi-cpp-api-dbgsym: 3.12.3-1 → 3.13.0-3

- ros-jazzy-image-tools: 0.33.5-1 → 0.33.6-1

- ros-jazzy-image-tools-dbgsym: 0.33.5-1 → 0.33.6-1

- ros-jazzy-image-transport-plugins: 4.0.4-1 → 4.0.5-1

- ros-jazzy-imu-sensor-broadcaster: 4.27.0-1 → 4.30.1-1

- ros-jazzy-imu-sensor-broadcaster-dbgsym: 4.27.0-1 → 4.30.1-1

- ros-jazzy-intra-process-demo: 0.33.5-1 → 0.33.6-1

- ros-jazzy-intra-process-demo-dbgsym: 0.33.5-1 → 0.33.6-1

- ros-jazzy-joint-limits: 4.32.0-1 → 4.35.0-1

- ros-jazzy-joint-limits-dbgsym: 4.32.0-1 → 4.35.0-1

- ros-jazzy-joint-state-broadcaster: 4.27.0-1 → 4.30.1-1

- ros-jazzy-joint-state-broadcaster-dbgsym: 4.27.0-1 → 4.30.1-1

- ros-jazzy-joint-trajectory-controller: 4.27.0-1 → 4.30.1-1

- ros-jazzy-joint-trajectory-controller-dbgsym: 4.27.0-1 → 4.30.1-1

- ros-jazzy-kompass: 0.2.1-1 → 0.3.0-1

- ros-jazzy-kompass-interfaces: 0.2.1-1 → 0.3.0-1

- ros-jazzy-kompass-interfaces-dbgsym: 0.2.1-1 → 0.3.0-1

- ros-jazzy-laser-filters: 2.0.8-1 → 2.0.9-1

- ros-jazzy-laser-filters-dbgsym: 2.0.8-1 → 2.0.9-1

- ros-jazzy-launch: 3.4.5-1 → 3.4.6-1

- ros-jazzy-launch-pytest: 3.4.5-1 → 3.4.6-1

- ros-jazzy-launch-testing: 3.4.5-1 → 3.4.6-1

- ros-jazzy-launch-testing-ament-cmake: 3.4.5-1 → 3.4.6-1

- ros-jazzy-launch-testing-examples: 0.19.5-1 → 0.19.6-1

- ros-jazzy-launch-xml: 3.4.5-1 → 3.4.6-1

- ros-jazzy-launch-yaml: 3.4.5-1 → 3.4.6-1

- ros-jazzy-leo-bringup: 2.3.0-1 → 2.4.0-1

- ros-jazzy-leo-filters: 2.3.0-1 → 2.4.0-1

- ros-jazzy-leo-filters-dbgsym: 2.3.0-1 → 2.4.0-1

- ros-jazzy-leo-fw: 2.3.0-1 → 2.4.0-1

- ros-jazzy-leo-fw-dbgsym: 2.3.0-1 → 2.4.0-1

- ros-jazzy-leo-robot: 2.3.0-1 → 2.4.0-1

- ros-jazzy-libcaer-driver: 1.5.1-1 → 1.5.2-1

- ros-jazzy-libcaer-driver-dbgsym: 1.5.1-1 → 1.5.2-1

- ros-jazzy-liblz4-vendor: 0.26.7-1 → 0.26.9-1

- ros-jazzy-librealsense2: 2.55.1-1 → 2.56.4-1

- ros-jazzy-librealsense2-dbgsym: 2.55.1-1 → 2.56.4-1

- ros-jazzy-lifecycle: 0.33.5-1 → 0.33.6-1

- ros-jazzy-lifecycle-dbgsym: 0.33.5-1 → 0.33.6-1

- ros-jazzy-lifecycle-msgs: 2.0.2-2 → 2.0.3-1

- ros-jazzy-lifecycle-msgs-dbgsym: 2.0.2-2 → 2.0.3-1

- ros-jazzy-lifecycle-py: 0.33.5-1 → 0.33.6-1

- ros-jazzy-logging-demo: 0.33.5-1 → 0.33.6-1

- ros-jazzy-logging-demo-dbgsym: 0.33.5-1 → 0.33.6-1

- ros-jazzy-lttngpy: 8.2.3-1 → 8.2.4-1

- ros-jazzy-mapviz: 2.5.6-1 → 2.5.8-1

- ros-jazzy-mapviz-dbgsym: 2.5.6-1 → 2.5.8-1

- ros-jazzy-mapviz-interfaces: 2.5.6-1 → 2.5.8-1

- ros-jazzy-mapviz-interfaces-dbgsym: 2.5.6-1 → 2.5.8-1

- ros-jazzy-mapviz-plugins: 2.5.6-1 → 2.5.8-1

- ros-jazzy-mapviz-plugins-dbgsym: 2.5.6-1 → 2.5.8-1

- ros-jazzy-mcap-vendor: 0.26.7-1 → 0.26.9-1

- ros-jazzy-mcap-vendor-dbgsym: 0.26.7-1 → 0.26.9-1

- ros-jazzy-mecanum-drive-controller: 4.27.0-1 → 4.30.1-1

- ros-jazzy-mecanum-drive-controller-dbgsym: 4.27.0-1 → 4.30.1-1

- ros-jazzy-message-filters: 4.11.6-1 → 4.11.7-1

- ros-jazzy-message-filters-dbgsym: 4.11.6-1 → 4.11.7-1

- ros-jazzy-mola-test-datasets: 0.4.1-1 → 0.4.2-1

- ros-jazzy-mrpt-map-server: 2.2.1-1 → 2.2.3-1

- ros-jazzy-mrpt-map-server-dbgsym: 2.2.1-1 → 2.2.3-1

- ros-jazzy-mrpt-msgs-bridge: 2.2.1-1 → 2.2.3-1

- ros-jazzy-mrpt-nav-interfaces: 2.2.1-1 → 2.2.3-1

- ros-jazzy-mrpt-nav-interfaces-dbgsym: 2.2.1-1 → 2.2.3-1

- ros-jazzy-mrpt-navigation: 2.2.1-1 → 2.2.3-1

- ros-jazzy-mrpt-path-planning: 0.2.1-1 → 0.2.2-1

- ros-jazzy-mrpt-path-planning-dbgsym: 0.2.1-1 → 0.2.2-1

- ros-jazzy-mrpt-pf-localization: 2.2.1-1 → 2.2.3-1

- ros-jazzy-mrpt-pf-localization-dbgsym: 2.2.1-1 → 2.2.3-1

- ros-jazzy-mrpt-pointcloud-pipeline: 2.2.1-1 → 2.2.3-1

- ros-jazzy-mrpt-pointcloud-pipeline-dbgsym: 2.2.1-1 → 2.2.3-1

- ros-jazzy-mrpt-rawlog: 2.2.1-1 → 2.2.3-1

- ros-jazzy-mrpt-rawlog-dbgsym: 2.2.1-1 → 2.2.3-1

- ros-jazzy-mrpt-reactivenav2d: 2.2.1-1 → 2.2.3-1

- ros-jazzy-mrpt-reactivenav2d-dbgsym: 2.2.1-1 → 2.2.3-1

- ros-jazzy-mrpt-tps-astar-planner: 2.2.1-1 → 2.2.3-1

- ros-jazzy-mrpt-tps-astar-planner-dbgsym: 2.2.1-1 → 2.2.3-1

- ros-jazzy-mrpt-tutorials: 2.2.1-1 → 2.2.3-1

- ros-jazzy-multires-image: 2.5.6-1 → 2.5.8-1

- ros-jazzy-multires-image-dbgsym: 2.5.6-1 → 2.5.8-1

- ros-jazzy-mvsim: 0.13.3-1 → 0.14.0-1

- ros-jazzy-mvsim-dbgsym: 0.13.3-1 → 0.14.0-1

- ros-jazzy-om-gravity-compensation-controller: 4.0.1-1 → 4.0.6-1

- ros-jazzy-om-gravity-compensation-controller-dbgsym: 4.0.1-1 → 4.0.6-1

- ros-jazzy-om-joint-trajectory-command-broadcaster: 4.0.1-1 → 4.0.6-1

- ros-jazzy-om-joint-trajectory-command-broadcaster-dbgsym: 4.0.1-1 → 4.0.6-1

- ros-jazzy-om-spring-actuator-controller: 4.0.1-1 → 4.0.6-1

- ros-jazzy-om-spring-actuator-controller-dbgsym: 4.0.1-1 → 4.0.6-1

- ros-jazzy-open-manipulator: 4.0.1-1 → 4.0.6-1

- ros-jazzy-open-manipulator-bringup: 4.0.1-1 → 4.0.6-1

- ros-jazzy-open-manipulator-collision: 4.0.1-1 → 4.0.6-1

- ros-jazzy-open-manipulator-collision-dbgsym: 4.0.1-1 → 4.0.6-1

- ros-jazzy-open-manipulator-description: 4.0.1-1 → 4.0.6-1

- ros-jazzy-open-manipulator-gui: 4.0.1-1 → 4.0.6-1

- ros-jazzy-open-manipulator-gui-dbgsym: 4.0.1-1 → 4.0.6-1

- ros-jazzy-open-manipulator-moveit-config: 4.0.1-1 → 4.0.6-1

- ros-jazzy-open-manipulator-playground: 4.0.1-1 → 4.0.6-1

- ros-jazzy-open-manipulator-playground-dbgsym: 4.0.1-1 → 4.0.6-1

- ros-jazzy-open-manipulator-teleop: 4.0.1-1 → 4.0.6-1

- ros-jazzy-osrf-pycommon: 2.1.6-1 → 2.1.7-1

- ros-jazzy-pal-statistics: 2.6.2-1 → 2.6.4-1

- ros-jazzy-pal-statistics-dbgsym: 2.6.2-1 → 2.6.4-1

- ros-jazzy-pal-statistics-msgs: 2.6.2-1 → 2.6.4-1

- ros-jazzy-pal-statistics-msgs-dbgsym: 2.6.2-1 → 2.6.4-1

- ros-jazzy-parallel-gripper-controller: 4.27.0-1 → 4.30.1-1

- ros-jazzy-parallel-gripper-controller-dbgsym: 4.27.0-1 → 4.30.1-1

- ros-jazzy-pendulum-control: 0.33.5-1 → 0.33.6-1

- ros-jazzy-pendulum-control-dbgsym: 0.33.5-1 → 0.33.6-1

- ros-jazzy-pendulum-msgs: 0.33.5-1 → 0.33.6-1

- ros-jazzy-pendulum-msgs-dbgsym: 0.33.5-1 → 0.33.6-1

- ros-jazzy-pid-controller: 4.27.0-1 → 4.30.1-1

- ros-jazzy-pid-controller-dbgsym: 4.27.0-1 → 4.30.1-1

- ros-jazzy-plotjuggler: 3.9.2-1 → 3.10.11-1

- ros-jazzy-plotjuggler-dbgsym: 3.9.2-1 → 3.10.11-1

- ros-jazzy-plotjuggler-ros: 2.1.2-2 → 2.3.0-1

- ros-jazzy-plotjuggler-ros-dbgsym: 2.1.2-2 → 2.3.0-1

- ros-jazzy-pose-broadcaster: 4.27.0-1 → 4.30.1-1

- ros-jazzy-pose-broadcaster-dbgsym: 4.27.0-1 → 4.30.1-1

- ros-jazzy-pose-cov-ops: 0.3.13-1 → 0.4.0-1

- ros-jazzy-pose-cov-ops-dbgsym: 0.3.13-1 → 0.4.0-1

- ros-jazzy-position-controllers: 4.27.0-1 → 4.30.1-1

- ros-jazzy-position-controllers-dbgsym: 4.27.0-1 → 4.30.1-1

- ros-jazzy-quality-of-service-demo-cpp: 0.33.5-1 → 0.33.6-1

- ros-jazzy-quality-of-service-demo-cpp-dbgsym: 0.33.5-1 → 0.33.6-1

- ros-jazzy-quality-of-service-demo-py: 0.33.5-1 → 0.33.6-1

- ros-jazzy-range-sensor-broadcaster: 4.27.0-1 → 4.30.1-1

- ros-jazzy-range-sensor-broadcaster-dbgsym: 4.27.0-1 → 4.30.1-1

- ros-jazzy-rc-genicam-api: 2.6.5-2 → 2.8.1-1

- ros-jazzy-rc-genicam-api-dbgsym: 2.6.5-2 → 2.8.1-1

- ros-jazzy-rc-genicam-driver: 0.3.1-1 → 0.3.2-1

- ros-jazzy-rc-genicam-driver-dbgsym: 0.3.1-1 → 0.3.2-1

- ros-jazzy-rc-reason-clients: 0.4.0-2 → 0.5.0-1

- ros-jazzy-rc-reason-msgs: 0.4.0-2 → 0.5.0-1

- ros-jazzy-rc-reason-msgs-dbgsym: 0.4.0-2 → 0.5.0-1

- ros-jazzy-rcl-interfaces: 2.0.2-2 → 2.0.3-1

- ros-jazzy-rcl-interfaces-dbgsym: 2.0.2-2 → 2.0.3-1

- ros-jazzy-rclcpp: 28.1.10-1 → 28.1.11-1

- ros-jazzy-rclcpp-action: 28.1.10-1 → 28.1.11-1

- ros-jazzy-rclcpp-action-dbgsym: 28.1.10-1 → 28.1.11-1

- ros-jazzy-rclcpp-components: 28.1.10-1 → 28.1.11-1

- ros-jazzy-rclcpp-components-dbgsym: 28.1.10-1 → 28.1.11-1

- ros-jazzy-rclcpp-dbgsym: 28.1.10-1 → 28.1.11-1

- ros-jazzy-rclcpp-lifecycle: 28.1.10-1 → 28.1.11-1

- ros-jazzy-rclcpp-lifecycle-dbgsym: 28.1.10-1 → 28.1.11-1

- ros-jazzy-rclpy: 7.1.4-1 → 7.1.5-1

- ros-jazzy-rcutils: 6.7.2-1 → 6.7.3-1

- ros-jazzy-rcutils-dbgsym: 6.7.2-1 → 6.7.3-1

- ros-jazzy-realsense2-camera: 4.55.1-3 → 4.56.4-1

- ros-jazzy-realsense2-camera-dbgsym: 4.55.1-3 → 4.56.4-1

- ros-jazzy-realsense2-camera-msgs: 4.55.1-3 → 4.56.4-1

- ros-jazzy-realsense2-camera-msgs-dbgsym: 4.55.1-3 → 4.56.4-1

- ros-jazzy-realsense2-description: 4.55.1-3 → 4.56.4-1

- ros-jazzy-rmf-building-sim-gz-plugins: 2.3.2-1 → 2.3.3-1

- ros-jazzy-rmf-building-sim-gz-plugins-dbgsym: 2.3.2-1 → 2.3.3-1

- ros-jazzy-rmf-robot-sim-common: 2.3.2-1 → 2.3.3-1

- ros-jazzy-rmf-robot-sim-common-dbgsym: 2.3.2-1 → 2.3.3-1

- ros-jazzy-rmf-robot-sim-gz-plugins: 2.3.2-1 → 2.3.3-1

- ros-jazzy-rmf-robot-sim-gz-plugins-dbgsym: 2.3.2-1 → 2.3.3-1

- ros-jazzy-rmw-fastrtps-cpp: 8.4.2-1 → 8.4.3-1

- ros-jazzy-rmw-fastrtps-cpp-dbgsym: 8.4.2-1 → 8.4.3-1

- ros-jazzy-rmw-fastrtps-dynamic-cpp: 8.4.2-1 → 8.4.3-1

- ros-jazzy-rmw-fastrtps-dynamic-cpp-dbgsym: 8.4.2-1 → 8.4.3-1

- ros-jazzy-rmw-fastrtps-shared-cpp: 8.4.2-1 → 8.4.3-1

- ros-jazzy-rmw-fastrtps-shared-cpp-dbgsym: 8.4.2-1 → 8.4.3-1

- ros-jazzy-rmw-implementation: 2.15.5-1 → 2.15.6-1

- ros-jazzy-rmw-implementation-dbgsym: 2.15.5-1 → 2.15.6-1

- ros-jazzy-ros-gz: 1.0.15-1 → 1.0.16-1

- ros-jazzy-ros-gz-bridge: 1.0.15-1 → 1.0.16-1

- ros-jazzy-ros-gz-bridge-dbgsym: 1.0.15-1 → 1.0.16-1

- ros-jazzy-ros-gz-image: 1.0.15-1 → 1.0.16-1

- ros-jazzy-ros-gz-image-dbgsym: 1.0.15-1 → 1.0.16-1

- ros-jazzy-ros-gz-interfaces: 1.0.15-1 → 1.0.16-1

- ros-jazzy-ros-gz-interfaces-dbgsym: 1.0.15-1 → 1.0.16-1

- ros-jazzy-ros-gz-sim: 1.0.15-1 → 1.0.16-1

- ros-jazzy-ros-gz-sim-dbgsym: 1.0.15-1 → 1.0.16-1

- ros-jazzy-ros-gz-sim-demos: 1.0.15-1 → 1.0.16-1

- ros-jazzy-ros2-control: 4.32.0-1 → 4.35.0-1

- ros-jazzy-ros2-control-test-assets: 4.32.0-1 → 4.35.0-1

- ros-jazzy-ros2-controllers: 4.27.0-1 → 4.30.1-1

- ros-jazzy-ros2-controllers-test-nodes: 4.27.0-1 → 4.30.1-1

- ros-jazzy-ros2action: 0.32.4-1 → 0.32.5-1

- ros-jazzy-ros2bag: 0.26.7-1 → 0.26.9-1

- ros-jazzy-ros2cli: 0.32.4-1 → 0.32.5-1

- ros-jazzy-ros2cli-test-interfaces: 0.32.4-1 → 0.32.5-1

- ros-jazzy-ros2cli-test-interfaces-dbgsym: 0.32.4-1 → 0.32.5-1

- ros-jazzy-ros2component: 0.32.4-1 → 0.32.5-1

- ros-jazzy-ros2controlcli: 4.32.0-1 → 4.35.0-1

- ros-jazzy-ros2doctor: 0.32.4-1 → 0.32.5-1

- ros-jazzy-ros2interface: 0.32.4-1 → 0.32.5-1

- ros-jazzy-ros2lifecycle: 0.32.4-1 → 0.32.5-1

- ros-jazzy-ros2lifecycle-test-fixtures: 0.32.4-1 → 0.32.5-1

- ros-jazzy-ros2lifecycle-test-fixtures-dbgsym: 0.32.4-1 → 0.32.5-1

- ros-jazzy-ros2multicast: 0.32.4-1 → 0.32.5-1

- ros-jazzy-ros2node: 0.32.4-1 → 0.32.5-1

- ros-jazzy-ros2param: 0.32.4-1 → 0.32.5-1

- ros-jazzy-ros2pkg: 0.32.4-1 → 0.32.5-1

- ros-jazzy-ros2run: 0.32.4-1 → 0.32.5-1

- ros-jazzy-ros2service: 0.32.4-1 → 0.32.5-1

- ros-jazzy-ros2topic: 0.32.4-1 → 0.32.5-1

- ros-jazzy-ros2trace: 8.2.3-1 → 8.2.4-1

- ros-jazzy-rosbag2: 0.26.7-1 → 0.26.9-1

- ros-jazzy-rosbag2-compression: 0.26.7-1 → 0.26.9-1

- ros-jazzy-rosbag2-compression-dbgsym: 0.26.7-1 → 0.26.9-1

- ros-jazzy-rosbag2-compression-zstd: 0.26.7-1 → 0.26.9-1

- ros-jazzy-rosbag2-compression-zstd-dbgsym: 0.26.7-1 → 0.26.9-1

- ros-jazzy-rosbag2-cpp: 0.26.7-1 → 0.26.9-1

- ros-jazzy-rosbag2-cpp-dbgsym: 0.26.7-1 → 0.26.9-1

- ros-jazzy-rosbag2-examples-cpp: 0.26.7-1 → 0.26.9-1

- ros-jazzy-rosbag2-examples-cpp-dbgsym: 0.26.7-1 → 0.26.9-1

- ros-jazzy-rosbag2-examples-py: 0.26.7-1 → 0.26.9-1

- ros-jazzy-rosbag2-interfaces: 0.26.7-1 → 0.26.9-1

- ros-jazzy-rosbag2-interfaces-dbgsym: 0.26.7-1 → 0.26.9-1

- ros-jazzy-rosbag2-performance-benchmarking: 0.26.7-1 → 0.26.9-1

- ros-jazzy-rosbag2-performance-benchmarking-msgs: 0.26.7-1 → 0.26.9-1

- ros-jazzy-rosbag2-py: 0.26.7-1 → 0.26.9-1

- ros-jazzy-rosbag2-storage: 0.26.7-1 → 0.26.9-1

- ros-jazzy-rosbag2-storage-dbgsym: 0.26.7-1 → 0.26.9-1

- ros-jazzy-rosbag2-storage-default-plugins: 0.26.7-1 → 0.26.9-1

- ros-jazzy-rosbag2-storage-mcap: 0.26.7-1 → 0.26.9-1

- ros-jazzy-rosbag2-storage-mcap-dbgsym: 0.26.7-1 → 0.26.9-1

- ros-jazzy-rosbag2-storage-sqlite3: 0.26.7-1 → 0.26.9-1

- ros-jazzy-rosbag2-storage-sqlite3-dbgsym: 0.26.7-1 → 0.26.9-1

- ros-jazzy-rosbag2-test-common: 0.26.7-1 → 0.26.9-1

- ros-jazzy-rosbag2-test-msgdefs: 0.26.7-1 → 0.26.9-1

- ros-jazzy-rosbag2-test-msgdefs-dbgsym: 0.26.7-1 → 0.26.9-1

- ros-jazzy-rosbag2-tests: 0.26.7-1 → 0.26.9-1

- ros-jazzy-rosbag2-transport: 0.26.7-1 → 0.26.9-1

- ros-jazzy-rosbag2-transport-dbgsym: 0.26.7-1 → 0.26.9-1

- ros-jazzy-rosgraph-msgs: 2.0.2-2 → 2.0.3-1

- ros-jazzy-rosgraph-msgs-dbgsym: 2.0.2-2 → 2.0.3-1

- ros-jazzy-rosidl-adapter: 4.6.5-1 → 4.6.6-1

- ros-jazzy-rosidl-cli: 4.6.5-1 → 4.6.6-1

- ros-jazzy-rosidl-cmake: 4.6.5-1 → 4.6.6-1

- ros-jazzy-rosidl-generator-c: 4.6.5-1 → 4.6.6-1

- ros-jazzy-rosidl-generator-cpp: 4.6.5-1 → 4.6.6-1

- ros-jazzy-rosidl-generator-type-description: 4.6.5-1 → 4.6.6-1

- ros-jazzy-rosidl-parser: 4.6.5-1 → 4.6.6-1

- ros-jazzy-rosidl-pycommon: 4.6.5-1 → 4.6.6-1

- ros-jazzy-rosidl-runtime-c: 4.6.5-1 → 4.6.6-1

- ros-jazzy-rosidl-runtime-c-dbgsym: 4.6.5-1 → 4.6.6-1

- ros-jazzy-rosidl-runtime-cpp: 4.6.5-1 → 4.6.6-1

- ros-jazzy-rosidl-typesupport-fastrtps-c: 3.6.1-1 → 3.6.2-1

- ros-jazzy-rosidl-typesupport-fastrtps-c-dbgsym: 3.6.1-1 → 3.6.2-1

- ros-jazzy-rosidl-typesupport-fastrtps-cpp: 3.6.1-1 → 3.6.2-1

- ros-jazzy-rosidl-typesupport-fastrtps-cpp-dbgsym: 3.6.1-1 → 3.6.2-1

- ros-jazzy-rosidl-typesupport-interface: 4.6.5-1 → 4.6.6-1

- ros-jazzy-rosidl-typesupport-introspection-c: 4.6.5-1 → 4.6.6-1

- ros-jazzy-rosidl-typesupport-introspection-c-dbgsym: 4.6.5-1 → 4.6.6-1

- ros-jazzy-rosidl-typesupport-introspection-cpp: 4.6.5-1 → 4.6.6-1

- ros-jazzy-rosidl-typesupport-introspection-cpp-dbgsym: 4.6.5-1 → 4.6.6-1

- ros-jazzy-rqt-bag: 1.5.4-1 → 1.5.5-1

- ros-jazzy-rqt-bag-plugins: 1.5.4-1 → 1.5.5-1

- ros-jazzy-rqt-controller-manager: 4.32.0-1 → 4.35.0-1

- ros-jazzy-rqt-graph: 1.5.4-1 → 1.5.5-1

- ros-jazzy-rqt-joint-trajectory-controller: 4.27.0-1 → 4.30.1-1

- ros-jazzy-rqt-plot: 1.4.3-1 → 1.4.4-1

- ros-jazzy-rqt-robot-steering: 1.0.1-2 → 2.0.0-1

- ros-jazzy-rtabmap: 0.22.0-1 → 0.22.1-1

- ros-jazzy-rtabmap-conversions: 0.22.0-1 → 0.22.1-1

- ros-jazzy-rtabmap-conversions-dbgsym: 0.22.0-1 → 0.22.1-1

- ros-jazzy-rtabmap-dbgsym: 0.22.0-1 → 0.22.1-1

- ros-jazzy-rtabmap-demos: 0.22.0-1 → 0.22.1-1

- ros-jazzy-rtabmap-examples: 0.22.0-1 → 0.22.1-1

- ros-jazzy-rtabmap-launch: 0.22.0-1 → 0.22.1-1

- ros-jazzy-rtabmap-msgs: 0.22.0-1 → 0.22.1-1

- ros-jazzy-rtabmap-msgs-dbgsym: 0.22.0-1 → 0.22.1-1

- ros-jazzy-rtabmap-odom: 0.22.0-1 → 0.22.1-1

- ros-jazzy-rtabmap-odom-dbgsym: 0.22.0-1 → 0.22.1-1

- ros-jazzy-rtabmap-python: 0.22.0-1 → 0.22.1-1

- ros-jazzy-rtabmap-ros: 0.22.0-1 → 0.22.1-1

- ros-jazzy-rtabmap-rviz-plugins: 0.22.0-1 → 0.22.1-1

- ros-jazzy-rtabmap-rviz-plugins-dbgsym: 0.22.0-1 → 0.22.1-1

- ros-jazzy-rtabmap-slam: 0.22.0-1 → 0.22.1-1

- ros-jazzy-rtabmap-slam-dbgsym: 0.22.0-1 → 0.22.1-1

- ros-jazzy-rtabmap-sync: 0.22.0-1 → 0.22.1-1

- ros-jazzy-rtabmap-sync-dbgsym: 0.22.0-1 → 0.22.1-1

- ros-jazzy-rtabmap-util: 0.22.0-1 → 0.22.1-1

- ros-jazzy-rtabmap-util-dbgsym: 0.22.0-1 → 0.22.1-1

- ros-jazzy-rtabmap-viz: 0.22.0-1 → 0.22.1-1

- ros-jazzy-rtabmap-viz-dbgsym: 0.22.0-1 → 0.22.1-1

- ros-jazzy-rviz-assimp-vendor: 14.1.12-1 → 14.1.13-1

- ros-jazzy-rviz-common: 14.1.12-1 → 14.1.13-1

- ros-jazzy-rviz-common-dbgsym: 14.1.12-1 → 14.1.13-1

- ros-jazzy-rviz-default-plugins: 14.1.12-1 → 14.1.13-1

- ros-jazzy-rviz-default-plugins-dbgsym: 14.1.12-1 → 14.1.13-1

- ros-jazzy-rviz-ogre-vendor: 14.1.12-1 → 14.1.13-1

- ros-jazzy-rviz-ogre-vendor-dbgsym: 14.1.12-1 → 14.1.13-1

- ros-jazzy-rviz-rendering: 14.1.12-1 → 14.1.13-1

- ros-jazzy-rviz-rendering-dbgsym: 14.1.12-1 → 14.1.13-1

- ros-jazzy-rviz-rendering-tests: 14.1.12-1 → 14.1.13-1

- ros-jazzy-rviz-visual-testing-framework: 14.1.12-1 → 14.1.13-1

- ros-jazzy-rviz2: 14.1.12-1 → 14.1.13-1

- ros-jazzy-rviz2-dbgsym: 14.1.12-1 → 14.1.13-1

- ros-jazzy-sdformat-vendor: 0.0.9-1 → 0.0.10-1

- ros-jazzy-sdformat-vendor-dbgsym: 0.0.9-1 → 0.0.10-1

- ros-jazzy-service-msgs: 2.0.2-2 → 2.0.3-1

- ros-jazzy-service-msgs-dbgsym: 2.0.2-2 → 2.0.3-1

- ros-jazzy-shared-queues-vendor: 0.26.7-1 → 0.26.9-1

- ros-jazzy-spinnaker-camera-driver: 3.0.2-1 → 3.0.3-1

- ros-jazzy-spinnaker-camera-driver-dbgsym: 3.0.2-1 → 3.0.3-1

- ros-jazzy-spinnaker-synchronized-camera-driver: 3.0.2-1 → 3.0.3-1

- ros-jazzy-spinnaker-synchronized-camera-driver-dbgsym: 3.0.2-1 → 3.0.3-1

- ros-jazzy-sqlite3-vendor: 0.26.7-1 → 0.26.9-1

- ros-jazzy-sros2: 0.13.3-1 → 0.13.4-1

- ros-jazzy-sros2-cmake: 0.13.3-1 → 0.13.4-1

- ros-jazzy-statistics-msgs: 2.0.2-2 → 2.0.3-1

- ros-jazzy-statistics-msgs-dbgsym: 2.0.2-2 → 2.0.3-1

- ros-jazzy-steering-controllers-library: 4.27.0-1 → 4.30.1-1

- ros-jazzy-steering-controllers-library-dbgsym: 4.27.0-1 → 4.30.1-1

- ros-jazzy-swri-cli-tools: 3.8.4-1 → 3.8.7-1

- ros-jazzy-swri-console-util: 3.8.4-1 → 3.8.7-1

- ros-jazzy-swri-console-util-dbgsym: 3.8.4-1 → 3.8.7-1

- ros-jazzy-swri-dbw-interface: 3.8.4-1 → 3.8.7-1

- ros-jazzy-swri-geometry-util: 3.8.4-1 → 3.8.7-1

- ros-jazzy-swri-geometry-util-dbgsym: 3.8.4-1 → 3.8.7-1

- ros-jazzy-swri-image-util: 3.8.4-1 → 3.8.7-1

- ros-jazzy-swri-image-util-dbgsym: 3.8.4-1 → 3.8.7-1

- ros-jazzy-swri-math-util: 3.8.4-1 → 3.8.7-1

- ros-jazzy-swri-math-util-dbgsym: 3.8.4-1 → 3.8.7-1

- ros-jazzy-swri-opencv-util: 3.8.4-1 → 3.8.7-1

- ros-jazzy-swri-opencv-util-dbgsym: 3.8.4-1 → 3.8.7-1

- ros-jazzy-swri-roscpp: 3.8.4-1 → 3.8.7-1

- ros-jazzy-swri-roscpp-dbgsym: 3.8.4-1 → 3.8.7-1

- ros-jazzy-swri-route-util: 3.8.4-1 → 3.8.7-1

- ros-jazzy-swri-route-util-dbgsym: 3.8.4-1 → 3.8.7-1

- ros-jazzy-swri-serial-util: 3.8.4-1 → 3.8.7-1

- ros-jazzy-swri-serial-util-dbgsym: 3.8.4-1 → 3.8.7-1

- ros-jazzy-swri-transform-util: 3.8.4-1 → 3.8.7-1

- ros-jazzy-swri-transform-util-dbgsym: 3.8.4-1 → 3.8.7-1

- ros-jazzy-teleop-twist-joy: 2.6.3-1 → 2.6.5-1

- ros-jazzy-teleop-twist-joy-dbgsym: 2.6.3-1 → 2.6.5-1

- ros-jazzy-test-msgs: 2.0.2-2 → 2.0.3-1

- ros-jazzy-test-msgs-dbgsym: 2.0.2-2 → 2.0.3-1

- ros-jazzy-tf2: 0.36.12-1 → 0.36.14-1

- ros-jazzy-tf2-2d: 1.0.1-4 → 1.4.0-1

- ros-jazzy-tf2-bullet: 0.36.12-1 → 0.36.14-1

- ros-jazzy-tf2-dbgsym: 0.36.12-1 → 0.36.14-1

- ros-jazzy-tf2-eigen: 0.36.12-1 → 0.36.14-1

- ros-jazzy-tf2-eigen-kdl: 0.36.12-1 → 0.36.14-1

- ros-jazzy-tf2-eigen-kdl-dbgsym: 0.36.12-1 → 0.36.14-1

- ros-jazzy-tf2-geometry-msgs: 0.36.12-1 → 0.36.14-1

- ros-jazzy-tf2-kdl: 0.36.12-1 → 0.36.14-1

- ros-jazzy-tf2-msgs: 0.36.12-1 → 0.36.14-1

- ros-jazzy-tf2-msgs-dbgsym: 0.36.12-1 → 0.36.14-1

- ros-jazzy-tf2-py: 0.36.12-1 → 0.36.14-1

- ros-jazzy-tf2-py-dbgsym: 0.36.12-1 → 0.36.14-1

- ros-jazzy-tf2-ros: 0.36.12-1 → 0.36.14-1

- ros-jazzy-tf2-ros-dbgsym: 0.36.12-1 → 0.36.14-1

- ros-jazzy-tf2-ros-py: 0.36.12-1 → 0.36.14-1

- ros-jazzy-tf2-sensor-msgs: 0.36.12-1 → 0.36.14-1

- ros-jazzy-tf2-tools: 0.36.12-1 → 0.36.14-1

- ros-jazzy-theora-image-transport: 4.0.4-1 → 4.0.5-1

- ros-jazzy-theora-image-transport-dbgsym: 4.0.4-1 → 4.0.5-1

- ros-jazzy-tile-map: 2.5.6-1 → 2.5.8-1

- ros-jazzy-tile-map-dbgsym: 2.5.6-1 → 2.5.8-1

- ros-jazzy-topic-based-ros2-control: 0.2.0-3 → 0.3.0-4

- ros-jazzy-topic-based-ros2-control-dbgsym: 0.2.0-3 → 0.3.0-4

- ros-jazzy-topic-monitor: 0.33.5-1 → 0.33.6-1

- ros-jazzy-topic-statistics-demo: 0.33.5-1 → 0.33.6-1

- ros-jazzy-topic-statistics-demo-dbgsym: 0.33.5-1 → 0.33.6-1

- ros-jazzy-trac-ik: 2.0.1-1 → 2.0.2-1

- ros-jazzy-trac-ik-kinematics-plugin: 2.0.1-1 → 2.0.2-1

- ros-jazzy-trac-ik-kinematics-plugin-dbgsym: 2.0.1-1 → 2.0.2-1

- ros-jazzy-trac-ik-lib: 2.0.1-1 → 2.0.2-1

- ros-jazzy-trac-ik-lib-dbgsym: 2.0.1-1 → 2.0.2-1

- ros-jazzy-tracetools: 8.2.3-1 → 8.2.4-1

- ros-jazzy-tracetools-dbgsym: 8.2.3-1 → 8.2.4-1

- ros-jazzy-tracetools-launch: 8.2.3-1 → 8.2.4-1

- ros-jazzy-tracetools-read: 8.2.3-1 → 8.2.4-1

- ros-jazzy-tracetools-test: 8.2.3-1 → 8.2.4-1

- ros-jazzy-tracetools-trace: 8.2.3-1 → 8.2.4-1

- ros-jazzy-transmission-interface: 4.32.0-1 → 4.35.0-1

- ros-jazzy-transmission-interface-dbgsym: 4.32.0-1 → 4.35.0-1

- ros-jazzy-tricycle-controller: 4.27.0-1 → 4.30.1-1

- ros-jazzy-tricycle-controller-dbgsym: 4.27.0-1 → 4.30.1-1

- ros-jazzy-tricycle-steering-controller: 4.27.0-1 → 4.30.1-1

- ros-jazzy-tricycle-steering-controller-dbgsym: 4.27.0-1 → 4.30.1-1

- ros-jazzy-turtle-nest: 1.0.2-1 → 1.1.0-1

- ros-jazzy-turtle-nest-dbgsym: 1.0.2-1 → 1.1.0-1

- ros-jazzy-turtlebot3: 2.3.1-1 → 2.3.3-1

- ros-jazzy-turtlebot3-bringup: 2.3.1-1 → 2.3.3-1

- ros-jazzy-turtlebot3-cartographer: 2.3.1-1 → 2.3.3-1

- ros-jazzy-turtlebot3-description: 2.3.1-1 → 2.3.3-1

- ros-jazzy-turtlebot3-example: 2.3.1-1 → 2.3.3-1

- ros-jazzy-turtlebot3-fake-node: 2.3.5-1 → 2.3.7-1

- ros-jazzy-turtlebot3-fake-node-dbgsym: 2.3.5-1 → 2.3.7-1

- ros-jazzy-turtlebot3-gazebo: 2.3.5-1 → 2.3.7-1

- ros-jazzy-turtlebot3-gazebo-dbgsym: 2.3.5-1 → 2.3.7-1

- ros-jazzy-turtlebot3-navigation2: 2.3.1-1 → 2.3.3-1

- ros-jazzy-turtlebot3-node: 2.3.1-1 → 2.3.3-1

- ros-jazzy-turtlebot3-node-dbgsym: 2.3.1-1 → 2.3.3-1

- ros-jazzy-turtlebot3-simulations: 2.3.5-1 → 2.3.7-1

- ros-jazzy-turtlebot3-teleop: 2.3.1-1 → 2.3.3-1

- ros-jazzy-type-description-interfaces: 2.0.2-2 → 2.0.3-1

- ros-jazzy-type-description-interfaces-dbgsym: 2.0.2-2 → 2.0.3-1

- ros-jazzy-ur-client-library: 2.1.0-1 → 2.2.0-1

- ros-jazzy-ur-client-library-dbgsym: 2.1.0-1 → 2.2.0-1

- ros-jazzy-ur-msgs: 2.2.0-1 → 2.3.0-1

- ros-jazzy-ur-msgs-dbgsym: 2.2.0-1 → 2.3.0-1

- ros-jazzy-velocity-controllers: 4.27.0-1 → 4.30.1-1

- ros-jazzy-velocity-controllers-dbgsym: 4.27.0-1 → 4.30.1-1

- ros-jazzy-yasmin: 3.2.0-1 → 3.3.0-1

- ros-jazzy-yasmin-demos: 3.2.0-1 → 3.3.0-1

- ros-jazzy-yasmin-demos-dbgsym: 3.2.0-1 → 3.3.0-1

- ros-jazzy-yasmin-msgs: 3.2.0-1 → 3.3.0-1

- ros-jazzy-yasmin-msgs-dbgsym: 3.2.0-1 → 3.3.0-1

- ros-jazzy-yasmin-ros: 3.2.0-1 → 3.3.0-1

- ros-jazzy-yasmin-viewer: 3.2.0-1 → 3.3.0-1

- ros-jazzy-zed-msgs: 5.0.1-1 → 5.0.1-2

- ros-jazzy-zed-msgs-dbgsym: 5.0.1-1 → 5.0.1-2

- ros-jazzy-zstd-image-transport: 4.0.4-1 → 4.0.5-1

- ros-jazzy-zstd-image-transport-dbgsym: 4.0.4-1 → 4.0.5-1

- ros-jazzy-zstd-vendor: 0.26.7-1 → 0.26.9-1

Removed Packages [1]:

- ros-jazzy-test-ros-gz-bridge

Thanks to all ROS maintainers who make packages available to the ROS community. The above list of packages was made possible by the work of the following maintainers:

- Aarav Gupta

- Addisu Z. Taddese

- Aditya Pande

- Alejandro Hernandez Cordero

- Anthony Cavin (@anthonycvn)

- Anthony Welte

- Audrow Nash

- Automatika Robotics

- Bence Magyar

- Bernd Pfrommer

- Brandon Ong

- Chris Bollinger

- Chris Iverach-Brereton

- Chris Lalancette

- Christophe Bedard

- Davide Faconti

- Dirk Thomas

- Emerson Knapp

- Enrique Fernandez

- Felix Exner

- Felix Ruess

- Fictionlab

- Foxglove

- G.A. vd. Hoorn

- Geoffrey Biggs

- Hilary Luo

- Husarion

- Ivan Paunovic

- Jacob Perron

- Jafar Uruc

- Janne Karttunen

- Jean-Pierre Busch

- Jon Binney

- Jordan Palacios

- Jose Luis Blanco-Claraco

- Jose-Luis Blanco-Claraco

- José Luis Blanco-Claraco

- Kenji Brameld

- Kevin Hallenbeck

- LibRealSense ROS Team

- Luca Della Vedova

- Luis Camero

- Markus Bader

- Marq Rasmussen

- Mathieu Labbe

- Max Krogius

- Michael Orlov

- Miguel Company

- Miguel Ángel González Santamarta

- Pyo

- ROS Security Working Group

- ROS Tooling Working Group

- Roni Kreinin

- STEREOLABS

- Severn Lortie

- Shane Loretz

- Southwest Research Institute

- Stephen Williams

- TRACLabs Robotics

- Tarik Viehmann

- Timo Röhling

- Tom Moore

- Tully Foote

- Victor López

- William Woodall

- Wolfgang Hönig

- geoff

- miguel

- pyo

- ruess

4 posts - 4 participants

ROS Discourse General: Continuous Trajectory Recording and Replay for AgileX PIPER Robotic Arm

Hi ROS Community,

I’m excited to share details about implementing continuous trajectory recording and replay for the AgileX PIPER robotic arm. This solution leverages time-series data to accurately replicate complex motion trajectories, with full code, usage guides, and step-by-step demos included. It’s designed to support teaching demonstrations and automated operations, and I hope it brings value to your projects.

Abstract

This article implements continuous trajectory recording and replay on the AgileX PIPER robotic arm. By recording and replaying time-series joint data, it accurately reproduces complex motion trajectories of the arm. The sections below analyze the code implementation and provide the complete code, usage guidelines, and step-by-step demonstrations.

Keywords

Trajectory control; Continuous motion; Time series; Motion reproduction; AgileX PIPER

Code Repository

GitHub link: https://github.com/agilexrobotics/Agilex-College.git

Function Demonstration

From Code → to Motion 🤖 The Magic of Robotic Arm

1. Preparation Before Use

1.1. Preparation Work

- Clear the workspace of obstacles so that the robotic arm has sufficient room to move.

- Confirm that the power supply of the robotic arm is normal and that all indicator lights show a normal state.

- Make sure the lighting is good enough to observe the position and state of the robotic arm.

- If a gripper is fitted, check that the gripper actions are normal.

- Make sure the ground is stable so that vibration does not affect the recording accuracy.

- Verify that the teach button functions normally.

1.2. Environment Configuration

- Operating system: Ubuntu (Ubuntu 18.04 or higher is recommended).

- Python environment: Python 3.6 or higher.

- Git code management tool: Used to clone remote code repositories.

sudo apt install git

- Pip package manager: Used to install Python dependency packages.

sudo apt install python3-pip

- Install CAN tools.

sudo apt install can-utils ethtool

- Install the official Python SDK package. The 1_0_0_beta branch is the version that includes the API.

git clone -b 1_0_0_beta https://github.com/agilexrobotics/piper_sdk.git
cd piper_sdk
pip3 install .

- Reference document: https://github.com/agilexrobotics/piper_sdk/blob/1_0_0_beta/README(ZH).MD
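Before moving on, the environment listed above can be sanity-checked from Python. The following is only a minimal sketch: the helper name check_environment and the tool list are illustrative assumptions (candump is one of the executables shipped by can-utils), and it only checks that the tools are on the PATH, not that the SDK itself is installed correctly.

```python
import shutil
import sys

# Executables provided by the packages installed above
# (candump ships with can-utils). This list is an assumption for illustration.
REQUIRED_TOOLS = ("git", "pip3", "candump", "ethtool")


def check_environment(min_python=(3, 6), tools=REQUIRED_TOOLS, which=shutil.which):
    """Return a list of problems found; an empty list means nothing is missing."""
    problems = []
    if sys.version_info[:2] < min_python:
        problems.append("Python %d.%d or higher is required" % min_python)
    for tool in tools:
        # shutil.which returns None when the executable is not on the PATH.
        if which(tool) is None:
            problems.append("missing tool: %s" % tool)
    return problems


for problem in check_environment():
    print("WARNING:", problem)
```

If nothing is printed, the interpreter version and the command-line tools from this section were found.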

2. Operation Steps for Continuous Trajectory Recording and Replay Function

2.1. Operation Steps

-

Power on the robotic arm and connect the USB-to-CAN module to the computer (ensure that only one CAN module is connected).

-

Open the terminal and activate the CAN module.

sudo ip link set can0 up type can bitrate 1000000 -

Clone the remote code repository.

git clone https://github.com/agilexrobotics/Agilex-College.git -

Switch to the recordAndPlayTraj directory.

cd Agilex-College/piper/recordAndPlayTraj/ -

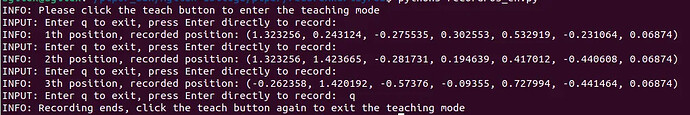

Run the recording program.

python3 recordTrajectory_en.py -

Short-press the teach button to enter the teaching mode.

-

Set the initial position of the robotic arm. After pressing Enter in the terminal, drag the robotic arm to record the trajectory.

-

After recording, short-press the teach button again to exit the teaching mode.

-

Precautions before replay:

When exiting the teaching mode for the first time, a specific initialization process is required to switch from the teaching mode to the CAN mode. Therefore, the replay program will automatically perform a reset operation to return joints 2, 3, and 5 to safe positions (zero points) to prevent the robotic arm from suddenly falling due to gravity and causing damage. In special cases, manual assistance may be needed to return joints 2, 3, and 5 to zero points. -

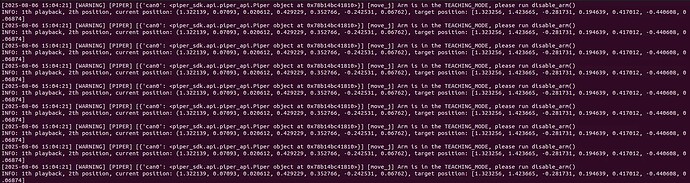

Run the replay program.

python3 playTrajectory_en.py -

After successful enabling, press Enter in the terminal to play the trajectory.
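If the recording or replay program cannot reach the arm, one thing worth checking is whether the can0 interface from the activation step is actually up. The sketch below is an assumption-laden illustration rather than part of the repository: it reads the Linux sysfs flags file for the interface and tests the IFF_UP bit. The function name and the sysfs_root parameter are hypothetical, and an administratively "up" interface does not by itself prove that the CAN bus is healthy.

```python
import os

IFF_UP = 0x1  # Linux interface flag: interface is administratively up


def can_interface_is_up(name="can0", sysfs_root="/sys/class/net"):
    """Return True if the interface exists and its IFF_UP flag is set."""
    flags_path = os.path.join(sysfs_root, name, "flags")
    try:
        with open(flags_path) as handle:
            # The file holds a hexadecimal value such as "0x41".
            flags = int(handle.read().strip(), 16)
    except (OSError, ValueError):
        # Missing interface or unreadable content: treat as "not up".
        return False
    return bool(flags & IFF_UP)


if not can_interface_is_up("can0"):
    print("WARNING: can0 is missing or down; re-run the 'ip link set' step")
```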

2.2. Recording Techniques and Strategies

Motion Planning Strategies:

Before starting the recording, the trajectory to be recorded should be planned:

- Starting Position Selection:

  - Select a safe position of the robotic arm as the starting point.

  - Ensure that the starting position is convenient for initialization during subsequent replay.

  - Avoid choosing a position close to the joint limit.

- Trajectory Path Design:

  - Plan a smooth motion path to avoid sharp direction changes.

  - Consider the kinematic constraints of the robotic arm to avoid singular positions.

  - Reserve sufficient safety margins to prevent collisions.

- Speed Control:

  - Maintain a moderate movement speed: fast enough that the recording does not drag on, but slow enough to preserve recording quality.

  - Appropriately slow down at key positions to improve accuracy.

  - Avoid sudden acceleration or deceleration.
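The speed guidance above can be checked after the fact on a recorded file. The sketch below assumes the CSV layout used by the recording program described later (each row is a wait time followed by the position values). The function names and the max_rate threshold are hypothetical, and the units are whatever the SDK reports, so a suitable threshold has to be chosen experimentally.

```python
import csv
import io


def load_trajectory(text):
    """Parse rows of 'wait_time,p1,p2,...' into (wait_time, positions) tuples."""
    rows = []
    for record in csv.reader(io.StringIO(text)):
        if not record:
            continue  # Skip blank lines
        values = [float(v) for v in record]
        rows.append((values[0], tuple(values[1:])))
    return rows


def find_fast_segments(rows, max_rate):
    """Return indices of samples whose largest per-axis rate exceeds max_rate."""
    flagged = []
    for i in range(1, len(rows)):
        wait_time, pos = rows[i]
        prev = rows[i - 1][1]
        if wait_time <= 0:
            continue  # No elapsed time recorded, so no rate can be computed
        rate = max(abs(a - b) for a, b in zip(pos, prev)) / wait_time
        if rate > max_rate:
            flagged.append(i)
    return flagged


sample = "0.0,0.0,0.0\n0.1,0.1,0.0\n0.1,1.1,0.0\n"
print(find_fast_segments(load_trajectory(sample), max_rate=5.0))
```

Flagged indices point at places where the arm was dragged abruptly and the trajectory may be worth re-recording.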

3. Problems and Solutions

Problem 1: No Piper Class


Reason: The currently installed SDK is not the version with API.

Solution: Execute pip3 uninstall piper_sdk to uninstall the current SDK, and then install the 1_0_0_beta version of the SDK according to the method in 1.2. Environment Configuration.

Problem 2: The Robotic Arm Does Not Move, and the Terminal Outputs as Follows

Reason: The teach button was short-pressed during the program operation.

Solution: Check whether the indicator light of the teach button is off. If it is, re-run the program. If not, short-press the teach button to exit the teaching mode first and then run the program.

4. Implementation of Trajectory Recording Program

The trajectory recording program is the data collection module of the system, responsible for capturing the position information of the continuous joint movements of the robotic arm in the teaching mode.

4.1. Program Initialization and Configuration

4.1.1. Parameter Configuration Design

import os

# Whether there is a gripper
have_gripper = True

# Maximum recording time in seconds (0 = unlimited, stop by terminating program)
record_time = 10.0

# Teach mode detection timeout in seconds
timeout = 10.0

# CSV file path for saving trajectory
CSV_path = os.path.join(os.path.dirname(__file__), "trajectory.csv")

Analysis of Configuration Parameters:

- The have_gripper parameter is of boolean type, and True indicates the presence of a gripper.

- The record_time parameter sets the maximum recording time. The default value is 10s, that is, it records a 10-second trajectory. When this parameter is set to 0, the system enters the infinite recording mode.

- The timeout parameter sets the timeout for teaching mode detection. After starting the program, if the teaching mode is not entered within 10s, the program will exit.

- The CSV_path parameter sets the save path of the trajectory file. By default, it is in the same path as the program, and the file name is trajectory.csv.
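The "0 means unlimited" convention for record_time can be expressed as a single condition. This is a minimal sketch of that rule only; the helper name recording_active is hypothetical, and the actual loop condition in the repository may be written differently.

```python
def recording_active(start, now, record_time):
    """Return True while recording should continue.

    record_time == 0 disables the time limit, so recording only stops
    when the program is terminated.
    """
    return record_time == 0 or (now - start) < record_time


# With the default of 10 seconds, recording stops once 10 s have elapsed.
print(recording_active(start=0.0, now=5.0, record_time=10.0))
print(recording_active(start=0.0, now=10.0, record_time=10.0))
# With record_time set to 0, elapsed time is ignored.
print(recording_active(start=0.0, now=3600.0, record_time=0))
```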

4.1.2. Robotic Arm Connection and Initialization

# Initialize and connect to robotic arm

piper = Piper("can0")

piper.connect()

interface = piper.init()

time.sleep(0.1)

Analysis of Connection Mechanism:

- Piper() is the core class of the API, which simplifies some common methods based on the interface.

- init() creates and returns an interface instance, which can be used to call some special methods of Piper.

- connect() starts a thread to connect to the CAN port and process CAN data.

- The addition of time.sleep(0.1) ensures that the connection is fully established. In embedded systems, hardware initialization usually takes a certain amount of time, and this short delay ensures the reliability of subsequent operations.

4.1.3. Position Acquisition and Data Storage

4.1.3.1. Position Acquisition Function

def get_pos():
    '''Get current joint angles and, if a gripper is fitted, its opening distance'''
    joint_state = piper.get_joint_states()[0]
    if have_gripper:
        # Append the gripper opening distance to the joint tuple
        return joint_state + (piper.get_gripper_states()[0][0], )
    return joint_state

4.1.3.2. Position Change Detection

current_pos = get_pos()
if current_pos != last_pos:  # Record only when position changes
    wait_time = round(time.time() - last_time, 4)
    print(f"INFO: Wait time: {wait_time:0.4f}s, current position: {current_pos}")
    last_pos = current_pos
    last_time = time.time()
    csv.write(f"{wait_time}," + ",".join(map(str, current_pos)) + "\n")

Position Processing:

current_pos != last_pos: A sample is written only when the position differs from the previously recorded one. While the robotic arm is stationary, or when two consecutive readings are identical, nothing is written, which greatly reduces the amount of recorded data.

Time Processing:

round(time.time() - last_time, 4):

- Rounding to four decimal places gives a precision of 0.1 milliseconds, which is sufficient for robotic arm control.

- Shortening the stored time values reduces the storage space they occupy.
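The change detection and time rounding described above can be tried without hardware. The sketch below replaces the arm with a list of (timestamp, position) samples and writes to an in-memory buffer. The function name record_changes is hypothetical, and writing 0.0 as the wait time of the first row is an assumption of this sketch, not necessarily what the repository does.

```python
import io


def record_changes(samples, out, precision=4):
    """Write one CSV row per position change and return the number of rows.

    samples: iterable of (timestamp_seconds, position_tuple).
    Each row is 'wait_time,p1,p2,...', where wait_time is the time since
    the previously written row, rounded to `precision` decimal places.
    """
    last_pos = None
    last_time = None
    written = 0
    for timestamp, pos in samples:
        if pos == last_pos:
            continue  # Arm has not moved: skip the duplicate sample
        wait_time = 0.0 if last_time is None else round(timestamp - last_time, precision)
        out.write(f"{wait_time}," + ",".join(map(str, pos)) + "\n")
        last_pos, last_time = pos, timestamp
        written += 1
    return written


buffer = io.StringIO()
samples = [(0.0, (1, 2)), (0.01, (1, 2)), (0.02, (1, 3)), (0.03, (1, 3))]
print(record_changes(samples, buffer))
print(buffer.getvalue(), end="")
```

Of the four samples, only the two with a new position are written, which is the data reduction the position check is meant to achieve.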

4.1.4. Mode Detection and Switching

print("step 1: Press teach button to enter teach mode")

# Compute the deadline once, before polling starts
over_time = time.time() + timeout

while interface.GetArmStatus().arm_status.ctrl_mode != 2:
    if over_time < time.time():
        print("ERROR: Teach mode detection timeout. Please check if teach mode is enabled")
        exit()
    time.sleep(0.01)

Status Polling Strategy:

The program uses the polling method to detect the control mode. This method has the following characteristics:

- Simple implementation and clear logic.

- Low requirements for system resources.

Timeout Protection Mechanism:

The 10-second timeout setting takes into account the needs of actual operations:

- Time for users to understand the prompt information.

- Time to find and press the teach button.

- Time for system response and state switching.

- Fault-tolerance handling in abnormal situations.
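The polling-with-timeout pattern described above can be factored into a small reusable helper. This is a generic sketch, not code from the repository: the name wait_until and its parameters are hypothetical, and time.monotonic is used instead of time.time so that system clock adjustments cannot shorten or extend the timeout.

```python
import time


def wait_until(predicate, timeout, poll_interval=0.01,
               clock=time.monotonic, sleep=time.sleep):
    """Poll predicate() until it returns True or `timeout` seconds elapse.

    Returns True if the condition was met, False on timeout.
    The deadline is computed once, before the loop starts.
    """
    deadline = clock() + timeout
    while not predicate():
        if clock() > deadline:
            return False
        sleep(poll_interval)
    return True


# Example with a condition that becomes true on the third check.
checks = iter([False, False, True])
print(wait_until(lambda: next(checks), timeout=1.0))
```

In the recording program, the predicate would be the check that the control mode reported by the arm equals the teaching mode value, and a False result would trigger the error message and exit.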

Safety Features of Teaching Mode: