
Examples for asr_recognizer_prediction_psm

Description: Several short examples showing how to start and use asr_recognizer_prediction_psm in different modes.

Tutorial Level: BEGINNER



Startup

- start the ROS master: roscore

- start the kinematic chain: roslaunch asr_kinematic_chain_dome transformation_publishers_for_kinect_left.launch

Tutorial

With inference, Python:

- start the server: roslaunch asr_recognizer_prediction_psm psm_node.launch

- change into the package directory: roscd asr_recognizer_prediction_psm

- call the service by running the test script: python python/psm_node_test.py

Start RViz (rviz) and open the config file doc/recognizer_prediction_psm.rviz.

psm_node_test.py generates two AsrObjects, one of type 'Cup' and one of type 'PlateDeep':

```python
obj = AsrObject()
...
objects.append(obj)

obj2 = AsrObject()
```

It sets up and calls the service with the list of these objects:

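```python
import rospy

# Block until the node offers the service, then create a proxy for it
rospy.wait_for_service('/psm_node')
service_proxy = rospy.ServiceProxy('/psm_node', psm_node)
response = service_proxy(objects)
```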

Parameters are set in the launch file, for example:

- scene_model_filename: path to the .xml file containing the scene models (in this tutorial, model/scene_dome1.xml is used)

- num_hypotheses: number of hypotheses to be created (100)

- base_frame_id: the base frame (/map)
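As a rough illustration of where these values live, the sketch below embeds a hypothetical launch-file excerpt and reads the three parameters back with Python's standard xml.etree.ElementTree module. Only the parameter names and values come from this tutorial; the `<node>` attributes and the exact layout of psm_node.launch are assumptions.

```python
import xml.etree.ElementTree as ET

# Hypothetical launch-file excerpt. The <param> names and values follow the
# tutorial; the <node> name/pkg/type attributes are assumptions.
LAUNCH_XML = """
<launch>
  <node name="psm_node" pkg="asr_recognizer_prediction_psm" type="psm_node">
    <param name="scene_model_filename" value="model/scene_dome1.xml"/>
    <param name="num_hypotheses" value="100"/>
    <param name="base_frame_id" value="/map"/>
  </node>
</launch>
"""


def read_params(launch_xml: str) -> dict:
    """Return a dict mapping every <param> name to its value string."""
    root = ET.fromstring(launch_xml.strip())
    return {p.get("name"): p.get("value") for p in root.iter("param")}


params = read_params(LAUNCH_XML)
# ElementTree returns attribute values as strings; convert numeric ones.
num_hypotheses = int(params["num_hypotheses"])
print(params["base_frame_id"], num_hypotheses)  # /map 100
```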

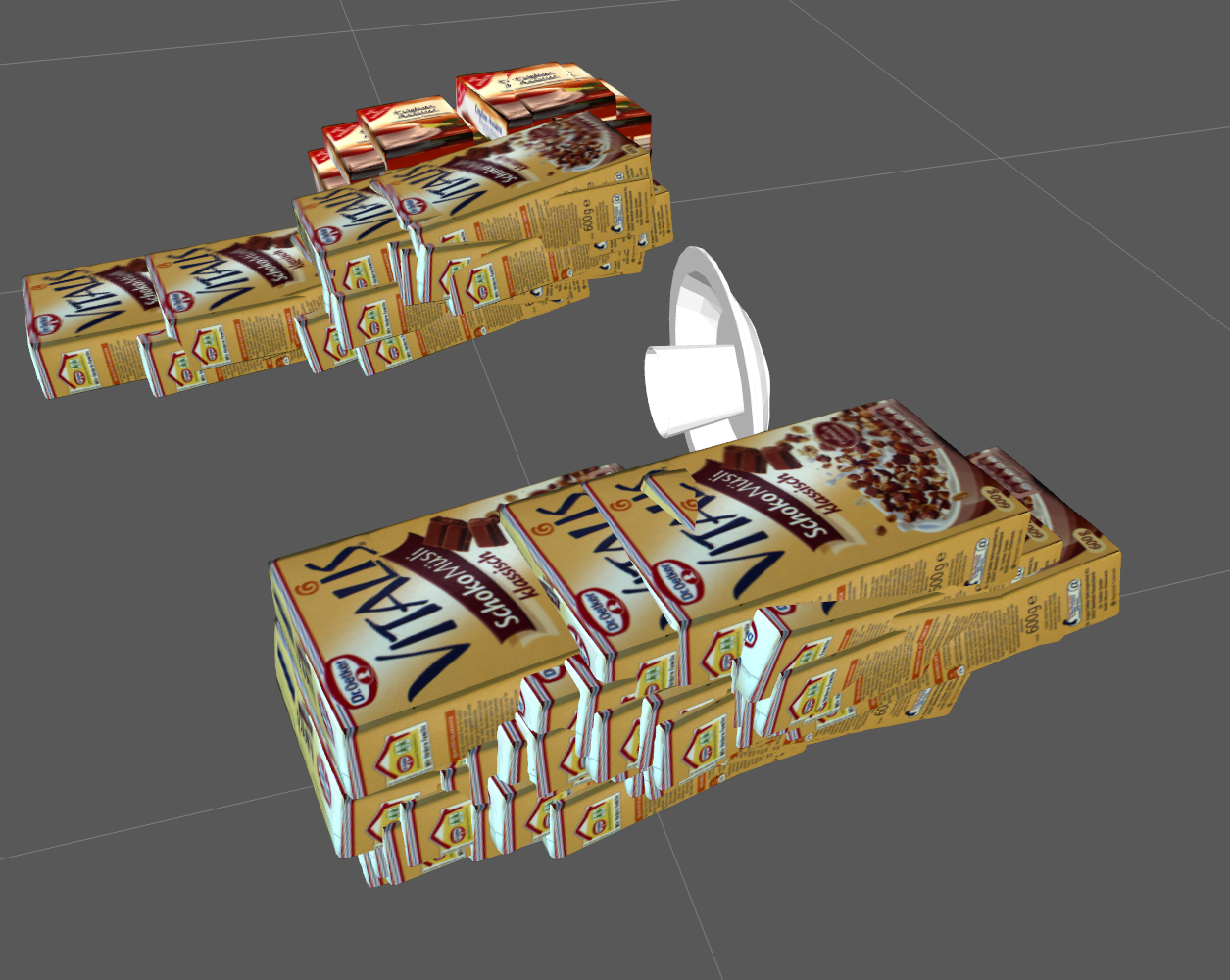

Not all scene objects are found. Because of this, the poses of the other objects in the two scenes specified in the .xml file are predicted based on the poses of the given two objects (in the center of the image below):
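The basic idea of predicting a missing object's pose from an observed one can be illustrated with a strongly simplified 2D sketch: if the model stores where a missing object typically sits relative to an observed object, composing the observed pose with that relative pose yields a predicted pose. This is not the PSM algorithm itself, which works with full 3D poses and samples many hypotheses; the `Pose2D` type, the `compose` function and all numbers are hypothetical.

```python
import math
from dataclasses import dataclass


@dataclass
class Pose2D:
    """Planar pose: position (x, y) in metres and heading theta in radians."""
    x: float
    y: float
    theta: float


def compose(parent: Pose2D, relative: Pose2D) -> Pose2D:
    """Map a pose given relative to `parent` into the frame `parent` is expressed in."""
    c, s = math.cos(parent.theta), math.sin(parent.theta)
    return Pose2D(
        x=parent.x + c * relative.x - s * relative.y,
        y=parent.y + s * relative.x + c * relative.y,
        theta=parent.theta + relative.theta,
    )


# Hypothetical values: an observed object in the base frame and a learned
# offset of a missing object relative to it.
observed = Pose2D(1.0, 2.0, math.pi / 2)
offset_of_missing = Pose2D(0.3, 0.0, 0.0)
predicted = compose(observed, offset_of_missing)
print(predicted)  # approximately Pose2D(x=1.0, y=2.3, theta=1.5708)
```

Because the observed object is rotated by 90 degrees, an offset along its local x axis ends up along the base frame's y axis.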

If the comment in front of the line that adds a third object is removed, so that this object is added, nothing changes apart from the influence of randomness. This is because the third object is of type 'Smacks', which does not appear in any scene of the .xml file.
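Why the 'Smacks' object has no effect can be sketched as a simple membership check: only observations whose type occurs in at least one scene of the model can contribute. The scene names and contents below are hypothetical placeholders (the tutorial only names 'Cup' and 'PlateDeep' as scene objects), and the helper `relevant_observations` is an illustration, not the package's code.

```python
from typing import Dict, List, Set

# Hypothetical scene model: scene name -> object types occurring in it.
# Only 'Cup' and 'PlateDeep' are taken from the tutorial.
SCENE_MODEL: Dict[str, Set[str]] = {
    "scene_a": {"Cup", "PlateDeep"},
    "scene_b": {"Cup"},
}


def relevant_observations(observed_types: List[str],
                          scene_model: Dict[str, Set[str]]) -> List[str]:
    """Keep only observed types that appear in at least one scene."""
    known = set().union(*scene_model.values())
    return [t for t in observed_types if t in known]


print(relevant_observations(["Cup", "PlateDeep", "Smacks"], SCENE_MODEL))
# ['Cup', 'PlateDeep']
```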

Without inference, Python:

- start the server: roslaunch asr_recognizer_prediction_psm recognizer_prediction_psm.launch

- change into the package directory: roscd asr_recognizer_prediction_psm

- run the test script: python python/recognizer_prediction_psm_test.py

Start RViz (rviz) and open the config file doc/recognizer_prediction_psm.rviz.

recognizer_prediction_psm_test.py generates an AsrObject:

```python
obj = AsrObject()
...
objects.append(obj)
```

It needs the path to the .xml file in which the scene models are stored:

```python
import rospkg

# RosPack must be instantiated before get_path() can be called on it
path = rospkg.RosPack().get_path('asr_recognizer_prediction_psm') + '/models/dome_scene_1.xml'
```

The names of the scenes in the model file are put in a list:

```python
scenes = []
scenes.append('background')
scenes.append('marker_scene1')
scenes.append('marker_scene2')
```

Another list stores the probabilities of each scene:

```python
scene_probabilities = []
scene_probabilities.append(0.25)
```
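Since one list holds scene names and the other their probabilities, the two are presumably matched by position: the i-th probability belongs to the i-th scene. The hypothetical helper below checks this before the service call. The values 0.5 and 0.25 for the second and third scene are placeholders, since the tutorial only shows the first probability (0.25).

```python
from typing import Dict, List


def pair_scene_probabilities(scenes: List[str],
                             probabilities: List[float]) -> Dict[str, float]:
    """Pair each scene name with the probability at the same list index."""
    if len(scenes) != len(probabilities):
        raise ValueError("need exactly one probability per scene")
    for p in probabilities:
        if not 0.0 <= p <= 1.0:
            raise ValueError(f"probability out of range: {p}")
    return dict(zip(scenes, probabilities))


scenes = ['background', 'marker_scene1', 'marker_scene2']
# Placeholder values: only the first one (0.25) appears in the tutorial.
scene_probabilities = [0.25, 0.5, 0.25]
print(pair_scene_probabilities(scenes, scene_probabilities))
# {'background': 0.25, 'marker_scene1': 0.5, 'marker_scene2': 0.25}
```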

In addition, the service call needs the number of hypotheses to be generated and the name of the base frame into which objects and hypotheses are transformed:

```python
num_overall_hypothesis = 10
base_frame = '/map'
```

Finally, the service can be set up and then called:

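```python
import rospy

# Block until the node offers the service, then create a proxy for it
rospy.wait_for_service('/recognizer_prediction_psm')
generate_hypothesis = rospy.ServiceProxy('/recognizer_prediction_psm', recognizer_prediction_psm)
response = generate_hypothesis(path, scenes, scene_probabilities, num_overall_hypothesis, objects, base_frame)
```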

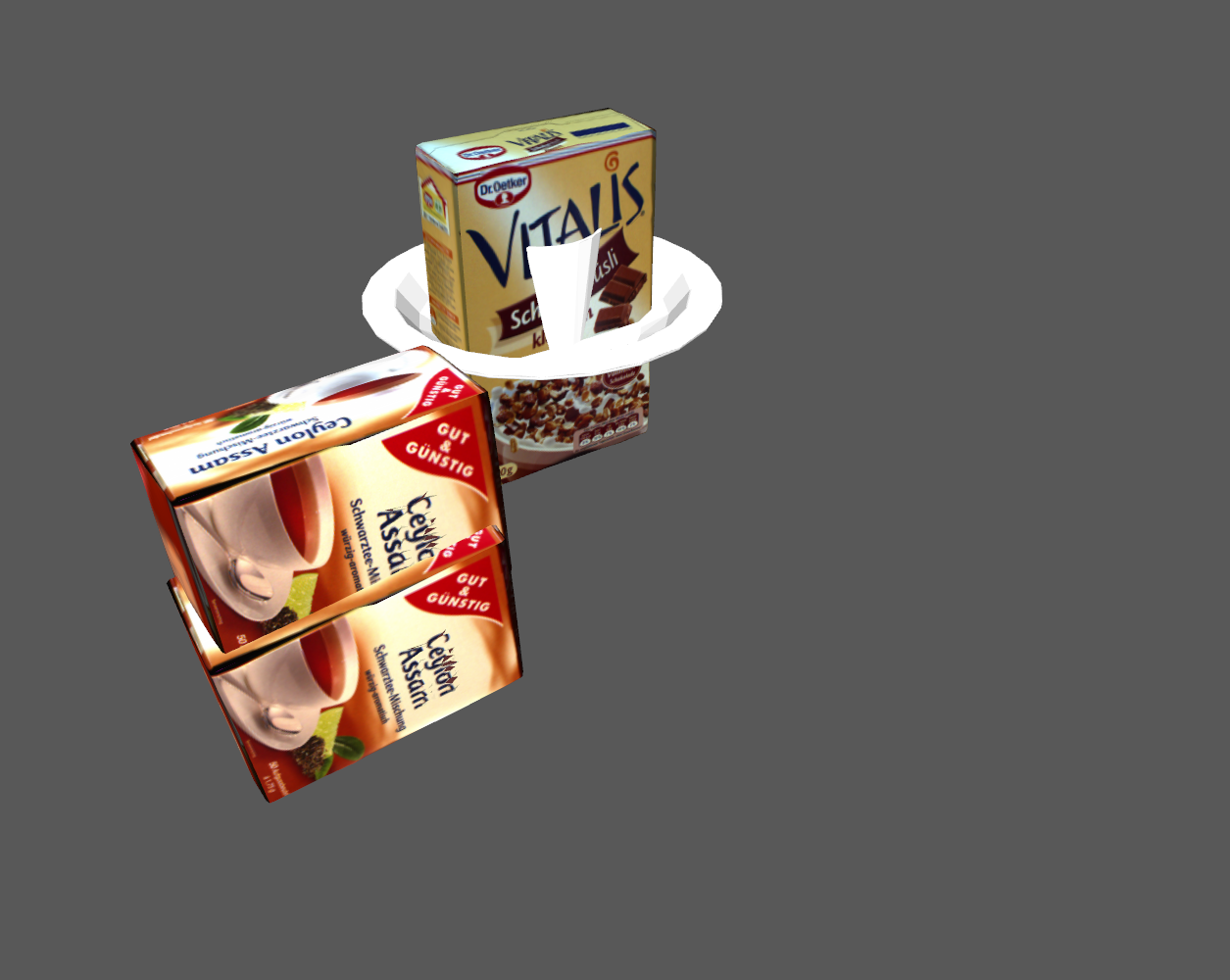

When running this script, not all scene objects will be found. Hypotheses for the missing ones will be generated based on the observed object of type 'VitalisSchoko' (note that the requested number of overall hypotheses was 10 here instead of 100 above):

If the comment character # before objects.append(obj2) is removed, so that another object is added to the observations, the window running the ROS node shows that the new object 'PlateDeep' was found and integrated; the same happens if the last object, 'Cup', is included as well. After this, the only predicted object is 'CeylonTea', which receives more hypotheses for itself and exhibits a broader range of pose predictions than above.
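Which objects still need predicting can be sketched as a set difference between a scene's object types and the observed types. The single scene and its contents below are hypothetical, chosen only to mirror the behaviour described above (observing 'PlateDeep' and 'Cup' in addition to 'VitalisSchoko' leaves 'CeylonTea' as the only missing object); they are not read from dome_scene_1.xml.

```python
from typing import Dict, List, Set


def missing_objects(scene_model: Dict[str, Set[str]],
                    observed: Set[str]) -> Dict[str, List[str]]:
    """For each scene, list (sorted) the object types that were not observed."""
    return {scene: sorted(types - observed) for scene, types in scene_model.items()}


# Hypothetical scene contents inferred from the tutorial text.
scene_model = {"example_scene": {"VitalisSchoko", "PlateDeep", "Cup", "CeylonTea"}}

print(missing_objects(scene_model, {"VitalisSchoko"}))
# {'example_scene': ['CeylonTea', 'Cup', 'PlateDeep']}
print(missing_objects(scene_model, {"VitalisSchoko", "PlateDeep", "Cup"}))
# {'example_scene': ['CeylonTea']}
```

With fewer missing objects, the same hypothesis budget is shared by fewer objects, which matches the observation that 'CeylonTea' receives more hypotheses.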

Without inference, C++:

- start the server: roslaunch asr_recognizer_prediction_psm recognizer_prediction_psm.launch

- run the C++ client: rosrun asr_recognizer_prediction_psm asr_recognizer_prediction_psm_client 10

This client sets the same parameters as the Python script (but uses different objects) in C++, takes the number of hypotheses as a command-line argument (here: 10), and generates the hypotheses.

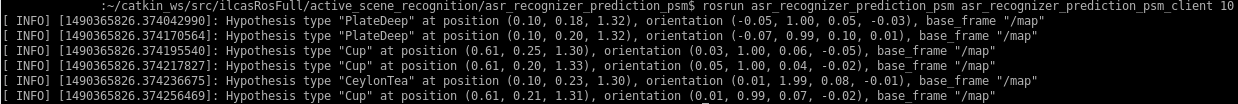

Output for asr_recognizer_prediction_psm_client:

Only an object of type 'VitalisSchoko' was found. The requested number of hypotheses (10) was distributed among the remaining objects of the scene and scaled with the scene probability. This leads to an unequal number of hypotheses per missing object and to fewer than 10 hypotheses being generated in total.
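The arithmetic described above can be sketched as follows. The exact formula used by the package is not given in this tutorial; this sketch assumes that each scene receives a share of the requested hypotheses proportional to its probability, that this share is split evenly among the scene's missing objects, and that fractional counts are truncated. The function name, scene probabilities and object names (ObjA to ObjD) are hypothetical.

```python
import math
from typing import Dict, List


def hypotheses_per_object(num_overall: int,
                          scene_probabilities: Dict[str, float],
                          missing_objects: Dict[str, List[str]]) -> Dict[str, int]:
    """Split num_overall hypotheses across missing objects, weighted by scene probability.

    Assumption: each missing object of a scene gets
    floor(num_overall * p_scene / n_missing) hypotheses; counts from
    several scenes are added up.
    """
    counts: Dict[str, int] = {}
    for scene, missing in missing_objects.items():
        if not missing:
            continue  # nothing to predict in this scene
        per_object = math.floor(num_overall * scene_probabilities[scene] / len(missing))
        for obj in missing:
            counts[obj] = counts.get(obj, 0) + per_object
    return counts


# Hypothetical example: placeholder probabilities and scene contents.
counts = hypotheses_per_object(
    10,
    {"marker_scene1": 0.5, "marker_scene2": 0.25},
    {"marker_scene1": ["ObjA", "ObjB", "ObjC"], "marker_scene2": ["ObjD"]},
)
print(counts, sum(counts.values()))
# {'ObjA': 1, 'ObjB': 1, 'ObjC': 1, 'ObjD': 2} 5
```

Under these assumptions, scaling by the scene probability and truncating per object both lose hypotheses, so the total (5) stays below the requested 10 and the counts differ between objects.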