Note: This tutorial assumes that you have completed the previous tutorials: Stereo calibration.


Technical description of Kinect calibration

Description: Technical aspects of the Kinect device and its calibration

Tutorial Level: ADVANCED

Authors: Kurt Konolige, Patrick Mihelich

Kinect operation

Imager and projector placement

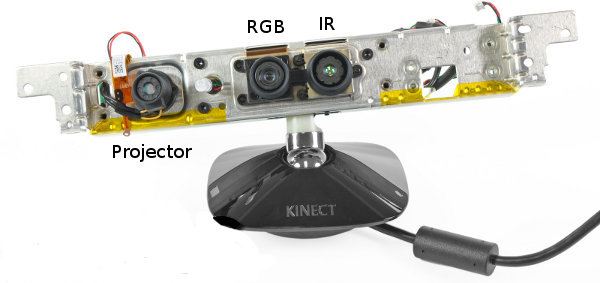

The Kinect device has two cameras and one laser-based IR projector. The figure shows their placement on the device. Each lens is associated with a camera or a projector.

This image is provided by iFixit.

All the calibrations done below are based on IR and RGB images of chessboard patterns, using OpenCV's calibration routines. See the calibration tutorial for how to get these images.

Depth calculation

Note: this is the authors' best guess at the depth calculation method, based on examining the output of the device and the calibration algorithms we developed.

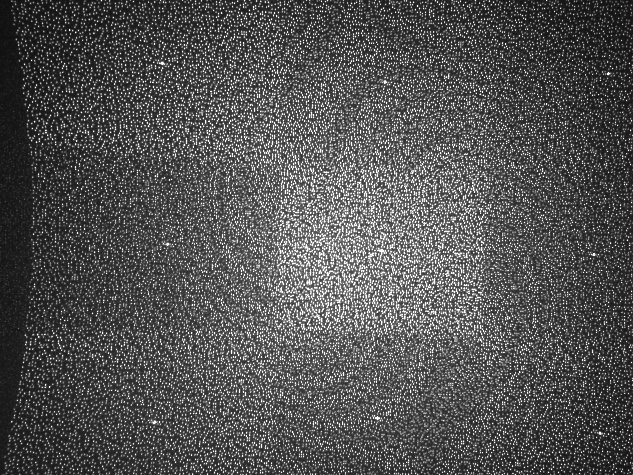

The IR camera and the IR projector form a stereo pair with a baseline of approximately 7.5 cm. The IR projector sends out a fixed pattern of light and dark speckles, shown in the figure. The pattern is generated from a set of diffraction gratings, with special care to lessen the effect of zero-order propagation of a center bright dot (see the PrimeSense patent).

Depth is calculated by triangulation against a known pattern from the projector. The pattern is memorized at a known depth. For a new image, we want to calculate the depth at each pixel. For each pixel in the IR image, a small correlation window (9x9 or 9x7, see below) is used to compare the local pattern at that pixel with the memorized pattern at that pixel and 64 neighboring pixels in a horizontal window (see below for how we estimate the 64-pixel search). The best match gives an offset from the known depth, in terms of pixels: this is called disparity. The Kinect device performs a further interpolation of the best match to get sub-pixel accuracy of 1/8 pixel (again, see below for how this is estimated). Given the known depth of the memorized plane, and the disparity, an estimated depth for each pixel can be calculated by triangulation.
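The matching step described above can be sketched as a horizontal block search against the memorized pattern. This is a minimal sketch, not the Kinect's actual algorithm: the sum-of-absolute-differences (SAD) cost, the one-sided search over 64 offsets, the parabolic sub-pixel fit, and the names `sad` and `match_pixel` are all assumptions made for illustration.

```python
# Sketch of horizontal block matching against a memorized reference pattern.
# ASSUMPTIONS: SAD cost, one-sided search over max_disp offsets, and parabolic
# sub-pixel refinement; the Kinect's real metric and interpolation are unknown.

def sad(img, ref, x, y, dx, half_w, half_h):
    """SAD between the window centred at (x, y) in img and the window
    centred at (x + dx, y) in the memorized reference pattern ref."""
    total = 0
    for j in range(-half_h, half_h + 1):
        for i in range(-half_w, half_w + 1):
            total += abs(img[y + j][x + i] - ref[y + j][x + dx + i])
    return total


def match_pixel(img, ref, x, y, max_disp=64, win_w=9, win_h=9, subpix=8):
    """Best horizontal offset of pixel (x, y), in 1/subpix-pixel units.

    Assumes an odd window size and that every compared window lies
    inside both images.
    """
    half_w, half_h = win_w // 2, win_h // 2
    costs = [sad(img, ref, x, y, dx, half_w, half_h) for dx in range(max_disp)]
    best = min(range(max_disp), key=costs.__getitem__)
    # Fit a parabola through the best cost and its two neighbours to
    # estimate a fractional offset in [-0.5, 0.5].
    frac = 0.0
    if 0 < best < max_disp - 1:
        c0, c1, c2 = costs[best - 1], costs[best], costs[best + 1]
        denom = c0 - 2 * c1 + c2
        if denom != 0:
            frac = 0.5 * (c0 - c2) / denom
    # Quantize to 1/subpix pixel (1/8 pixel in the Kinect's case).
    return round((best + frac) * subpix)
```

For an image that is the reference pattern shifted by 10 pixels, `match_pixel` should return a value within half a pixel of 80, that is, 10 pixels expressed in 1/8-pixel units.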

Disparity to depth relationship

For a normal stereo system, the cameras are calibrated so that the rectified images are parallel and have corresponding horizontal lines. In this case, the relationship between disparity and depth is given by:

z = b*f / d,

where z is the depth (in meters), b is the horizontal baseline between the cameras (in meters), f is the (common) focal length of the cameras (in pixels), and d is the disparity (in pixels). At zero disparity, the rays from each camera are parallel, and the depth is infinite. Larger values for the disparity mean shorter distances.

The Kinect returns a raw disparity that is not normalized in this way; that is, a zero Kinect disparity does not correspond to infinite distance. The Kinect disparity is related to a normalized disparity by the relation

d = 1/8 * (doff - kd),

where d is a normalized disparity, kd is the Kinect disparity, and doff is an offset value particular to a given Kinect device. The factor 1/8 appears because the values of kd are in 1/8 pixel units.
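Combining the two relations above gives depth directly from a raw Kinect disparity. The following is a minimal sketch with the hypothetical function name `kinect_disparity_to_depth`; the example values b ≈ 0.075 m, f ≈ 580 pixels, and doff ≈ 1090 are the typical figures quoted in this tutorial, not calibration results for any particular device.

```python
# Sketch: raw Kinect disparity -> depth, combining d = (doff - kd) / 8
# with z = b*f / d. The function name is hypothetical.

def kinect_disparity_to_depth(kd, b, f, doff):
    """Depth z in metres from a raw Kinect disparity kd (1/8-pixel units).

    b: IR camera-to-projector baseline in metres
    f: IR focal length in pixels
    doff: device-specific raw disparity offset
    """
    d = (doff - kd) / 8.0  # normalized disparity in pixels
    if d <= 0:
        return None  # zero or negative normalized disparity: no finite depth
    return b * f / d  # z = b*f / d
```

With those typical values, kd = 916 gives d = 21.75 pixels and a depth of about 2.0 m.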

Calculating baseline and disparity offset

A monocular calibration of the IR camera finds the focal length, distortion parameters, and lens center of the camera (see Lens distortion and focal length below). It also provides estimates for the 3D position of the chessboard target corners. From these, the measured projections of the corners in the IR image, and the corresponding raw disparity values, we do a least-squares fit to the equation

z = b*f / (1/8 * (doff - kd))

to find b and doff. The value for b is always about 7.5 cm, which is consistent with the measured distance between the IR and projector lenses. doff is typically around 1090.
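One way to perform this fit is to note that 1/z = doff/(8*b*f) - kd/(8*b*f) is linear in kd, so an ordinary least-squares line through the (kd, 1/z) samples yields b and doff from its slope and intercept. This is a sketch under that assumption: it minimizes error in inverse depth rather than in z, which may differ from the authors' fit, and `fit_baseline_and_offset` is a hypothetical name. The depths z would come from the chessboard corner positions estimated by the monocular calibration.

```python
# Sketch: estimate b and doff by a linearized least-squares fit.
# ASSUMPTION: fitting 1/z (linear in kd) instead of a direct nonlinear fit in z.

def fit_baseline_and_offset(kd_values, z_values, f):
    """Fit baseline b (metres) and offset doff from (kd, z) samples.

    Uses 1/z = doff/(8*b*f) - kd/(8*b*f); f is the IR focal length in pixels.
    """
    n = len(kd_values)
    if n < 2 or n != len(z_values):
        raise ValueError("need at least two matching (kd, z) samples")
    xs = [float(k) for k in kd_values]
    ys = [1.0 / z for z in z_values]
    mx, my = sum(xs) / n, sum(ys) / n
    sxx = sum((x - mx) ** 2 for x in xs)
    if sxx == 0:
        raise ValueError("kd values must not all be equal")
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    slope = sxy / sxx             # equals -1 / (8*b*f)
    intercept = my - slope * mx   # equals doff / (8*b*f)
    b = -1.0 / (8.0 * f * slope)
    doff = -intercept / slope
    return b, doff
```

A direct nonlinear fit in z could use this result as its starting point.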

IR camera to depth offset

The Kinect device can return the IR image, as well as a depth image created from the IR image. There is a small, fixed offset between the two, which appears to be a consequence of the correlation window size. In the raw disparity image below, there is a small black band, 8 pixels wide, on the right side of the image.

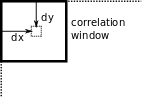

The null band is exactly what would be expected if the Kinect used a correlation window 9 pixels wide. To see this, consider the diagram below. Assume a 9x9 correlation window, which extends 4 pixels on each side of its center, and count pixels from 1. Then the first pixel that could have an associated depth would be at (5,5) in the upper-left corner. Similarly, at the right edge, the last pixel to get a depth would be at (N-4,5), where N is the width of the image. Thus, there are a total of eight horizontal pixels (four on each side) at which depth cannot be calculated. The Kinect appears to send the raw disparity image starting at the first pixel calculated; hence the offset in the horizontal and vertical directions.
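The border arithmetic can be checked with a small helper. This is a sketch assuming an odd window width and pixels counted from 1; `valid_range` is a hypothetical name.

```python
# Sketch: which window centres fit fully inside a row (pixels counted from 1).
# ASSUMPTION: odd window width, window centred on the pixel.

def valid_range(n, win):
    """Return (first, last, n_invalid) for an n-pixel row and win-pixel window."""
    half = win // 2    # window extends this far on each side of its centre
    first = half + 1   # leftmost centre with a full window
    last = n - half    # rightmost centre with a full window
    return first, last, n - (last - first + 1)
```

For a 640-pixel row and a 9-pixel window, it returns (5, 636, 8): four uncovered columns on each side, eight in total.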

In the horizontal direction, the width of the correlation window follows from the 8-pixel null band: 8 + 1 = 9 pixels. In the vertical direction, there is no such band. That's because the IR imager, an Aptina MT9M001, has a resolution of 1280x1024. If the Kinect uses 2x2 binning, the reduced resolution is 640x512, but the Kinect returns only a 640x480 raw disparity image. There is no room on the imager in the horizontal direction to calculate more depth information, but there are 32 spare rows in the vertical direction. Hence, the disparity image does not tell us directly the vertical size of the correlation window. We have performed calibration tests between the disparity image and the IR image, using a target with a transparent background, and obtained a constant offset on the order of -4.8 x -3.9 pixels. This offset is approximate, due to the difficulty of finding crisp edges on the disparity image target. It is consistent with a 9x9 or 9x7 correlation window, and it does not vary with depth or Kinect device.

Lens distortion and focal length

For accurate work in many computer vision fields, such as visual SLAM, it is important to have cameras that are as close to the ideal pinhole projection model as possible. The two primary culprits in real cameras are lens distortion and de-centering.

Typical calibration procedures with a planar target, such as those in OpenCV, can effectively model lens distortion and de-centering. After calibration, the reprojection error of a camera is typically about 0.1 to 0.2 pixels. The reprojection error is a measure of the deviation of the camera from the ideal model: given known 3D points, how does the projection onto the image differ from that of the ideal pinhole camera? The reprojection error is given by the RMS error of all the calibration target points.
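For an ideal pinhole camera with focal lengths fx, fy and principal point (cx, cy), the RMS reprojection error can be sketched as follows. This sketch deliberately omits the distortion terms that a real calibration (for example OpenCV's) also models, and the function names are hypothetical.

```python
import math

# Sketch: RMS reprojection error against an ideal pinhole model.
# ASSUMPTION: no lens distortion; points are already in camera coordinates.

def project(point, fx, fy, cx, cy):
    """Ideal pinhole projection of a 3D point (X, Y, Z) to pixel (u, v)."""
    x, y, z = point
    return fx * x / z + cx, fy * y / z + cy


def rms_reprojection_error(points_3d, observed_2d, fx, fy, cx, cy):
    """RMS distance in pixels between observed corners and ideal projections."""
    if not points_3d or len(points_3d) != len(observed_2d):
        raise ValueError("need matching, non-empty point lists")
    sq = 0.0
    for p, (u_obs, v_obs) in zip(points_3d, observed_2d):
        u, v = project(p, fx, fy, cx, cy)
        sq += (u - u_obs) ** 2 + (v - v_obs) ** 2
    return math.sqrt(sq / len(points_3d))
```

If every observed corner is off by (0.3, 0.4) pixels from its ideal projection, the RMS error is 0.5 pixels.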

We calibrated the IR and RGB cameras of several Kinect devices. Typical reprojection errors are given in the following table.

| camera | original (pixels) | calibrated (pixels) |
|---|---|---|
| IR | 0.34 | 0.17 |
| RGB | 0.53 | 0.16 |

What can we tell from these values? First, it is likely that neither the IR nor RGB camera has been calibrated for distortion correction in the images that are sent from the device. If this is the case, then the second conclusion is that the lenses are very good already, since the original reprojection error is low (compared to, for example, typical webcams).

For computer vision purposes, we often try to estimate image features to sub-pixel precision. Hence, it is useful to calibrate the RGB camera to reduce reprojection error, typically by a factor of 3. The case for the IR camera is less certain. The depth image is already smoothed over a neighborhood of pixels by the correlation window, so being off by a third of a pixel won't change the depth result by very much. Depth boundaries are also very uncertain, as can be seen by the ragged edges of foreground objects in a typical Kinect disparity image.

Focal lengths

The focal length of the RGB camera is somewhat smaller than that of the IR camera, giving a larger field of view. Focal lengths and field of view for a typical Kinect camera are given in this table.

| camera | focal length (pixels) | FOV (degrees) |
|---|---|---|
| IR | 580 | 57.8 |
| RGB | 525 | 62.7 |

The RGB camera has a somewhat wider FOV than the IR camera.
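The FOV values in the table follow from the focal lengths through the pinhole relation FOV = 2*atan(W / (2f)). This is a sketch assuming the table reports the horizontal FOV of a 640-pixel-wide image with the principal point at its center; under those assumptions it reproduces 57.8 and 62.7 degrees to one decimal place. `horizontal_fov_deg` is a hypothetical name.

```python
import math

# Sketch: horizontal field of view from focal length.
# ASSUMPTIONS: 640-pixel image width, principal point at the image centre.

def horizontal_fov_deg(f_pixels, width_pixels=640):
    """Horizontal FOV in degrees for a pinhole camera of focal length f_pixels."""
    return math.degrees(2.0 * math.atan(width_pixels / (2.0 * f_pixels)))
```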

IR to RGB camera calibration

The IR and RGB cameras are separated by a small baseline. Using chessboard target data and OpenCV's stereo calibration algorithm, we can determine the 6 DOF transform between them. To do this, we first calibrate the individual cameras, using a zero distortion model for the IR camera, and a distortion and de-centering model for the RGB camera. Then, with all internal camera parameters fixed (including focal length), we calibrate the external transform between the two (OpenCV's stereoCalibrate function). Typical translation values (x, y, z, in meters) are

-0.0254 -0.00013 -0.00218

The measured distance between IR and RGB lens centers is about 2.5 cm, which corresponds to the X distance above. The Y and Z offsets are very small.

In the three devices we tested, the rotation component of the transform was also very small. Typical rotations were about 0.5 degrees, which translates to an offset of roughly 1 cm at 1.5 m.

Putting it all together

At this point all the individual steps necessary for a good calibration between depth and the RGB image are in place. The device-specific parameters are:

| parameter | description |
|---|---|
| doff | raw disparity offset |
| fir | focal length of IR camera |
| b | baseline between IR camera and projector |
| frgb | focal length of RGB camera |
| k1, k2, cx, cy | distortion and de-centering parameters of the RGB camera |
| R, t | transform from IR to RGB frame |

Here are the steps to transform from the raw disparities of the depth image to the rectified RGB image:

[u,v,kd]ir -- (doff, fir, b) --> [XYZ]ir -- (R,t) --> [XYZ]rgb -- (frgb) --> [u,v]rgb
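The chain above can be written out step by step. This is a minimal sketch: the IR principal point (cx_ir, cy_ir), which the parameter table does not list, is an extra input; R and t are assumed to map IR coordinates to RGB coordinates as X_rgb = R*X_ir + t; lens distortion and the small IR-to-depth pixel offset are ignored. `depth_pixel_to_rgb` is a hypothetical name.

```python
# Sketch of [u,v,kd]ir -> [XYZ]ir -> [XYZ]rgb -> [u,v]rgb.
# ASSUMPTIONS: X_rgb = R * X_ir + t; cx_ir/cy_ir supplied by the caller;
# rectified RGB image (no distortion); IR-to-depth pixel offset ignored.

def depth_pixel_to_rgb(u, v, kd, doff, f_ir, b, cx_ir, cy_ir,
                       R, t, f_rgb, cx_rgb, cy_rgb):
    """Map IR pixel (u, v) with raw disparity kd to rectified RGB (u, v).

    R is a 3x3 rotation given as a list of rows; t is a 3-vector in metres.
    """
    # [u, v, kd]ir --(doff, fir, b)--> [X, Y, Z]ir
    z = b * f_ir / ((doff - kd) / 8.0)
    x = (u - cx_ir) * z / f_ir
    y = (v - cy_ir) * z / f_ir
    # [X, Y, Z]ir --(R, t)--> [X, Y, Z]rgb
    ir = (x, y, z)
    xr, yr, zr = (sum(R[i][j] * ir[j] for j in range(3)) + t[i] for i in range(3))
    # [X, Y, Z]rgb --(frgb)--> [u, v]rgb
    return f_rgb * xr / zr + cx_rgb, f_rgb * yr / zr + cy_rgb
```

With an identity rotation, zero translation, and identical intrinsics, each IR pixel maps back onto itself, which is a quick sanity check of the chain.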

The parameters in parentheses indicate which ones are necessary for the transformation. In the kinect_camera package, the intermediate steps are combined into one 3x4 matrix D that operates on homogeneous coordinates:

[u,v,w]rgb = D*[u,v,kd,1]ir
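One way to build such a matrix is to compose three pieces: a 4x4 matrix Q that takes [u, v, kd, 1]ir to a homogeneous IR point, using z = 8*b*fir / (doff - kd); the 3x4 rigid transform [R | t]; and the 3x3 RGB projection matrix. Their product has three rows and four columns. This is a sketch of the idea, not the kinect_camera package's code; the function names, the IR principal point inputs, and the X_rgb = R*X_ir + t convention are assumptions.

```python
# Sketch: compose D = K_rgb * [R | t] * Q so that [u, v, w]rgb = D * [u, v, kd, 1]ir.
# ASSUMPTIONS: X_rgb = R * X_ir + t; no distortion; hypothetical function names.

def matmul(a, b):
    """Multiply two matrices given as lists of rows."""
    return [[sum(a[i][k] * b[k][j] for k in range(len(b))) for j in range(len(b[0]))]
            for i in range(len(a))]


def build_D(doff, f_ir, b, cx_ir, cy_ir, R, t, f_rgb, cx_rgb, cy_rgb):
    """Return the 3x4 matrix D mapping [u, v, kd, 1]ir to [u, v, w]rgb."""
    # Q: [u, v, kd, 1] -> [u - cx, v - cy, f, (doff - kd) / (8b)], i.e. the
    # homogeneous IR point whose dehomogenized Z is 8*b*f / (doff - kd).
    Q = [[1.0, 0.0, 0.0, -cx_ir],
         [0.0, 1.0, 0.0, -cy_ir],
         [0.0, 0.0, 0.0, f_ir],
         [0.0, 0.0, -1.0 / (8.0 * b), doff / (8.0 * b)]]
    Rt = [list(R[i]) + [t[i]] for i in range(3)]  # [R | t], IR -> RGB frame
    K = [[f_rgb, 0.0, cx_rgb],
         [0.0, f_rgb, cy_rgb],
         [0.0, 0.0, 1.0]]                          # rectified RGB projection
    return matmul(matmul(K, Rt), Q)


def apply_D(D, u, v, kd):
    """Apply D to an IR pixel and dehomogenize to RGB pixel coordinates."""
    uu, vv, ww = (sum(row[j] * c for j, c in enumerate((u, v, kd, 1.0))) for row in D)
    return uu / ww, vv / ww
```

Dividing by w gives the RGB pixel coordinates, so a single matrix product per pixel replaces the three-step chain.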

This equation maps from u,v,kd coordinates of the IR image to u,v coordinates of the RGB image. The transform can be used in two ways:

- map the depth image to the rectified RGB image

- map RGB values to the depth image

Z-buffer

A Z-buffer is necessary to prevent pixels that are hidden by parallax from overlaying good pixels.
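A z-buffer keeps, for each target pixel, only the nearest sample that lands on it. This is a minimal sketch, assuming the depth samples have already been mapped to RGB pixel coordinates (u, v) with their depth z in the RGB frame; `reproject_with_zbuffer` is a hypothetical name, and nearest-pixel rounding without splatting is a simplification.

```python
# Sketch: z-buffered reprojection of depth samples into the RGB image.
# ASSUMPTION: samples are (u, v, z) already expressed in RGB pixel coordinates.

def reproject_with_zbuffer(points, width, height):
    """Return a height x width depth image keeping the nearest z per pixel."""
    INF = float("inf")
    zbuf = [[INF] * width for _ in range(height)]
    for u, v, z in points:
        col, row = int(round(u)), int(round(v))  # nearest target pixel
        if 0 <= col < width and 0 <= row < height and z < zbuf[row][col]:
            zbuf[row][col] = z  # a nearer surface hides the farther one
    return zbuf
```

When two samples land on the same pixel, the farther one is discarded, so a background point seen through parallax cannot overwrite the foreground surface in front of it.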

Kinect accuracy evaluation

http://www.ros.org/wiki/openni_kinect/kinect_accuracy

Kinect with zoom lens calibration

http://www.ros.org/doc/api/kinect_near_mode_calibration/html

We have created packages to calibrate a Kinect fitted with a zoom lens.