| Note: This tutorial assumes that you have completed the previous tutorial: ROS/Tutorials. For more information about the laser drivers, see the laser_drivers stack. |

| |

Introduction to Working With Laser Scanner Data

Description: This tutorial guides you through the basics of working with the data produced by a planar laser scanner (such as a Hokuyo URG or SICK laser). To learn how to actually produce or change data from laser scanners, please see the laser_drivers stack.Keywords: lasers

Tutorial Level: INTERMEDIATE

Next Tutorial: Applying filters to laser scans Assembling (aggregating) single scan lines into a composite cloud

Contents

When you're done with this tutorial you should be able to:

- Visualize the laser scan in rviz.

Understand the data that the laser scan (laser_scan message) includes.

- Understand how to convert the laser scan into a more intuitive and useful point cloud (point_cloud message).

Generating Laser Scan Data

To try the tools described in this tutorial, you'll need a source of laser scanner data. If you have a laser scanner available with a driver, you can go ahead and use it. Otherwise, we've provided a source of laser data for you. ROS can record sensor data (actually any ROS system data) into a bag file. You can download a bag file containing laser data here (save link as. That file is no longer accessible, but http://classes.engineering.wustl.edu/cse550/a02.php links to http://classes.engineering.wustl.edu/cse550/data/Mapping1.bag which has a LaserScan and may be a good substitute).

To play back the bag, first run a roscore:

$ roscore

Then play back the bag:

$ rosbag play laser.bag

The bag contains four topics, two of which you'll need for this tutorial:

/tf [tf/tfMessage]

/tilt_scan [sensor_msgs/LaserScan]

The /tilt_scan topic was recorded from a planar laser mounted on a tilting platform on the PR2 robot. The /tf topic contains the robot transforms.

Visualizing Your Laser Scan

Your laser scan will be published as a sensor_msgs/LaserScan.msg on a hopefully well-named topic (like /tilt_scan above). You can use rostopic to find which topic your laser is publishing on:

rostopic list -v | grep sensor_msgs/LaserScan

In laser.bag, there is one scanner data stream, so the output is:

* /tilt_scan [sensor_msgs/LaserScan] 1 publisher

To visualize a scan, start rviz and add a new display panel (follow the rviz instructions here) subscribed to your scanner topic and the message type sensor_msgs/LaserScan.

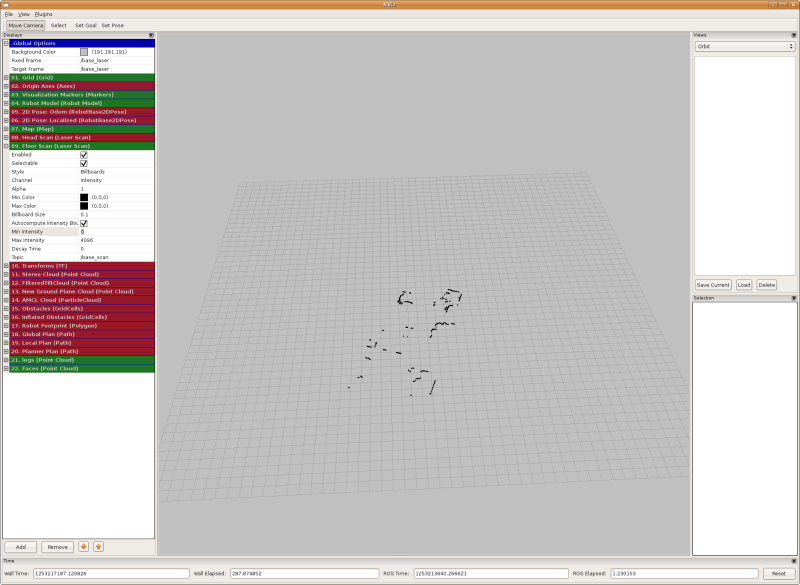

If you visualize the /tilt_scan topic above, the display should look something like this:

The Laser Scan Message

Each laser scan is a single scan line. The sensor_msgs/LaserScan message contains the following information:

# Single scan from a planar laser range-finder Header header # stamp: The acquisition time of the first ray in the scan. # frame_id: The laser is assumed to spin around the positive Z axis # (counterclockwise, if Z is up) with the zero angle forward along the x axis float32 angle_min # start angle of the scan [rad] float32 angle_max # end angle of the scan [rad] float32 angle_increment # angular distance between measurements [rad] float32 time_increment # time between measurements [seconds] - if your scanner # is moving, this will be used in interpolating position of 3d points float32 scan_time # time between scans [seconds] float32 range_min # minimum range value [m] float32 range_max # maximum range value [m] float32[] ranges # range data [m] (Note: values < range_min or > range_max should be discarded) float32[] intensities # intensity data [device-specific units]. If your # device does not provide intensities, please leave the array empty.

The above message tells you everything you need to know about a scan. Most importantly, you have the angle of each hit and its distance (range) from the scanner. If you want to work with raw range data, then the above message is all you need. However, it might be more convenient to work with points in 3D Cartesian (x,y,z) format instead. Luckily, there are simple ways to convert a laser scan to a point cloud.

Converting a Laser Scan to a Point Cloud

To get your laser scan as a set of 3D Cartesian (x,y,z) points, you'll need to convert it to a point cloud message. The sensor_msgs/PointCloud message looks like this:

Header header geometry_msgs/Point32[] points # Vector of 3d points (x,y,z) ChannelFloat32[] channels # Space for extra information like color.

The points array contains the point cloud in the format we want. To convert the laser scan to a point cloud (in a different frame), we'll use the laser_geometry::LaserProjector class (from the laser_geometry package). The laser_geometry package provides two functions to convert a scan into a point cloud: projectLaser and transformLaserScanToPointCloud.

projectLaser does a straight projection from range-angle to 3D (x,y,z), without using tf. This means two things: 1) your point cloud will be in the same frame as the scan, and 2) your point cloud will look very strange if the laser or robot were moving while the scan was being taken. The advantage of projectLaser, however, is its speed.

The function you'll want to use the majority of the time is the handy transformLaserScanToPointCloud function, which uses tf to transform your laser scan into a point cloud in another (preferably fixed) frame. transformLaserScanToPointCloud uses tf to get a transform for the first and last hits in the laser scan and then interpolates to get all the transforms inbetween. This is much more accurate (although not absolutely perfect) than using projectLaser and tf::transformPointCloud() if the laser was moving in the world.

You'll likely want the frame for the point cloud to be a fixed frame, that is a frame that's not moving over time. If your robot is stationary, something like the base_link might be a good choice. If your robot is moving, choose a stationary map frame. That way, point clouds from one time are comparable to point clouds at another time (or any other data in your system).

Here's a code snippet that converts a laser scan into a point cloud in the base_link frame:

1 laser_geometry::LaserProjection projector;

2 tf::TransformListener listener;

3

4 void scanCallback (const sensor_msgs::LaserScan::ConstPtr& scan_in)

5 {

6 sensor_msgs::PointCloud cloud;

7 projector.transformLaserScanToPointCloud("base_link",*scan_in, cloud,listener);

8

9 // Do something with cloud.

10 }

Notice that you need to use tf to transform the scan into a point cloud in another frame. As you learned in the tf message filters tutorial, you should always use a tf::MessageFilter when using tf with sensor data. Why? Despite the fact that your node has received the laser scan message, tf might not have received all of the information it needs to transform it. By using a tf::MessageFilter, you are assured that the laser scan can be converted into the desired frame when the callback starts.

Despite using the tf::MessageFilter, it is recommended practice to wrap your usage of tf in a try-catch loop. Although the transform between the laser frame and the desired point cloud frame existed at the beginning of the callback, there is no guarantee that it still exists when you call transformLaserScanToPointCloud.

Here is a more complete version of the code snippet above that uses a message filter and a try-catch loop, and publishes the resulting cloud:

1 #include "ros/ros.h"

2 #include "tf/transform_listener.h"

3 #include "sensor_msgs/PointCloud.h"

4 #include "tf/message_filter.h"

5 #include "message_filters/subscriber.h"

6 #include "laser_geometry/laser_geometry.h"

7

8 class LaserScanToPointCloud{

9

10 public:

11

12 ros::NodeHandle n_;

13 laser_geometry::LaserProjection projector_;

14 tf::TransformListener listener_;

15 message_filters::Subscriber<sensor_msgs::LaserScan> laser_sub_;

16 tf::MessageFilter<sensor_msgs::LaserScan> laser_notifier_;

17 ros::Publisher scan_pub_;

18

19 LaserScanToPointCloud(ros::NodeHandle n) :

20 n_(n),

21 laser_sub_(n_, "base_scan", 10),

22 laser_notifier_(laser_sub_,listener_, "base_link", 10)

23 {

24 laser_notifier_.registerCallback(

25 boost::bind(&LaserScanToPointCloud::scanCallback, this, _1));

26 laser_notifier_.setTolerance(ros::Duration(0.01));

27 scan_pub_ = n_.advertise<sensor_msgs::PointCloud>("/my_cloud",1);

28 }

29

30 void scanCallback (const sensor_msgs::LaserScan::ConstPtr& scan_in)

31 {

32 sensor_msgs::PointCloud cloud;

33 try

34 {

35 projector_.transformLaserScanToPointCloud(

36 "base_link",*scan_in, cloud,listener_);

37 }

38 catch (tf::TransformException& e)

39 {

40 std::cout << e.what();

41 return;

42 }

43

44 // Do something with cloud.

45

46 scan_pub_.publish(cloud);

47

48 }

49 };

50

51 int main(int argc, char** argv)

52 {

53

54 ros::init(argc, argv, "my_scan_to_cloud");

55 ros::NodeHandle n;

56 LaserScanToPointCloud lstopc(n);

57

58 ros::spin();

59

60 return 0;

61 }

Try creating the node above, publishing the point cloud and visualizing the point cloud in rviz. In rviz, this new cloud will look exactly the same as the original laser scan.

You might note that you're catching a lot of tf errors. This reinforces the fact that despite the use of message filters you still need to use a try-catch loop. Don't give up on message filters, though, without them you would never successfully transform the cloud! You can increase the odds of correctly transforming the cloud by giving the message filter a tolerance:

laser_notifier_->setTolerance(ros::Duration(0.01));

In this way, the transformation will still exist 0.01seconds after the callback starts.

Conclusions

You now know how to listen to data produced by a laser scanner, visualize the data, and transform that data into a point cloud of 3D Cartesian points (x,y,z).

Video Tutorials

The following video presents a small tutorials explaining sone background information about laser scanners in ROS.