
MDM Concepts

Description: This page provides an overview of the concepts underlying MDM.

Keywords: MDM, concepts

Tutorial Level: INTERMEDIATE

Next Tutorial: Running the MDM Demo

At its core, MDM is organized into a set of Layers, which embody the components that are involved in the decision making loop of a generic MDP-based agent:

- The System Abstraction Layer – This is the part of MDM that constantly maps the sensorial information of an agent to the constituent abstract representations of a (PO)MDP model. This layer can be implemented, in turn, by either a State Layer or an Observation Layer (or both), depending on the observability of the system;

- The Control Layer – Contains the implementation of the policy of an agent, and performs essential updates after a decision is taken (e.g. belief updates);

- The Action Layer – Interprets (PO)MDP actions. It associates each action with a user-definable callback function.

MDM provides users with the tools to define each of these layers in a way which suits a particular decision making problem, and manages the interface between these different components within ROS.

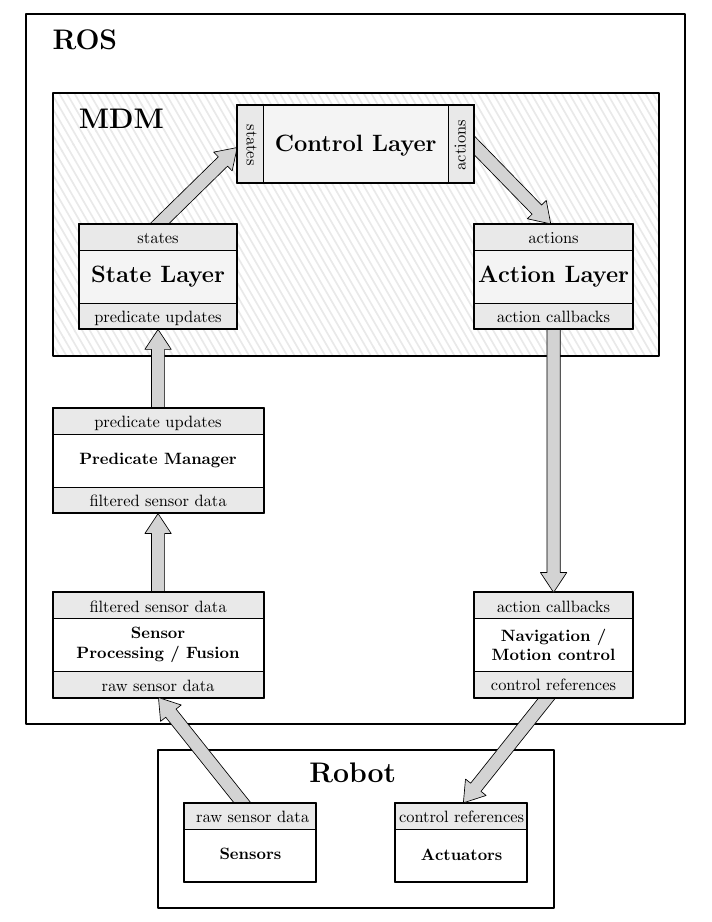

An MDM ensemble is an instantiation of a System Abstraction Layer, a Control Layer, and an Action Layer which, together, functionally implement an MDP, POMDP, or any other related decision-theoretic model. A basic MDM control loop is shown in Figure 1, which embodies a single-agent MDP. The inputs and outputs to each of the various ROS components are also made explicit.

Figure 1: The control loop for an MDP-based agent using MDM.

In the following subsections, the operation of each of these core components is described in greater detail.

The State Layer

From the perspective of MDM, and for the rest of this document, it is assumed that a robotic agent is processing or filtering its sensor data through ROS in order to estimate relevant characteristics of the system as a whole (e.g. its localization, atmospheric conditions, etc.). This information can be mapped to a space of discrete factored states by a State Layer if and only if there is no perceptual aliasing and there are no unobservable state factors. The State Layer constitutes one of the two possible System Abstraction Layers in MDM (the other being the Observation Layer).

Functionally, State Layers translate sets of logical predicates into factored, integer-valued state representations. For this purpose, MDM makes use of the concurrently developed Predicate Manager ROS package. In essence, Predicate Manager allows the user to easily define semantics for arbitrary logical conditions, which can either be directly grounded on sensor data, or defined over other predicates through propositional calculus. Predicate Manager operates asynchronously, and outputs a set of predicate updates whenever one or more predicates change their logical value (since predicates can depend on each other, multiple predicates can change simultaneously).
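
As an illustration of the idea only, the following sketch evaluates two predicates grounded on sensor values and one predicate defined over the other two, and then reports the predicates whose value changed between two readings. It does not use the Predicate Manager API; the predicate names, sensor fields and thresholds are all hypothetical.

```python
from typing import Dict

def evaluate_predicates(sensor: Dict[str, float]) -> Dict[str, bool]:
    """Evaluate a small, hypothetical set of predicates for one sensor reading."""
    values = {
        # Predicates grounded directly on (hypothetical) sensor data.
        "IsNearDoor": sensor["door_distance"] < 1.0,
        "DoorOpen": sensor["door_angle"] > 0.5,
    }
    # Predicate defined over other predicates through a propositional formula.
    values["CanEnter"] = values["IsNearDoor"] and values["DoorOpen"]
    return values

def predicate_updates(old: Dict[str, bool], new: Dict[str, bool]) -> Dict[str, bool]:
    """Return only the predicates whose logical value changed."""
    return {name: value for name, value in new.items() if old.get(name) != value}

before = evaluate_predicates({"door_distance": 2.0, "door_angle": 0.8})
after = evaluate_predicates({"door_distance": 0.5, "door_angle": 0.8})
updates = predicate_updates(before, after)
print(updates)  # {'IsNearDoor': True, 'CanEnter': True}
```

Because CanEnter depends on IsNearDoor, a single change in the sensor reading flips two predicates in the same update.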

From the perspective of ROS/MDM, predicates are seen as named logical-valued structures. Predicates can be mapped onto discrete state factors by a State Layer, in one of the following ways:

- A binary state factor can be defined by binding it directly to the value of a particular predicate.

- An integer-valued factor can be defined by an ordered set of mutually exclusive predicates, under the condition that one (and only one) predicate in the set is true at any given time. The index of the true predicate in the set is taken as the integer value of the state factor.
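
The two mappings above can be sketched as follows. This is a minimal illustration, not the MDM State Layer interface: the function names and predicate names are hypothetical, and predicates are represented as a plain dictionary of booleans.

```python
from typing import Dict, List

def binary_factor(predicates: Dict[str, bool], name: str) -> int:
    """Binary state factor bound directly to the value of one predicate."""
    return int(predicates[name])

def integer_factor(predicates: Dict[str, bool], ordered_names: List[str]) -> int:
    """Integer factor: index of the single true predicate in an ordered set."""
    true_indices = [i for i, n in enumerate(ordered_names) if predicates[n]]
    if len(true_indices) != 1:
        # The set must be mutually exclusive, with exactly one true predicate.
        raise ValueError("exactly one predicate in the set must be true")
    return true_indices[0]

# Hypothetical predicate values for a robot located in the hall.
predicates = {"IsInKitchen": False, "IsInHall": True, "IsInLab": False, "DoorOpen": True}
state = (
    integer_factor(predicates, ["IsInKitchen", "IsInHall", "IsInLab"]),
    binary_factor(predicates, "DoorOpen"),
)
print(state)  # (1, 1)
```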

Also see Implementing a State Layer.

The Observation Layer

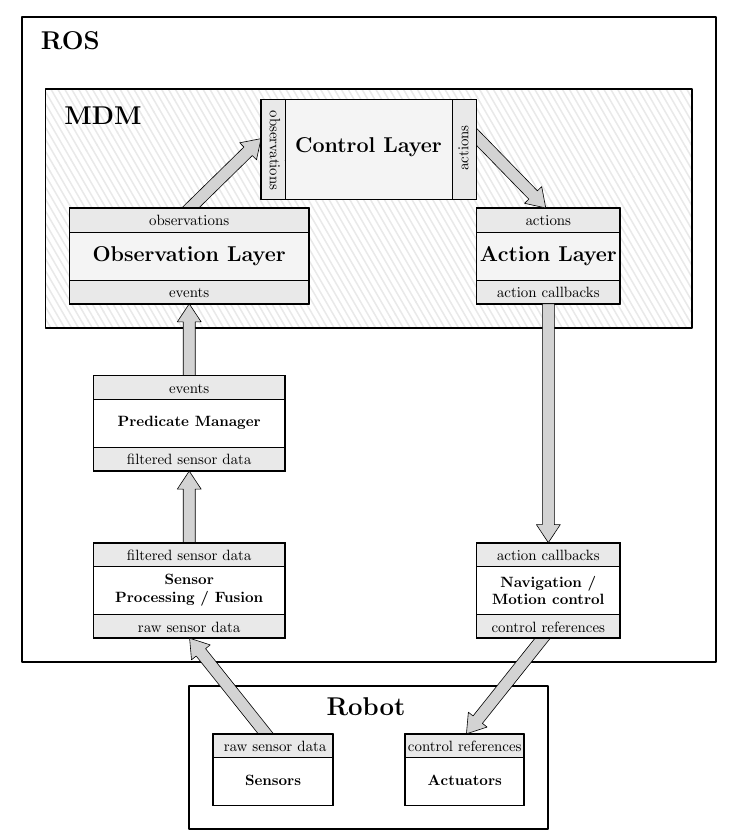

In the partially observable case, sensorial information should be mapped to a space of discrete factored observations, by an Observation Layer. The resulting decision making loop, which can be used for POMDP-driven agents, is shown in Figure 2.

Figure 2: The basic control loop for a POMDP-based agent using MDM.

The most significant difference between this abstraction layer and a fully observable State Layer is that, while in a State Layer states are mapped directly from assignments of persistent predicate values, in an Observation Layer observations are mapped from instantaneous events. The rationale is that an observation symbolizes a relevant occurrence in the system, typically associated with an underlying (possibly hidden) state transition. This event is typically captured by the system as a change in some conditional statement. The semantics of events, therefore, are defined over instantaneous conditional changes, as opposed to persistent conditional values.

The Predicate Manager package also makes it possible to define named events. These can be defined either as propositional formulas over existing predicates, in which case the respective event is triggered whenever that formula becomes true; or directly as conditions over other sources of data (e.g. a touch sensor signal).
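
The difference between a persistent predicate value and an event triggered when a formula becomes true can be sketched as a rising-edge detector. The code below is an illustration only and does not reflect how Predicate Manager implements events; the predicate names are hypothetical, and the formula is assumed to be false before the first snapshot.

```python
from typing import Callable, Dict, Iterable, List

Predicates = Dict[str, bool]

def detect_events(snapshots: Iterable[Predicates],
                  formula: Callable[[Predicates], bool]) -> List[int]:
    """Return the indices of the snapshots at which `formula` becomes true."""
    fired = []
    previous = False  # assumption: the formula counts as false before any data
    for i, snapshot in enumerate(snapshots):
        current = formula(snapshot)
        if current and not previous:
            fired.append(i)  # false -> true transition: the event fires once
        previous = current
    return fired

def person_found(p: Predicates) -> bool:
    """Hypothetical propositional formula over two predicates."""
    return p["SeesPerson"] and not p["IsMoving"]

history = [
    {"SeesPerson": False, "IsMoving": True},
    {"SeesPerson": True, "IsMoving": True},
    {"SeesPerson": True, "IsMoving": False},   # formula becomes true
    {"SeesPerson": True, "IsMoving": False},   # stays true: no new event
    {"SeesPerson": False, "IsMoving": False},
    {"SeesPerson": True, "IsMoving": False},   # becomes true again
]
event_times = detect_events(history, person_found)
print(event_times)  # [2, 5]
```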

Observation spaces can also be factored. Observation factors are always associated with at least two events, and so their definition is similar to that of integer-valued state factors.

Note that, although the definition of event used here implies a natural asynchrony (events occur randomly in time), this does not mean that synchronous decision making under partial observability cannot be implemented through MDM. As will be seen, the Control Layer alone is responsible for defining the execution strategy (synchronous or asynchronous) of a given implementation.

As in the case of the State Layer, an Observation Layer always outputs the joint value of the observation factors that it contains.
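
One common way to express a joint value is to flatten the factor values into a single index. The sketch below uses a mixed-radix encoding in which the first factor is the most significant; this ordering is an assumption made for illustration and is not taken from the MDM source, and the function name is hypothetical.

```python
from typing import Sequence

def joint_index(values: Sequence[int], sizes: Sequence[int]) -> int:
    """Flatten factor values into one index (first factor most significant)."""
    if len(values) != len(sizes):
        raise ValueError("one value is required per factor")
    index = 0
    for value, size in zip(values, sizes):
        if not 0 <= value < size:
            raise ValueError("factor value out of range")
        index = index * size + value
    return index

# Three hypothetical factors with 2, 2 and 3 possible values (12 joint values).
joint = joint_index([1, 0, 2], [2, 2, 3])
print(joint)  # 8
```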

Also see Implementing an Observation Layer.

The Control Layer

The Control Layer is responsible for parsing the policy of an agent or team of agents, and providing the appropriate response to an input state or observation, in the form of a symbolic action. The internal operation of the Control Layer depends on the decision theoretic framework which is selected by the user as a model for a particular system. For each of these frameworks, the Control Layer functionality is implemented by a suitably defined (multi)agent controller.

Currently, MDM provides ready-to-use controllers for MDPs and POMDPs operating according to deterministic policies, which are assumed to be computed outside of ROS/MDM (using, for example, MADP). For POMDPs, the Control Layer also performs belief updates internally, if desired. Consequently, in such a case, the stochastic model of the problem (its transition and observation probabilities) must typically also be passed as an input to the controller (an exception is discussed in the special use cases of MDM). The system model is also used to validate the number of states/actions/observations defined in the System Abstraction and Action Layers.
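
For reference, the standard discrete POMDP belief update that such a controller needs the model for can be sketched as below. This is a textbook Bayes filter written with plain lists, not the MDM or MADP implementation; the array layout of `T` and `O` and the example probabilities are assumptions chosen for illustration.

```python
from typing import List

def belief_update(belief: List[float], action: int, observation: int,
                  T: List[List[List[float]]],
                  O: List[List[List[float]]]) -> List[float]:
    """Standard belief update: b'(s') is proportional to
    O(o | s', a) * sum_s T(s' | s, a) * b(s).

    Assumed layout: T[a][s][s2] = P(s2 | s, a) and O[a][s2][o] = P(o | s2, a).
    """
    n = len(belief)
    unnormalized = [
        O[action][s2][observation]
        * sum(T[action][s][s2] * belief[s] for s in range(n))
        for s2 in range(n)
    ]
    total = sum(unnormalized)
    if total == 0.0:
        raise ValueError("observation has zero probability under the model")
    return [p / total for p in unnormalized]

# Hypothetical model with two states, one action and two observations.
T = [[[0.9, 0.1], [0.2, 0.8]]]
O = [[[0.8, 0.2], [0.3, 0.7]]]
new_belief = belief_update([0.5, 0.5], action=0, observation=0, T=T, O=O)
print(new_belief)
```

The update requires both the transition and the observation probabilities, which is why the model has to be supplied to the controller whenever beliefs are maintained internally.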

MDM Control Layers use the MADP Toolbox extensively to support their functionality. Their input files (policies and, if needed, the model specification) can be defined in any MADP-compatible file type, and all decision-theoretic frameworks which are supported by MADP can potentially be implemented as MDM Control Layers. The MADP documentation describes the supported file types for the description of MDP-based models, and the corresponding policy representation for each respective framework.

The Control Layer of an agent also defines its real-time execution scheme. This can be either synchronous or asynchronous. Synchronous execution forces an agent to take actions at a fixed, pre-defined rate; in asynchronous execution, actions are selected immediately after an input state or observation is received by the controller. All agent controllers can be remotely started or stopped at run-time through ROS service calls. This allows the execution of an MDM ensemble to be itself abstracted as an “action”, which in turn can be used to establish hierarchical dependencies between decision-theoretic processes.
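
The difference between the two execution schemes can be illustrated with a small offline simulation over timestamped inputs. This is only a sketch: the function names are hypothetical, and the assumption that a synchronous controller acts on the most recently received state at each tick is made for illustration rather than taken from the MDM source.

```python
from typing import Callable, List, Tuple

Inputs = List[Tuple[float, int]]  # (arrival time in seconds, state)

def asynchronous_decisions(inputs: Inputs,
                           policy: Callable[[int], str]) -> List[Tuple[float, str]]:
    """One decision per received state, taken at the time the state arrives."""
    return [(t, policy(s)) for t, s in sorted(inputs)]

def synchronous_decisions(inputs: Inputs, policy: Callable[[int], str],
                          period: float, horizon: float) -> List[Tuple[float, str]]:
    """One decision per tick, using the latest state received so far."""
    pending = sorted(inputs)
    decisions, latest, i, k = [], None, 0, 1
    while k * period <= horizon:
        tick = k * period
        while i < len(pending) and pending[i][0] <= tick:
            latest = pending[i][1]  # keep only the most recent state
            i += 1
        if latest is not None:
            decisions.append((tick, policy(latest)))
        k += 1
    return decisions

policy = lambda s: ["wait", "go"][s]          # hypothetical deterministic policy
inputs = [(0.2, 0), (0.5, 1), (2.7, 0)]
async_out = asynchronous_decisions(inputs, policy)
sync_out = synchronous_decisions(inputs, policy, period=1.0, horizon=3.0)
print(async_out)  # [(0.2, 'wait'), (0.5, 'go'), (2.7, 'wait')]
print(sync_out)   # [(1.0, 'go'), (2.0, 'go'), (3.0, 'wait')]
```

In this run the synchronous scheme never acts on the state received at 0.2 s, since it is overwritten before the first tick, while the asynchronous scheme reacts to every input.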

Also see Implementing a Control Layer.

The Action Layer

Symbolic actions can be associated with task-specific functions through an MDM Action Layer. In this layer, the user univocally associates each action of a given (PO)MDP with a target function (an action callback). That callback is triggered whenever a Control Layer publishes its respective action. An Action Layer can also interpret commands from a Control Layer as joint actions, and execute them under the scope of a particular agent.
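
The one-to-one association between actions and callbacks can be sketched as a dispatch table. The class and method names below are hypothetical and do not correspond to the MDM Action Layer API; the callbacks only record a label so that the example stays self-contained.

```python
from typing import Callable, Dict, List

class ActionDispatcher:
    """Associates each action index with exactly one callback."""

    def __init__(self) -> None:
        self._callbacks: Dict[int, Callable[[], None]] = {}

    def add_action(self, action: int, callback: Callable[[], None]) -> None:
        if action in self._callbacks:
            # Keep the association unambiguous: one callback per action.
            raise ValueError(f"action {action} already has a callback")
        self._callbacks[action] = callback

    def on_action(self, action: int) -> None:
        """Called whenever the controller publishes an action."""
        self._callbacks[action]()

log: List[str] = []
dispatcher = ActionDispatcher()
dispatcher.add_action(0, lambda: log.append("goto_kitchen"))   # hypothetical
dispatcher.add_action(1, lambda: log.append("greet_person"))   # hypothetical
for published_action in [1, 0, 1]:
    dispatcher.on_action(published_action)
print(log)  # ['greet_person', 'goto_kitchen', 'greet_person']
```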

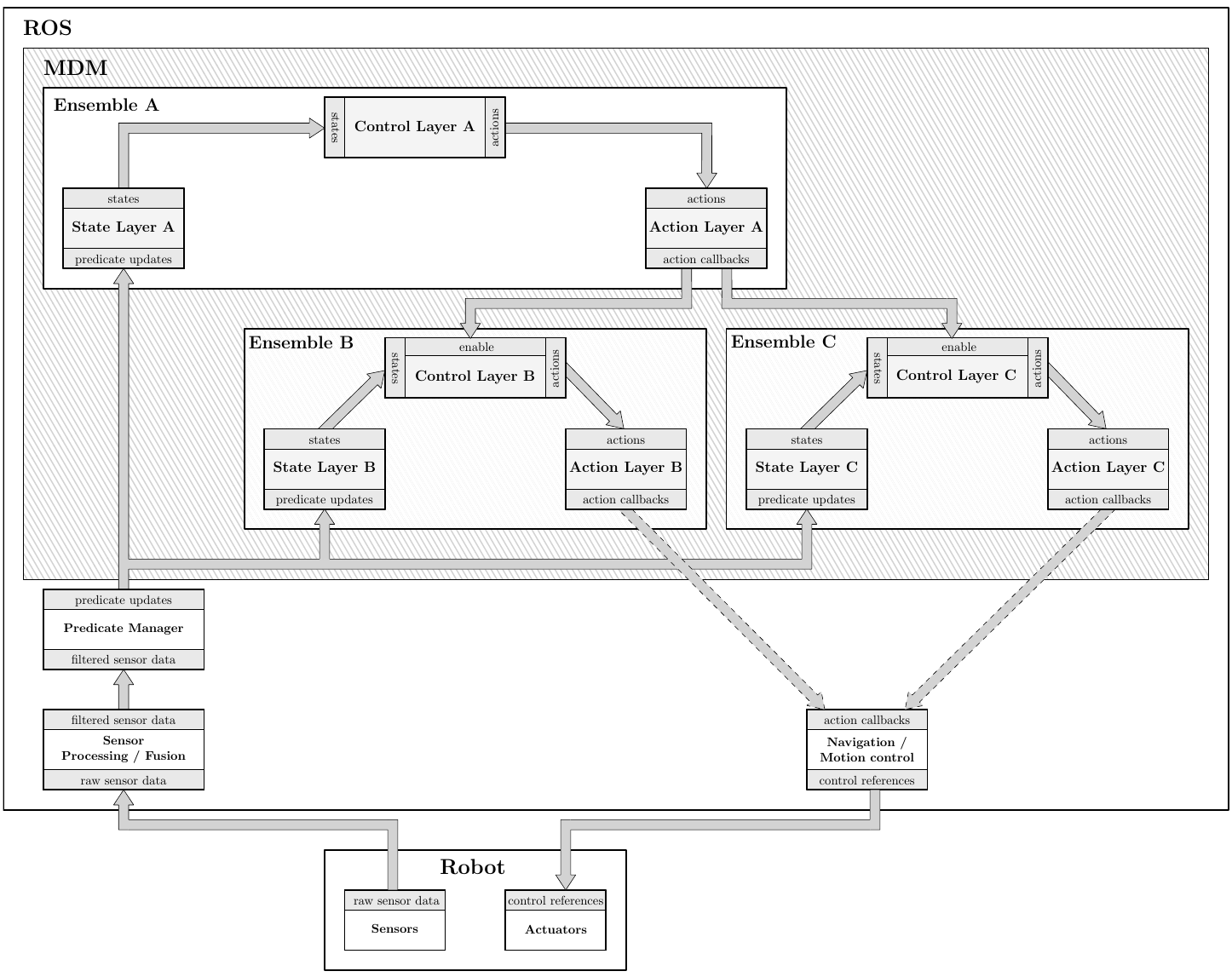

The general purpose of an action callback is to delegate the control of the robotic agent to other ROS modules outside of MDM, operating at a lower level of abstraction. For example, action callbacks can be used to send goal poses to ROS native navigation modules; or to execute scripted sets of commands for human-robot interaction. However, the Action Layer also makes it possible to abstract other MDM ensembles as actions. This feature allows users to model arbitrarily complex hierarchical dependencies between MDPs/POMDPs (see Figure 3 for an example of the resulting node layout and respective dependencies).

Figure 3: An example of the organization of a hierarchical MDP, as seen by MDM. The actions from Action Layer A enable / disable the controllers in ensembles B and C. When a controller is disabled, its respective State Layer still updates its information, but the controller does not relay any actions to its Action Layer.
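
The behaviour described in the caption can be mimicked with a small sketch in which the callbacks of a parent ensemble start and stop two child ensembles. In MDM this is done through ROS service calls; here plain method calls stand in for them, and all class, function and action names are hypothetical.

```python
from typing import Dict, List, Optional

class Ensemble:
    """Toy stand-in for a child ensemble with a start/stop switch."""

    def __init__(self, policy: Dict[int, str]) -> None:
        self.policy = policy
        self.enabled = False
        self.last_state: Optional[int] = None
        self.relayed: List[str] = []

    def start(self) -> None:
        self.enabled = True

    def stop(self) -> None:
        self.enabled = False

    def on_state(self, state: int) -> None:
        self.last_state = state          # state information is always updated
        if self.enabled:                 # actions are relayed only when enabled
            self.relayed.append(self.policy[state])

b = Ensemble({0: "b_idle", 1: "b_move"})
c = Ensemble({0: "c_idle", 1: "c_move"})

def run_b() -> None:   # callback for parent action 0
    c.stop()
    b.start()

def run_c() -> None:   # callback for parent action 1
    b.stop()
    c.start()

parent_callbacks = {0: run_b, 1: run_c}

parent_callbacks[0]()
for ensemble in (b, c):
    ensemble.on_state(1)
parent_callbacks[1]()
for ensemble in (b, c):
    ensemble.on_state(0)
print(b.relayed, c.relayed)  # ['b_move'] ['c_idle']
```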

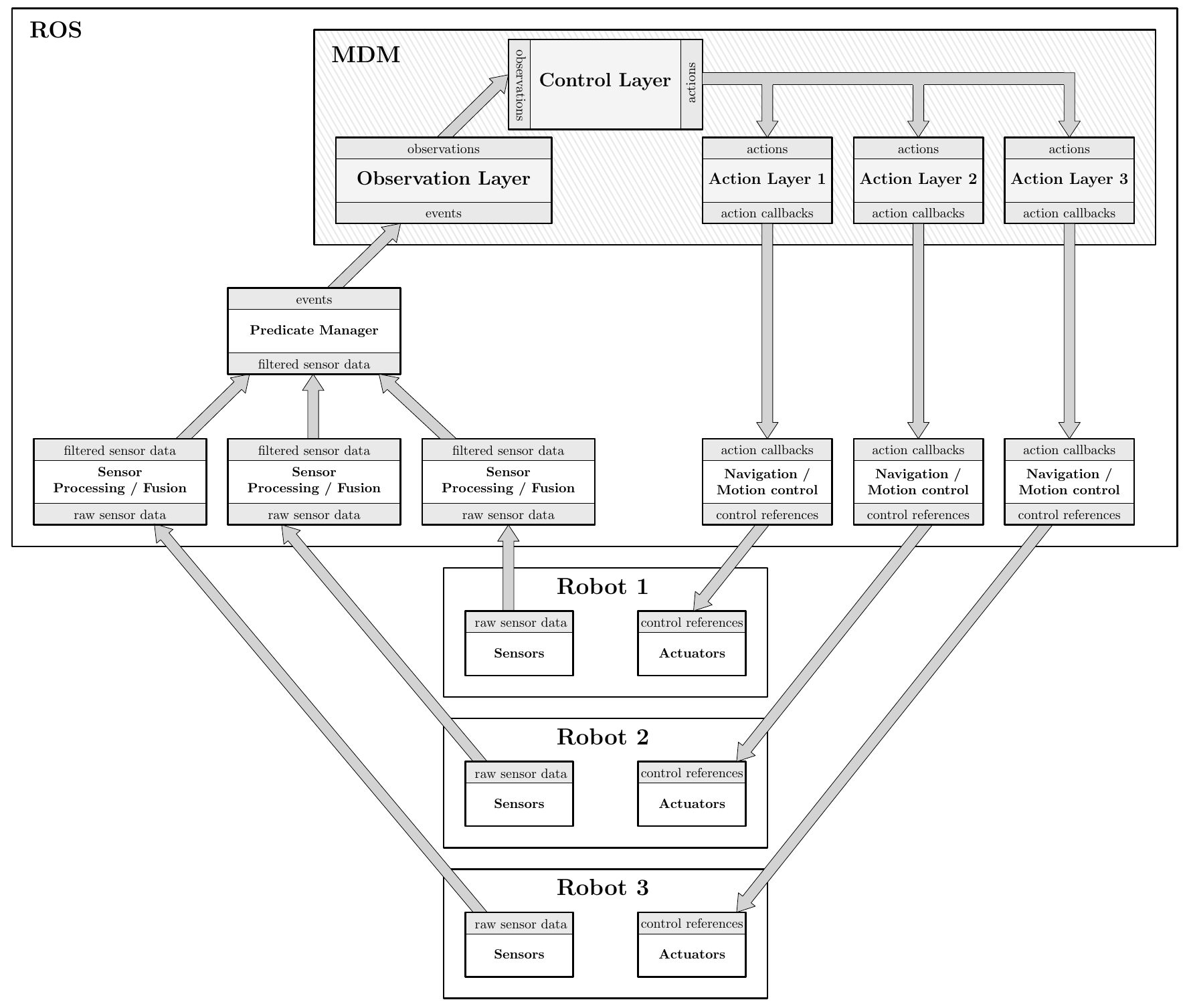

The layered organization of MDM, and the “peer-to-peer” networked paradigm of ROS, allow action execution and action selection to be decoupled across different systems in a network, if that is desired. This can be accomplished by running an agent’s Action Layer on a different network location than its respective Control Layer. For mobile robots, this means that the components of their decision making which can be computationally expensive (sensor processing / abstraction, and stochastic model parsing, for example), can be run off-board. For teams of robots, this also implies that a single Control Layer, implementing a centralized multiagent MDP/POMDP policy, can be readily connected to multiple Action Layers, one for each agent in the system (see Figure 4).

Figure 4: An MPOMDP implemented by a single MDM ensemble – in this case, there are multiple Action Layers. Each one interprets the joint actions originating from the centralized controller under the scope of a single agent. There is a single Observation Layer which provides joint observations, and a single Predicate Manager instantiation which fuses sensor data from all agents.
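
Interpreting a joint action under the scope of a single agent amounts to extracting that agent's component from the joint action. The sketch below assumes that joint actions are indexed in mixed radix with the first agent as the most significant digit; that convention and the function name are assumptions for illustration, not a description of the MDM or MADP encoding.

```python
from typing import Sequence

def individual_action(joint_action: int, agent: int, sizes: Sequence[int]) -> int:
    """Extract one agent's action from a joint action index.

    `sizes[i]` is the number of individual actions of agent i.
    """
    total = 1
    for size in sizes:
        total *= size
    if not 0 <= joint_action < total:
        raise ValueError("joint action out of range")
    # Peel off digits from the least significant (last) agent upwards.
    for i in range(len(sizes) - 1, -1, -1):
        joint_action, action = divmod(joint_action, sizes[i])
        if i == agent:
            return action
    raise IndexError("unknown agent index")

# Two hypothetical agents with 2 and 3 individual actions (6 joint actions).
sizes = [2, 3]
print(individual_action(4, agent=0, sizes=sizes))  # 1
print(individual_action(4, agent=1, sizes=sizes))  # 1
```

Each Action Layer would apply such a decoding with its own agent index and then trigger the callback bound to the resulting individual action.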

For the implementation of typical topological navigation actions (which are prevalent in applications of decision theory to robotics), MDM includes Topological Tools, an auxiliary ROS package. This package allows the user to easily define states and observations over a map of the robot’s environment, such as those obtained through ROS mapping utilities. It also allows the abstraction of that map as a labeled graph, which in turn makes it possible to easily implement context-dependent navigation actions (e.g. “Move Up”, “Move Down”) in an MDM Action Layer.
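
The idea of a labeled graph supporting context-dependent navigation actions can be sketched as follows. This does not use the Topological Tools API; the class, the place names and the edge layout are hypothetical.

```python
from typing import Dict, Optional

class TopologicalMap:
    """Labeled graph: nodes are places, edge labels are navigation actions."""

    def __init__(self) -> None:
        self._edges: Dict[str, Dict[str, str]] = {}

    def add_edge(self, source: str, label: str, target: str) -> None:
        self._edges.setdefault(source, {})[label] = target
        self._edges.setdefault(target, {})  # make sure the target node exists

    def goal_for(self, current: str, label: str) -> Optional[str]:
        """Place reached by `label` from `current`, or None if undefined."""
        return self._edges.get(current, {}).get(label)

topo = TopologicalMap()
topo.add_edge("Hall", "Move Up", "Lab")
topo.add_edge("Lab", "Move Down", "Hall")
topo.add_edge("Hall", "Move Down", "Kitchen")
topo.add_edge("Kitchen", "Move Up", "Hall")

# The same symbolic action leads to different goals depending on the context.
print(topo.goal_for("Hall", "Move Up"))     # Lab
print(topo.goal_for("Kitchen", "Move Up"))  # Hall
print(topo.goal_for("Lab", "Move Up"))      # None
```

An action callback for "Move Up" could look up the goal for the robot's current place and forward the corresponding pose to a navigation module.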

Also see Implementing an Action Layer.