Overview

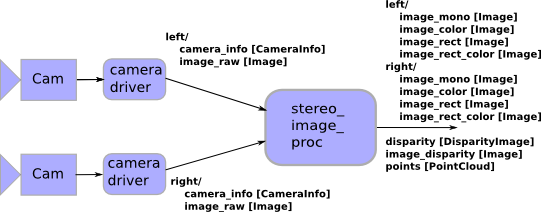

This package contains the stereo_image_proc node, which sits between the stereo camera drivers and vision processing nodes.

stereo_image_proc performs the duties of image_proc for both cameras, undistorting and colorizing the raw images. Note that for properly calibrated stereo cameras, undistortion is actually combined with rectification, transforming the images so that their scanlines line up for fast stereo processing.

stereo_image_proc will also compute disparity images from incoming stereo pairs using OpenCV's block matching algorithm. These are best inspected using stereo_view.

The node will also produce point clouds, which you can view in rviz, and process with pcl.

Stereo processing example |

|

|

|

left/image_raw, right/image_raw: The raw images from each camera. |

|

|

|

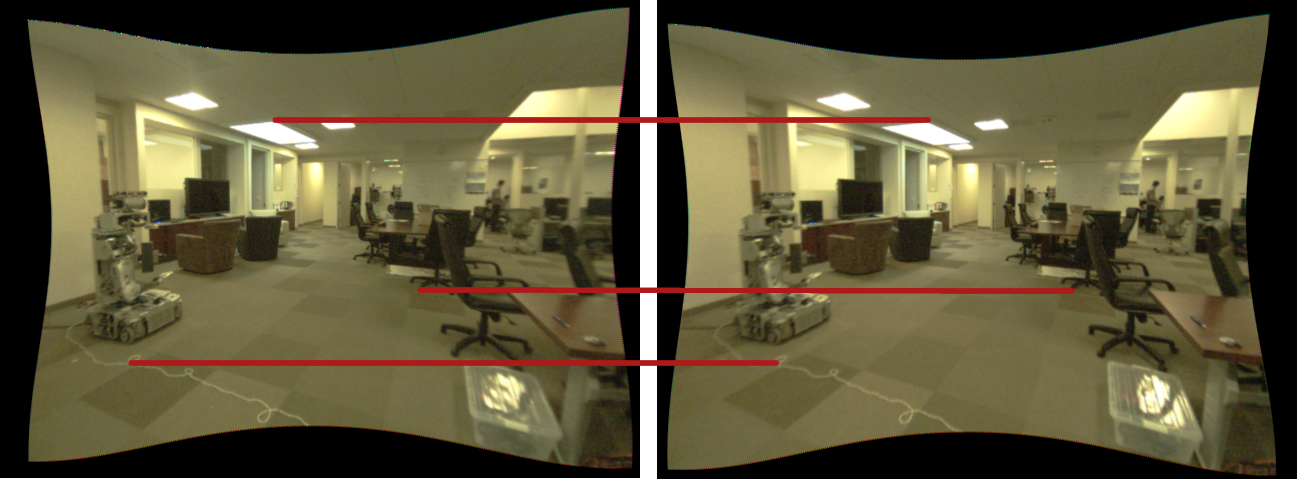

left/image_rect_color, right/image_rect_color: The rectified images from each camera. The red lines show that the same point in the real world lies on the same horizontal line in each rectified image. |

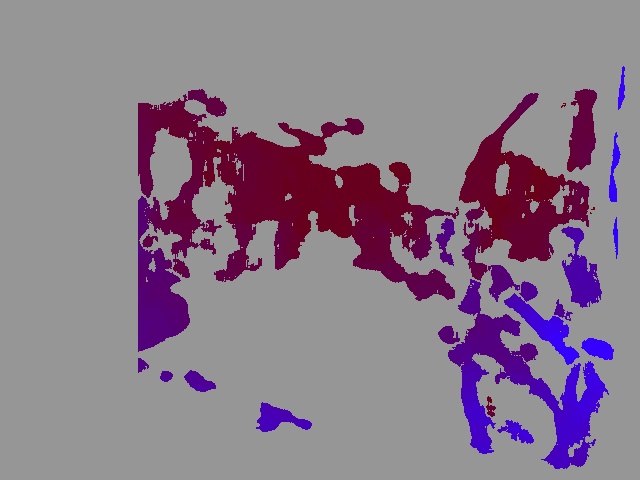

disparity: The resulting disparity map, as shown by stereo_view. |

Tutorials

- Choosing Good Stereo Parameters

This tutorial walks you through a real-world example of tuning a running stereo_image_proc instance to get the best stereo results, using stereo_view and rviz for visual feedback.

- Using OpenCV with Image Processing

A setup and initialization guide to using an OpenCV node with image_pipeline.

Also see dynamic_reconfigure to set camera and stereo parameters on line.

Quick Start

Make sure your stereo camera driver(s) are running. You can check rostopic list | grep image_raw to see the available raw image topics from compatible drivers.

To get rectified and/or colorized image streams, you need to launch an instance of stereo_image_proc. If you are running on a robot or a cart, it's probably best to run stereo_image_proc there. For example, if your stereo camera driver(s) are publishing:

/stereo/left/image_raw /stereo/left/camera_info /stereo/right/image_raw /stereo/right/camera_info

you could do:

$ ROS_NAMESPACE=stereo rosrun stereo_image_proc stereo_image_proc

Notice that we push stereo_image_proc down into the stereo namespace, in which it subscribes to the camera topics in left and right. This is functionally equivalent to running two image_proc instances in the stereo/left and stereo/right namespaces, with the addition of stereo outputs: disparity images (stereo/disparity) and point clouds (stereo/points2).

In a separate terminal (on your home machine, if you are running on a robot or cart):

$ rosrun image_view image_view image:=/stereo/left/image_rect_color

This will display a rectified color image from the left stereo camera. Alternatively, stereo_view will show you both the left and right images as well as a color-mapped disparity image:

$ rosrun image_view stereo_view stereo:=/stereo image:=image_rect_color

To view point clouds, you can use rviz.

Nodes

stereo_image_proc

Performs rectification and de-mosaicing of raw stereo camera image pairs. May also perform stereo processing to generate disparity images and point clouds. In topic names below, left and right are explicitly resolved by the node, so remapping those names effectively remaps all topics left/* and right/*.

All processing is on demand. Color processing is performed only if there is a subscriber to a color topic. Rectification is performed only if there is a subscriber to a rectified topic. Disparities and point clouds are generated only if there is a subscriber to a relevant topic. While there are no subscribers to output topics, stereo_image_proc unsubscribes from the image_raw and camera_info topics.

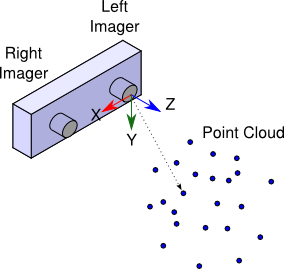

Point clouds are generated in the optical frame of the left imager (X Right, Y Down, Z out).

image_transport is used for all image publications and subscriptions.

Subscribed Topics

Left camera

- Raw image stream from the left camera.

- Left camera metadata.

Right camera

- Raw image stream from the right camera.

- Right camera metadata.

Published Topics

Left camera processed images

- Left monochrome unrectified image.

- Left monochrome rectified image.

- Left color unrectified image.

- Left color rectified image.

Right camera processed images

- Right monochrome unrectified image.

- Right monochrome rectified image.

- Right color unrectified image.

- Right color rectified image.

3D processing

- Floating point disparity image with metadata.

- Stereo point cloud with RGB color.

- Stereo point cloud with RGB color. This message type is obsoleted by sensor_msgs/PointCloud2; if possible, use the points2 topic instead.

Parameters

Disparity pre-filtering

- Normalization window size, pixels.

- Bound on normalized pixel values.

Disparity correlation

- Edge size (pixels) of the correlation window for matching. Values must be odd, in the range 5 to 255 (but with an extra performance hit for values larger than 21). Larger values have smoother disparity results, but smear out small features and depth discontinuities.

- Minimum disparity, or the offset to the disparity search window. By setting to a positive value, the cameras become more "cross-eyed" and will find objects closer to the cameras. If the cameras are "verged" (inclined toward each other), it may be appropriate to set min_disparity to a negative value. When min_disparity is greater than 0, objects at large distances will not be found.

- The size of the disparity search window (pixels). Together with min_disparity, this defines the "horopter," the 3D volume that is visible to the stereo algorithm.

Disparity post-filtering

- Filters disparity readings based on a comparison to the next-best correlation along the epipolar line. Matches where uniqueness_ratio > (best_match - next_match) / next_match are filtered out.

- Filters disparity readings based on the amount of texture in the correlation window.

- Filters disparity regions that are less than this number of pixels. For example, a value of 100 means that all regions with fewer than 100 pixels will be rejected.

- Groups disparity regions based on their connectedness. Disparities are grouped together in the same region if they are within this distance in pixels.

Synchronization

- Whether to use approximate synchronization. Set to true if the left and right cameras do not produce exactly synced timestamps.

- Size of message queue for each synchronized topic. You may need to raise this if images take significantly longer to travel over the network than camera info and/or the delay from disparity processing is too long.

Nodelets

This package contains nodelets for creating disparity images and point clouds from stereo. This allows stereo_image_proc functions to be easily combined with other nodelets, for example camera drivers or higher-level vision processing. In fact, the stereo_image_proc node simply loads a combination of nodelets.

stereo_image_proc/disparity

Performs block matching on a pair of rectified stereo images, producing a disparity image.Subscribed Topics

left/image_rect (sensor_msgs/Image)- Left monochrome rectified image stream.

- Left camera metadata.

- Right monochrome rectified image stream.

- Right camera metadata.

Published Topics

disparity (stereo_msgs/DisparityImage)- Floating point disparity image with metadata.

Parameters

~approximate_sync (bool, default: false)- Whether to use approximate synchronization. Set to true if the left and right cameras do not produce exactly synced timestamps.

- Size of message queue for each synchronized topic. You may need to raise this if images take significantly longer to travel over the network than camera info and/or the delay from disparity processing is too long.

Parameters

Disparity pre-filtering

- Normalization window size, pixels.

- Bound on normalized pixel values.

Disparity correlation

- Edge size (pixels) of the correlation window for matching. Values must be odd, in the range 5 to 255 (but with an extra performance hit for values larger than 21). Larger values have smoother disparity results, but smear out small features and depth discontinuities.

- Minimum disparity, or the offset to the disparity search window. By setting to a positive value, the cameras become more "cross-eyed" and will find objects closer to the cameras. If the cameras are "verged" (inclined toward each other), it may be appropriate to set min_disparity to a negative value. When min_disparity is greater than 0, objects at large distances will not be found.

- The size of the disparity search window (pixels). Together with min_disparity, this defines the "horopter," the 3D volume that is visible to the stereo algorithm.

Disparity post-filtering

- Filters disparity readings based on a comparison to the next-best correlation along the epipolar line. Matches where uniqueness_ratio > (best_match - next_match) / next_match are filtered out.

- Filters disparity readings based on the amount of texture in the correlation window.

- Filters disparity regions that are less than this number of pixels. For example, a value of 100 means that all regions with fewer than 100 pixels will be rejected.

- Groups disparity regions based on their connectedness. Disparities are grouped together in the same region if they are within this distance in pixels.

Synchronization

- Whether to use approximate synchronization. Set to true if the left and right cameras do not produce exactly synced timestamps.

- Size of message queue for each synchronized topic. You may need to raise this if images take significantly longer to travel over the network than camera info and/or the delay from disparity processing is too long.

stereo_image_proc/point_cloud2

Combines a rectified color image and disparity image to produce a point cloud.Subscribed Topics

left/image_rect_color (sensor_msgs/Image)- Left color rectified image stream.

- Left camera metadata.

- Right camera metadata.

- Floating point disparity image with metadata.

Published Topics

points2 (sensor_msgs/PointCloud2)- Stereo point cloud with RGB color.

Parameters

~approximate_sync (bool, default: false)- Whether to use approximate synchronization. Set to true if the left and right cameras do not produce exactly synced timestamps.

- Size of message queue for each synchronized topic. You may need to raise this if images take significantly longer to travel over the network than camera info and/or the delay from disparity processing is too long.

stereo_image_proc/point_cloud

Combines a rectified color image and disparity image to produce a sensor_msgs/PointCloud. Provided for backwards compatibility; this message type is obsoleted by sensor_msgs/PointCloud2.Subscribed Topics

left/image_rect_color (sensor_msgs/Image)- Left color rectified image stream.

- Left camera metadata.

- Right camera metadata.

- Floating point disparity image with metadata.

Published Topics

points (sensor_msgs/PointCloud)- Stereo point cloud with RGB color.

Parameters

~approximate_sync (bool, default: false)- Whether to use approximate synchronization. Set to true if the left and right cameras do not produce exactly synced timestamps.

- Size of message queue for each synchronized topic. You may need to raise this if images take significantly longer to travel over the network than camera info and/or the delay from disparity processing is too long.

Launch files

New in ROS Fuerte

stereo_image_proc.launch

Loads the full constellation of image_proc/stereo_image_proc nodelets for one stereo camera pair into a user-provided nodelet manager. The in-process equivalent of running the stereo_image_proc node. Launch (or include in a higher-level launch file) in the camera namespace, just as you would run stereo_image_proc.

Arguments

manager (string)

- The name of the target nodelet manager. It will need to be globally qualified (e.g. /my_manager), unless the manager is already in the stereo namespace.

- If true, enables bond topics and respawns any nodelet loaders that die. If the manager also respawns, the system is robust to any node dying. However, the proliferation of bond topics can overwhelm introspection tools like rqt_graph.

Remapping

- Remap the 'left' camera namespace.

- Remap the 'right' camera namespace.

Subscribed and Published Topics

Identical to the stereo_image_proc node.