
Calibration of an external camera using the ViSP library

Description: This tutorial is a step-by-step guide that shows how to define your calibration pattern and its model. It then discusses camera calibration using the ViSP library. Finally, it briefly walks you through the calibration results.

Keywords: visp camera calibration

Tutorial Level: INTERMEDIATE


Before starting

Make sure you have the following:

- A ROS-compatible camera driver. You can use the experimental visp_camera_calibration_camera node for this.

- A calibration pattern. You can print the pattern used in the Lagadic laboratory.

- A 3D model of your grid.

- A reasonably well-lit space to display the calibration pattern from various angles.

Compiling

Start by getting the dependencies and compiling the package.

If you use catkin:

rosdep install visp_camera_calibration
catkin_make -DCMAKE_BUILD_TYPE=Release --pkg visp_camera_calibration

If you use rosbuild:

rosdep install visp_camera_calibration
rosmake visp_camera_calibration

Setting up

Camera

Plug in your camera and install the corresponding ROS package to be able to grab images.

If your camera is a FireWire camera, install the camera1394 package. For simplicity's sake, start by creating a roslaunch file named calib-live-firewire.launch. To grab and display 640x480 images in mono8 format from this camera, it should have the following structure:

<launch>
  <!-- % rosrun rqt_console rqt_console -->
  <node pkg="rqt_console" name="rqt_console" type="rqt_console"/>
  <!-- % rosrun camera1394 camera1394_node -->
  <node pkg="camera1394" type="camera1394_node" name="my_camera1394_node" args="_video_mode:=640x480_mono8"/>
  <!-- % rosrun image_view image_view image:=/camera/image_raw -->
  <node pkg="image_view" type="image_view" name="my_image_raw_viewer" args="image:=/camera/image_raw"/>
</launch>

Make sure your camera is publishing on the topic image_raw. This is a standard ROS topic for cameras. You can check this by doing:

rostopic list

Image processing

Modify the roslaunch file calib-live-firewire.launch to add the following lines:

<launch>

...

<arg name="calibration_path" default="calibration.ini" />

<group ns="visp_camera_calibration">

<node pkg="visp_camera_calibration" name="visp_camera_calibration_calibrator" type="visp_camera_calibration_calibrator"/>

<node pkg="visp_camera_calibration" name="visp_camera_calibration_image_processing" type="visp_camera_calibration_image_processing" args="camera_prefix:=/camera">

<param name="gray_level_precision" value="0.7" />

<param name="size_precision" value="0.5" />

<param name="pause_at_each_frame" value="False" />

<param name="calibration_path" type="string" value="$(arg calibration_path)" />

</node>

</group>

</launch>

The camera_prefix argument used in the previous lines indicates /camera as the base path for camera services and topics. Consequently, the calibrator will subscribe to /camera/image_raw and will call the service /camera/set_camera_info.
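As a rough illustration of how the two names above follow from the prefix, the sketch below joins a base path and a relative name. The helper `resolve` is hypothetical and is not part of the visp_camera_calibration package; it only mirrors the naming described in the text.

```python
def resolve(camera_prefix, name):
    """Join a base path and a relative name with exactly one slash.

    Hypothetical helper for illustration only; the real node does its
    own name resolution.
    """
    return camera_prefix.rstrip("/") + "/" + name.lstrip("/")


# Names the tutorial says are used when camera_prefix is /camera.
image_topic = resolve("/camera", "image_raw")
set_info_service = resolve("/camera", "set_camera_info")

print(image_topic)
print(set_info_service)
```

If your driver publishes under a different namespace, changing the prefix in the launch file should be enough, provided the driver offers both the image topic and the set_camera_info service under that namespace.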

Calibration grid pattern

Print your pattern so that it has the highest possible contrast, which makes tracking easier. Your pattern should be a set of points. Measure and/or enter the 3D coordinates of your points in the object frame. Chances are you printed the pattern on a piece of paper; if so, all your points will have the same Z coordinate.

Fill the model definition into the launch file. To do so, you have to define three parameters, each one corresponding to one point coordinate (X, Y or Z) and each listing that coordinate for every point:

<launch>

...

<group ns="visp_camera_calibration">

<node pkg="visp_camera_calibration" ... >

...

<!-- 3D coordinates of all points on the calibration pattern. In this example, points are planar -->

<rosparam param="model_points_x">[0.0, 0.03, 0.06, 0.09, 0.12, 0.15, 0.0, 0.03, 0.06, 0.09, 0.12, 0.15, 0.0, 0.03, 0.06, 0.09, 0.12, 0.15, 0.0, 0.03, 0.06, 0.09, 0.12, 0.15, 0.0, 0.03, 0.06, 0.09, 0.12, 0.15, 0.0, 0.03, 0.06, 0.09, 0.12, 0.15]</rosparam>

<rosparam param="model_points_y">[0.0, 0.00, 0.00, 0.00, 0.00, 0.00, .03, 0.03, 0.03, 0.03, 0.03, 0.03, .06, 0.06, 0.06, 0.06, 0.06, 0.06, .09, 0.09, 0.09, 0.09, 0.09, 0.09, 0.12,0.12, 0.12, 0.12, 0.12, 0.12, 0.15,0.15, 0.15, 0.15, 0.15, 0.15]</rosparam>

<rosparam param="model_points_z">[0.0, 0.00, 0.00, 0.00, 0.00, 0.00, 0.0, 0.00, 0.00, 0.00, 0.00, 0.00, 0.0, 0.00, 0.00, 0.00, 0.00, 0.00, 0.0, 0.00, 0.00, 0.00, 0.00, 0.00, 0.00, 0.00, 0.00, 0.00, 0.00, 0.00, 0.0, 0.00, 0.00, 0.00, 0.00,0.00]</rosparam>

</node>

</group>

</launch>
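Typing 36 coordinates by hand is error-prone, so for a regular grid it may be easier to generate the three lists. The sketch below assumes a 6 x 6 planar grid with a spacing of 0.03 between points, as in the example above; `ROWS`, `COLS`, `SPACING` and both helper functions are illustrative names, not part of the package.

```python
# Assumed grid layout matching the example launch file: 6 x 6 points, 0.03 apart.
ROWS, COLS, SPACING = 6, 6, 0.03


def grid_model(rows, cols, spacing):
    """Return (xs, ys, zs) in row-major order, with all points in the Z = 0 plane."""
    xs, ys, zs = [], [], []
    for r in range(rows):
        for c in range(cols):
            # round() removes floating-point noise such as 0.09000000000000001
            xs.append(round(c * spacing, 6))
            ys.append(round(r * spacing, 6))
            zs.append(0.0)
    return xs, ys, zs


def as_rosparam(name, values):
    """Format one coordinate list as a <rosparam> element for the launch file."""
    return '<rosparam param="%s">%s</rosparam>' % (name, values)


xs, ys, zs = grid_model(ROWS, COLS, SPACING)
for name, values in (("model_points_x", xs),
                     ("model_points_y", ys),
                     ("model_points_z", zs)):
    print(as_rosparam(name, values))
```

The printed lines can be pasted inside the image processing node of the launch file. Adjust the three constants to the pattern you actually printed and measured.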

3D coordinates of the points used for initialisation

You also need to select a smaller number (4 for example) of 3D points on your model. These points will be the starting point for pose estimations. Add these points to the launch file the same way you wrote the model definition:

<launch>

...

<group ns="visp_camera_calibration">

<node pkg="visp_camera_calibration" ... >

...

<!-- 3D coordinates of 4 points the user has to select to initialise the calibration process -->

<rosparam param="selected_points_x">[0.03, 0.03, 0.09, 0.12]</rosparam>

<rosparam param="selected_points_y">[0.03, 0.12, 0.12, 0.03]</rosparam>

<rosparam param="selected_points_z">[0.00, 0.00, 0.00, 0.00]</rosparam>

</node>

</group>

</launch>

Note that the matching between these 3D coordinates and the 2D pixel coordinates is done by the user, who is asked to click on the points.
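Since the selected points are meant to be points of the model, a quick consistency check can catch typos before you start clicking. This is a minimal sketch that assumes the 6 x 6 grid with 0.03 spacing from the model definition example; the variable names are illustrative.

```python
# Model grid assumed from the example above: 6 x 6 points, 0.03 spacing, Z = 0.
model = {(round(c * 0.03, 6), round(r * 0.03, 6), 0.0)
         for r in range(6) for c in range(6)}

# Values copied from the selected_points_* parameters.
selected_x = [0.03, 0.03, 0.09, 0.12]
selected_y = [0.03, 0.12, 0.12, 0.03]
selected_z = [0.00, 0.00, 0.00, 0.00]

selected = [(round(x, 6), round(y, 6), round(z, 6))
            for x, y, z in zip(selected_x, selected_y, selected_z)]

# Any point listed here is not part of the model and is probably a typo.
missing = [p for p in selected if p not in model]
print("selected points missing from the model:", missing)
```

Remember the order of the selected points, since it is presumably the order in which you will be asked to click them in the image.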

Running the calibration

Doing the image processing

To start the calibration, just type:

roslaunch calib-live-firewire.launch

The full calib-live-firewire.launch file is available here.

Three nodes will be launched (besides the camera):

- The console

- The calibrator node which will stay silent

- The image processing interface node with which you will interact.

In the rqt_console, you will see instructions about how to proceed and information about the calibration process. Some information will also be shown as red text over the images.

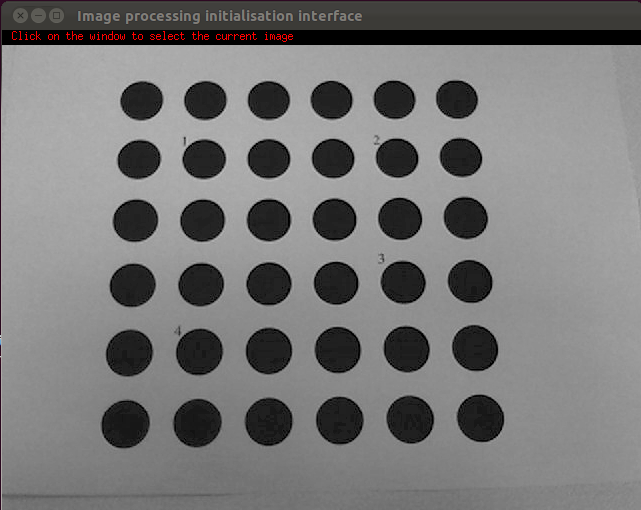

Move the printed pattern somewhere in the field of view of the camera. The image should appear:

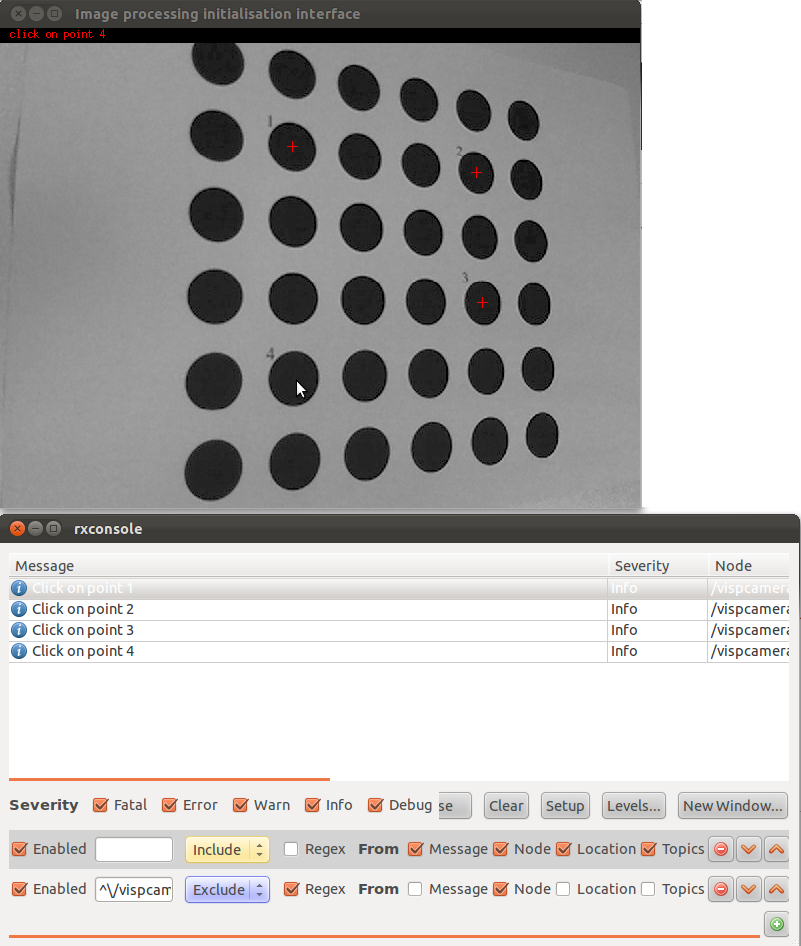

When you are ready, click anywhere on the window. Once you have clicked, you need to select points in the window called "image processing initialisation interface". Select the special points you defined earlier in the launch file.

When the four points are selected, the image processing interface will try to detect the rest of the points on your grid.

Then, click on the interface window again and repeat the process until you have processed enough samples.

The calibrator node now has enough information to compute the new camera parameters. You are now ready to call the calibration service.

Calibrating

In a new terminal type:

rosservice call visp_camera_calibration/calibrate 2 640 480

Those parameters may not seem intuitive, so here is a quick overview:

- 2 is a code referring to a calibration model with distortion. You can also pass 1 to estimate a model without distortion.

- 640 and 480 are the width and height of the image.
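If you script the calibration, the three arguments can be assembled and validated before the service is called. The sketch below only builds the command line shown above; the constant names and the `calibrate_command` helper are assumptions made for illustration, and the actual call is left commented out because it needs a running ROS system.

```python
METHOD_WITHOUT_DISTORTION = 1  # per the tutorial: model without distortion
METHOD_WITH_DISTORTION = 2     # per the tutorial: model with distortion


def calibrate_command(method, width, height):
    """Build the argument list for the rosservice call used in this tutorial."""
    if method not in (METHOD_WITHOUT_DISTORTION, METHOD_WITH_DISTORTION):
        raise ValueError("method must be 1 (no distortion) or 2 (distortion)")
    if width <= 0 or height <= 0:
        raise ValueError("image size must be positive")
    return ["rosservice", "call", "visp_camera_calibration/calibrate",
            str(method), str(width), str(height)]


cmd = calibrate_command(METHOD_WITH_DISTORTION, 640, 480)
print(" ".join(cmd))

# To actually run it (requires the calibrator node to be up):
# import subprocess
# subprocess.run(cmd, check=True)
```

The width and height should match the video mode configured for the camera, 640x480 in this tutorial.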

Calibration Results

You should have the following output:

stdDevErrs: [0.43714855418957455, 0.5039971167151152, 0.4991662359530044, 0.4800084572634955]

The quantities are expressed in pixels and should be reasonably low.
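What counts as "reasonably low" depends on your application. As a simple sketch, the values can be compared against a threshold of your choosing; the 1-pixel limit below is a hypothetical value for illustration, not a recommendation from the package.

```python
# Values copied from the example service output above.
std_dev_errs = [0.43714855418957455, 0.5039971167151152,
                0.4991662359530044, 0.4800084572634955]

MAX_ACCEPTABLE_PX = 1.0  # hypothetical threshold, not taken from the tutorial

worst = max(std_dev_errs)
mean = sum(std_dev_errs) / len(std_dev_errs)
acceptable = worst <= MAX_ACCEPTABLE_PX

print("mean %.3f px, worst %.3f px, acceptable: %s" % (mean, worst, acceptable))
```

If a value stands out from the others, it may be worth restarting and processing new samples with better lighting or a steadier pattern.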

The node will then attempt to set the calculated camera info to the calibrated camera. If possible, it will also write a calibration.ini file (in your $HOME/.ros directory) containing the calibration parameters in the INI format:

# Camera intrinsics

[image]

width
640

height
480

[image_raw]

camera matrix
548.83913 0.00000 309.68288
0.00000 541.05367 246.39086
0.00000 0.00000 1.00000

distortion
-0.01019 0.00000 0.00000 0.00000 0.00000

rectification
1.00000 0.00000 0.00000
0.00000 1.00000 0.00000
0.00000 0.00000 1.00000

projection
548.83913 0.00000 309.68288 0.00000
0.00000 541.05367 246.39086 0.00000
0.00000 0.00000 1.00000 0.00000
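To inspect the result programmatically, the file can be read with a few lines of Python. The sketch below is a deliberately naive reader that takes the numbers following each label; it is not the parser ROS itself uses, and `values_after` is a hypothetical helper. It assumes the camera matrix is stored row by row as fx, 0, cx, 0, fy, cy, 0, 0, 1, which matches the values shown above.

```python
# Example content copied from the calibration.ini shown above.
CALIBRATION_TEXT = """\
# Camera intrinsics
[image]
width
640
height
480
[image_raw]
camera matrix
548.83913 0.00000 309.68288
0.00000 541.05367 246.39086
0.00000 0.00000 1.00000
distortion
-0.01019 0.00000 0.00000 0.00000 0.00000
"""


def values_after(text, label, count, cast=float):
    """Return the first `count` whitespace-separated values following `label`."""
    tail = text.split(label, 1)[1]
    return [cast(token) for token in tail.split()[:count]]


width = values_after(CALIBRATION_TEXT, "width", 1, int)[0]
height = values_after(CALIBRATION_TEXT, "height", 1, int)[0]
K = values_after(CALIBRATION_TEXT, "camera matrix", 9)
D = values_after(CALIBRATION_TEXT, "distortion", 5)

# Row-major 3x3 camera matrix: focal lengths and principal point, in pixels.
fx, cx = K[0], K[2]
fy, cy = K[4], K[5]

print("image size: %d x %d" % (width, height))
print("fx=%.5f fy=%.5f cx=%.5f cy=%.5f" % (fx, fy, cx, cy))
print("distortion:", D)
```

A principal point close to the image center (320, 240 for a 640x480 image) and similar values for fx and fy are a quick sanity check on the result.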

Links to go further

Once you've obtained the camera parameters, you can load them into your camera and use them to rectify your image. Standard ROS cameras are able to load yml or ini calibration files by using the camera_info_manager package. This may be simplified with a graphical user interface. Check the camera1394 package and the dynamic reconfigure package to see how to graphically load camera parameters into the camera node.

Once the camera is configured you should use the image_proc package to rectify the image, as indicated in the following tutorial.