| |

Calculating a NextBestView

Description: In this tutorial we will set the robot position and determine a NextBestViewTutorial Level: BEGINNER

Contents

Description

In this tutorial we will set the robot position and determine a NextBestView

Setup

Do the previous tutorial (http://wiki.ros.org/asr_next_best_view/AsrNextBestViewSetPointCloud)

Tutorial

First we set the robot position with:

rostopic pub /initialpose geometry_msgs/PoseWithCovarianceStamped "header:

seq: 0

stamp:

secs: 0

nsecs: 0

frame_id: 'map'

pose:

pose:

position: {x: -0.52, y: -0.39, z: 0.0}

orientation: {x: 0.0, y: 0.0, z: 0.17919106, w: 0.98381429}

covariance: [0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0,

0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0,

0.0, 0.0, 0.0, 0.0, 0.0, 0.0]" --oncethe position is the center of the robot base, while the orientation is the rotation of the robot base (in this case it's ~20 degree).

Then we send the current robot pose to the next_best_view node:

rosservice call /nbv/set_init_robot_state "robotState: {pan: 0.0, tilt: 0.0, rotation: 0.35, x: -0.52, y: -0.39}"We didn't modify the ptu, so the ptu position is (0, 0)

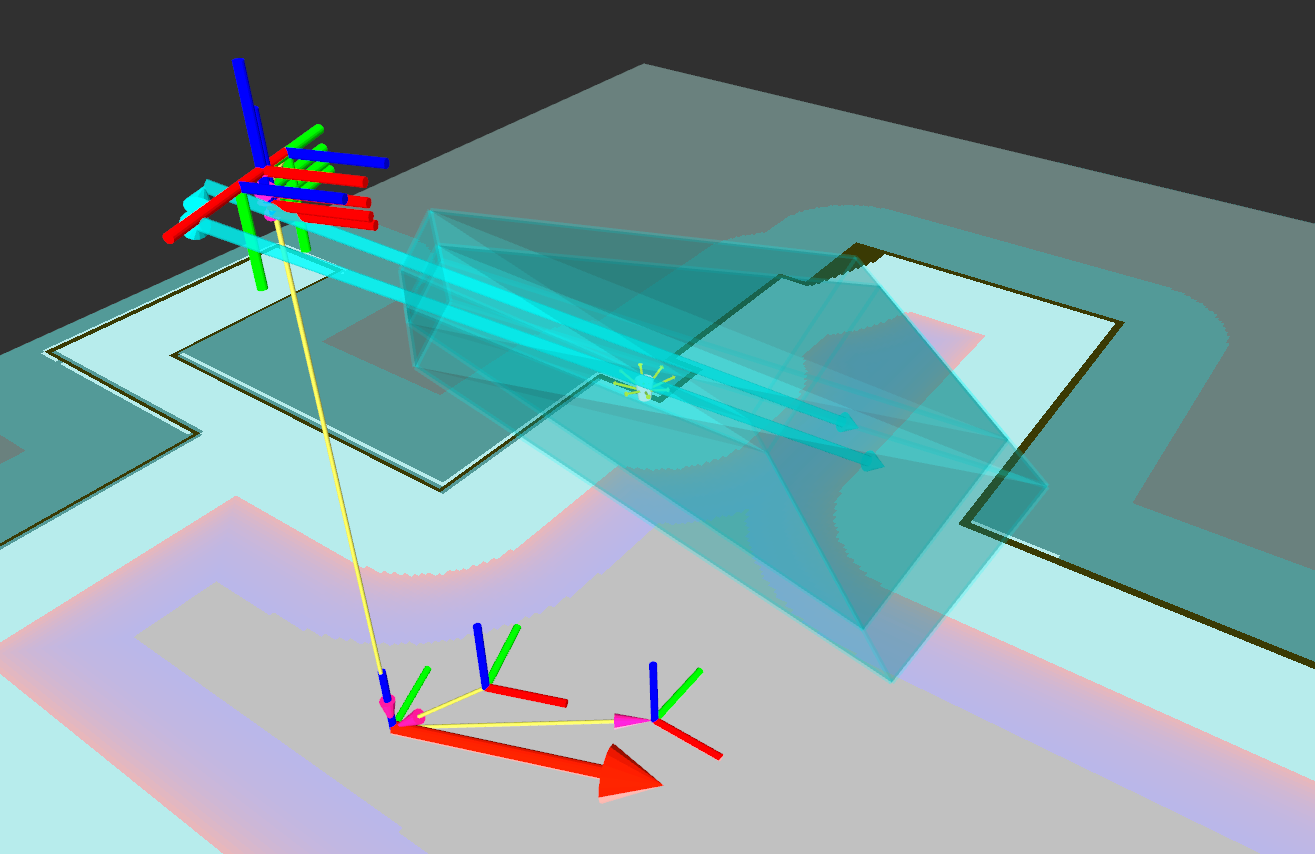

Now we get our current camera pose by calling the robot model service to display the current camera frustum:

rosservice call /asr_robot_model_services/GetCameraPose "{}"

pose:

position:

x: -0.505544160413

y: -0.428367026231

z: 1.47388

orientation:

x: 0.0

y: 0.0

z: 0.17919106061

w: 0.983814293349Now we send this pose back to the next_best_view node to visualize the frustum (keep in mind we didn't modify the PTU):

rosservice call /nbv/trigger_frustum_visualization "current_pose:

position:

x: -0.50

y: -0.42

z: 1.47

orientation:

x: 0.0

y: 0.0

z: 0.179

w: 0.983"

Finally we do the GetNextBestView call using the camera pose

rosservice call /nbv/next_best_view "current_pose:

position:

x: -0.50

y: -0.42

z: 1.47

orientation:

x: 0.0

y: 0.0

z: 0.179

w: 0.983"

found: True

object_type_name_list: ['Cup']

resulting_pose:

position:

x: -0.5

y: -0.585767805576

z: 1.47000002861

orientation:

x: -0.115216434002

y: 0.243114843965

z: 0.412469029427

w: 0.870338857174

robot_state:

pan: 0.331612557173

tilt: -0.599535286427

rotation: 0.553517401218

x: -0.464413404465

y: -0.59638440609

utility: 0.857888042927

utility_normalization: 0.857888042927

inverse_costs: 138.182449341

base_translation_inverse_costs: 0.998762726784

base_rotation_inverse_costs: 0.327448785305

ptu_movement_inverse_costs: 0.0749999880791

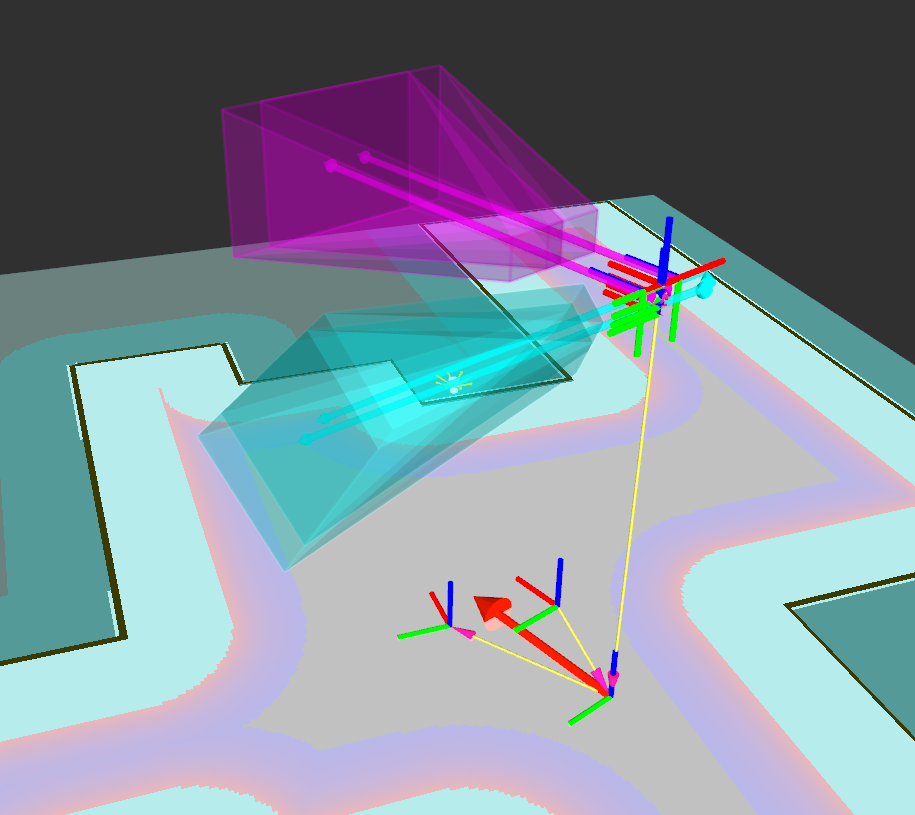

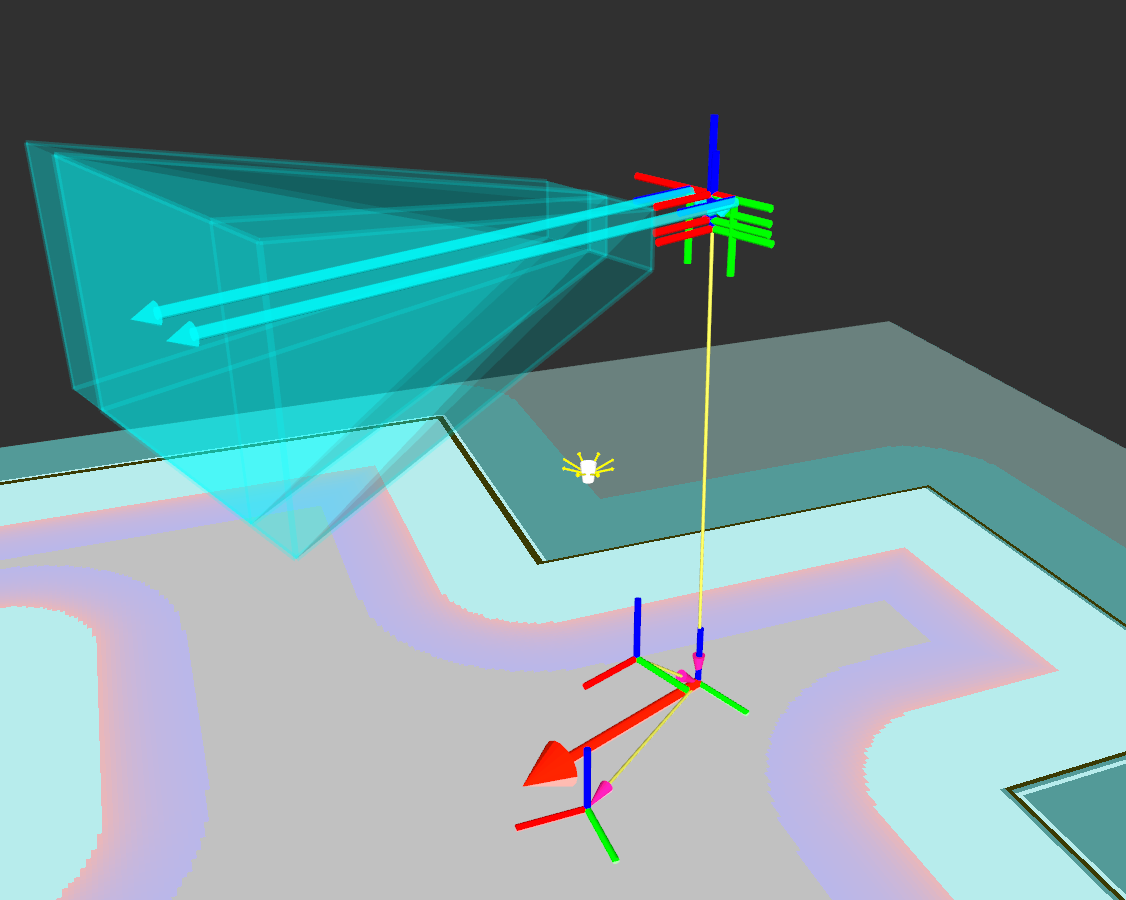

recognition_inverse_costs: 0.0The result contains the camera pose and robot state of the NextBestView and some stats about the view rating. We can now display the found view using:

rosservice call /nbv/trigger_frustum_visualization "current_pose:

position:

x: -0.50

y: -0.585

z: 1.47

orientation:

x: -0.115

y: 0.243

z: 0.412

w: 0.870"

the old view/start view can be shown using:

rosservice call /nbv/trigger_old_frustum_visualization "current_pose:

position:

x: -0.50

y: -0.42

z: 1.47

orientation:

x: 0.0

y: 0.0

z: 0.179

w: 0.983"