Contents

visual_odometry is an application available in ccny_rgbd for estimating the motion of a moving RGBD camera. It requires a stream of registered depth and rgb images, as well as a CameraInfo matrix. These topics can be generated using rgbd_image_proc in conjunctions with ccny_openni_launch, or any compatible driver.

visual_odometry is a real-time application with relatively low computational requirements. It does not output a map, and does not perform SLAM in the sense of closing large loops in the trajectory. For a graph-based SLAM solution, visual_odometry can be used in conjuntion with keyframe_mapper.

ROS API

Subscribed Topics

/rgbd/rgb (sensor_msgs/Image)

- Input RGB image

- Input Depth image (registered in the RGB frame)

- CameraInfo, applies to both images.

Published Topics

odom (nav_msgs/Odometry)

- Pose of the base frame in relation to the fixed frame, as an odometry message.

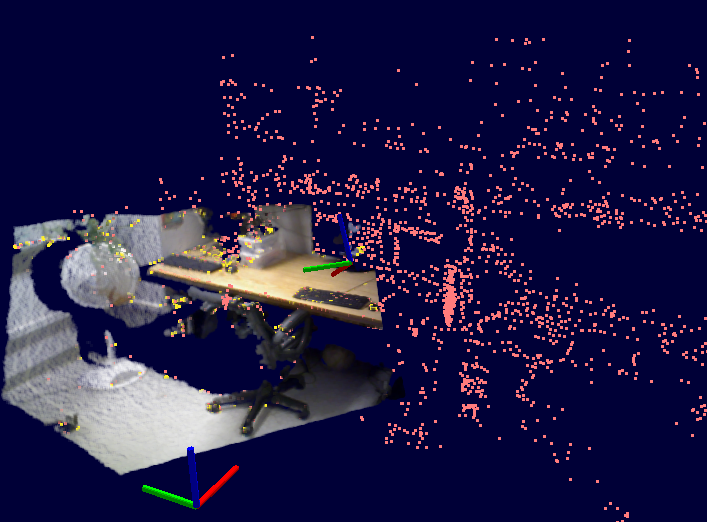

- Point cloud of the detected features

- Point cloud of the sparse model maintained for registration.

- Covariance matrices of the sparse model features. The 3x3 cov. matrix is visualized by the 3 principal components, each with a length of +/- 3 sigmas.

Parameters

General parameters

~queue_size (int, default: 5)

- input and output queue sizes

- The moving frame

- The fixed frame

- Whether to publish a tf transform between the fixed and moving frames.

Feature parameters

~feature/detector_type (string, default: "GFT")

- Type of feature detector. Available types: "GFT", "SURF", "STAR", "ORB"

- Whether to publish the point cloud of 3D features

- Half-size of the window for the Gaussian smoothing kernel. 0 = no smoothing

- Maximum z-range, in meters, allowed for valid features

- (GFT detector only) Number of desired features

- (GFT detector only) Minimum distance, in pixels, between detected features

- (ORB detector only) Number of desired features

- (ORB detector only) Detection threshold

- (STAR detector only) Minimum distance, in pixels, between detected features

- (STAR detector only) Detection threshold

- (SURF detector only) Detection threshold

Registration parameters

~reg/reg_type (string, default: "ICPProbModel")

- Registration type. Available options: "ICPProbModel", "ICP" (deprecated)

- Dimensions which are constrained (no motion allowed). NONE = 0, ROLL_PITCH = 1, ROLL_PITCH_Z = 2. A ground robot, for example, will usually have ROLL_PITCH_Z constraints.

- Maximum ICP iterations convergence criteria for ICP

- Minimum required correspondences for ICP

- Maximum number of features in the sparse model. Once saturated, new points will overwrite old points.

- Maximum distance (in meters) for ICP correspondences

- Maximum Mahalanobis distance (unitless) for feature-to-model association in Kalman Filter

- Number of Euclidean candidates to consider for finding best Mahalanobis nearest neighbor for Kalman Filter

- Whether to publish the sparse model as a point cloud

- Whether to publish the sparse model covariances as a visualization markers. Markers will display the 3 principal axes of the 3x3 covariance matrix, with a length of +/- 3 sigmas

- Linear convergence criteria for ICP

- Angular convergence criteria for ICP

Transforms

Required tf Transforms

camera_link → [incoming image frame]- Transform from the moving frame to the optical camera frame.

Provided tf Transforms

odom → camera_link- The pose of the moving frame in the fixed frame. Only provided when publish_tf is enabled.

Services

save_sparse_map (ccny_rgbd/Save)

- Saves the sparse feature model as a pcd point cloud.

Usage

First, launch the device and image processing via ccny_openni_launch.

Note: You can use any launcher, as long as it outputs a registered depth and rgb image, and the corresponding CameraInfo (see API above).

roslaunch ccny_openni_launch openni.launch

Next, launch the visual_odometry.launch:

roslaunch ccny_rgbd visual_odometry.launch