Automatic Calibration of Extrinsic Parameters

Description: Automatic Calibration of the 3D pose of the Kinect with respect to the robot base. Uses fast ICP to track the 3D pose of the Kinect, then uses a global optimization to retrieve the static transformation.Submitted By: François Pomerleau, Francis Colas and Stéphane Magnenat - ETHZ ASL

Contents

Video

Summary of Contributions

This entry provides the following open-source contributions to the community, all released under a BSD license:

libnabo, a fast K Nearset Neighbor library for low-dimensional spaces. libnabo employs the algorithm of ANN, but we optimized its implementation which makes it 30% faster than ANN (unit testing included).

libpointmatcher, a modular ICP library with a clean pipeline-based architecture. Currently based on Eigen 2, but will switch to Eigen 3 once it is released, to ease integration with pcl.

modular_cloud_matcher, a 3D point-cloud matcher that provides a ROS interface to libpointmatcher. modular_cloud_matcher allows a complete configuration of the ICP pipeline through parameters.

extrinsic_calibration, a tool performing global optimization to retrieve the static transformation between the robot base and the Kinect.

Description of the entry

Our entry has two aspects:

a tracker using a modular and state-of-the-art ICP algorithm that computes online the motion of the kinect (modular_cloud_matcher),

a calibration algorithm that computes the transformation between the kinect and a robot based on their motions (extrinsic_calibration).

Tracker

When observing the same visual scene from two different points of view, it is possible to estimate the displacement between the two points of view. To achieve this, we align both visual scenes using the Iterative Closest Point registration algorithm.

This algorithm is provided by modular_cloud_matcher. It takes two point clouds, matches each point of one cloud to the nearest one in the other cloud, rejects outliers, and then computes the transformation that minimizes the error between the points. These matching, rejection and minimization steps are done several times until the algorithm converges to a local minimum (see figure below) and outputs a transformation from one cloud to the other.

Computing the transformation in this way between each pair of frames allows us to have a kind of visual odometry. However, as for a robot odometry, accumulating a lot of small and slightly noisy displacements creates drift in the position estimation. Therefore, we use keyframes as reference instead of the previous point cloud. We create a new keyframe each time the estimated overlap between the point clouds is too low.

Indeed if the two observations are too different, the ICP algorithm may not converge. Another case of failure can be caused by dynamic objects. This is why we filter some outliers which are points that are too different between the two point clouds. Without this filter, the tracker would try to compensate both for the motion of the kinect and the motion of the dynamic objects.

With this (see figure below), we obtain an estimate of the motion of the Kinect which is fast (10-30 Hz on a laptop), reliable, and with only minimal drift. This motion estimate can afterwards be used for calibration (as demonstrated in this entry), environment reconstruction, or simply to have a cool flashlight effect under rviz.

![]()

Calibration

When mounting a sensor on a robot using ROS, an important problem is to define its position and orientation with respect to the robot. The accuracy is important as all the readings of this sensor are affected by this transformation. Unfortunately, this requires, in practice, to measure inconvenient distances and angles. We propose a calibration procedure, implemented in extrinsic_calibration, that allows to automatically find the transformation without any manual measurement.

In this entry, we take the example of the kinect mounted on a ground robot but the module is generic and can work with a different robot as far as some kind of odometry is available, and with a different sensor, as far as its pose is tracked and published in tf.

You can see in the picture above that precise measurement of the position and orientation of the center of the kinect with respect to the middle between the wheels can be difficult and uncertain.

The calibration requires first to log the motion made by each reference frame while the robot is moving using tf_logger. Then optimizer can be run to get the parameters. It outputs the command line to run a static_transform_publisher and an equivalent entry that you can directly cut and paste into a launch file.

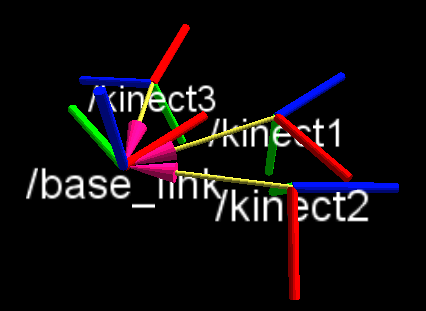

The figure below presents the results of three different runs using the kinect on the robot in three different positions.

|

|

|

|

How to Reproduce

Show EOL distros:

This entry depends on the modular_cloud_matcher and on the ni driver. To test the extrinsic_calibration a robot with odometry published in tf is also needed.

Boxturtle is not supported.

The following step-by-step procedure will install the proper setup to test this entry on cturtle:

- Create a working directory:

mkdir test-contest-entry && cd test-contest-entry

Install and setup the ni stack, this requires overlaying pcl as explained in the ni page.

Install modular_cloud_matcher and extrinsic_calibration

git clone git://github.com/ethz-asl/ros-mapping.git ethzasl_mapping cd ethzasl_mapping git checkout ros_contest

- Build packages:

rosmake openni_camera modular_cloud_matcher extrinsic_calibration

- Run test code, do not forget to plug the kinect in:

roslaunch modular_cloud_matcher openni_kinect_tracker_viewer.launch

- If you have a robot with some odometry you can first run:

rosrun extrinsic_calibration tf_logger

while moving the robot, then run:rosrun extrinsic_calibration optimizer output.txt

which should give you a command line you can execute to display the transform in rviz.

Install, compile and setup the ni stack as explained in the ni page.

Install and compile the modular_cloud_matcher as explained in the modular_cloud_matcher page. This fetches the ethzasl_mapping as well.

- Compile the calibration package:

rosmake extrinsic_calibration

- If you have a robot with some odometry you can first run:

rosrun extrinsic_calibration tf_logger

while moving the robot, then run:rosrun extrinsic_calibration optimizer output.txt

which should give you a command line you can execute to display the transform in rviz.

Dependencies

Software:

point_cloud_converter (only for cturtle)

Hardware:

- any robot moving on the ground plane

- Kinect

If you do not own a Kinect

You can test the tracker using a dataset available at http://www.asl.ethz.ch/research/datasets. The latter provides bags of three different movements, at three different speeds, in environments of three different complexities.

Limitations

Without adding extra processing, such as ground extraction, it is not possible to retrieve the height of the Kinect solely using the difference in transforms with the robot only moving on the ground plane.

This is a limitation in the dataset as the optimizer computes the full transformation but the z component of the translation is given as 0 in the output.