Only released in EOL distros.

Package Summary

Construction and Use of a Recognition Database for Grasping Purposes

- Maintainer status: maintained

- Maintainer: David Kent <dekent AT gatech DOT edu>, Russell Toris <russell.toris AT gmail DOT com>

- Author: David Kent <dekent AT gatech DOT edu>, Russell Toris <russell.toris AT gmail DOT com>, bhetherman <bhetherman AT wpi DOT edu>

- License: BSD

- Bug / feature tracker: https://github.com/GT-RAIL/rail_pick_and_place/issues

- Source: git https://github.com/GT-RAIL/rail_pick_and_place.git (branch: master)

About

The rail_recognition package contains nodes for object recognition and demonstration grasp selection using models from a grasp database, handled by graspdb. The package also contains a node for generating object models from grasp demonstrations collected with the rail_grasp_collection package. The recognizer can optionally run a 2D image recognizer first in the full recognition pipeline, which significantly reduces the runtime of the point cloud recognizer by limiting the number of candidate classes to test with point cloud registration. This package also contains nodes for data collection, training, and testing of the 2D recognizer.

Nodes

model_generator

Generate object models for recognition and manipulation using demonstrations from the grasp database.

Action Goal

model_generator/generate_models (rail_pick_and_place_msgs/GenerateModelsGoal)- Generate models from a set of grasp demonstrations or object models (specified by id in the grasp database), and store the result as new models in the database.

Action Result

model_generator/generate_models (rail_pick_and_place_msgs/GenerateModelsResult)- Model generation result.

Published Topics

model_generator/debug_pc (sensor_msgs/PointCloud2)- Point cloud resulting from registration, only published if the debug parameter is set to true.

- Grasp poses resulting from registration, only published if the debug parameter is set to true.

Parameters

/graspdb/host (string, default: "127.0.0.1")- Grasp database host IP.

- Grasp database port.

- Grasp database username.

- Grasp database password.

- Grasp database name.

- Debug flag, set to true to publish debugging information.

object_recognizer

Object recognition of passed-in segmented objects; returns the recognized object with its name, database id, and grasps filled in.

Action Goal

rail_recognition/recognize_object/goal (rail_manipulation_msgs/RecognizeObjectGoal)- Recognize a segmented object passed in as the goal.

Action Result

rail_recognition/recognize_object/result (rail_manipulation_msgs/RecognizeObjectResult)- The segmented object with recognized information (name, database id, and example grasps ordered by success rate) filled in, or simply the segmented object if recognition failed.

Parameters

/graspdb/host (string, default: "127.0.0.1")- Grasp database host IP.

- Grasp database port.

- Grasp database username.

- Grasp database password.

- Grasp database name.
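The object_recognizer result lists example grasps ordered by success rate. A minimal sketch of that ordering, assuming each stored grasp tracks hypothetical successes and attempts counts (the actual graspdb field names are not documented on this page):

```python
from dataclasses import dataclass
from typing import List


@dataclass
class DemoGrasp:
    """Hypothetical stand-in for a stored demonstration grasp."""
    grasp_id: int
    successes: int
    attempts: int

    @property
    def success_rate(self) -> float:
        # Treat never-attempted grasps as 0.0 to avoid dividing by zero.
        return self.successes / self.attempts if self.attempts > 0 else 0.0


def order_by_success_rate(grasps: List[DemoGrasp]) -> List[DemoGrasp]:
    """Return grasps sorted from highest to lowest success rate."""
    # sorted() stays stable with reverse=True, so equal rates keep input order.
    return sorted(grasps, key=lambda g: g.success_rate, reverse=True)


grasps = [DemoGrasp(1, 2, 4), DemoGrasp(2, 3, 3), DemoGrasp(3, 0, 0)]
print([g.grasp_id for g in order_by_success_rate(grasps)])  # [2, 1, 3]
```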

object_recognition_listener

Object recognition of segmented objects, specified by object index in the segmented object list.

Action Goal

rail_recognition/recognize (rail_manipulation_msgs/RecognizeGoal)- Recognize a segmented object passed in by index.

- Recognize all segmented objects.

Action Result

rail_recognition/recognize (rail_manipulation_msgs/RecognizeResult)- Object recognition success.

- Object recognition successes.

Subscribed Topics

object_topic param (rail_manipulation_msgs/SegmentedObjectList)- Incoming segmented object data.

Published Topics

object_recognition_listener/recognized_objects (rail_manipulation_msgs/SegmentedObjectList)- Outgoing segmented and recognized object data.

- Poses for most recently recognized object, only published if the debug flag is set.

Parameters

/graspdb/host (string, default: "127.0.0.1")- Grasp database host IP.

- Grasp database port.

- Grasp database username.

- Grasp database password.

- Grasp database name.

- Incoming segmented objects topic.

- Debug flag, publishes grasp poses on recognized objects when true.

- Enable/disable narrowing grasp models by first using the 2D image recognizer before performing point cloud recognition.

rail_grasp_model_retriever

Grasp model retrieval from the grasp database via action server.

Action Goal

rail_grasp_model_retriever/retrieve_grasp_model/goal (rail_pick_and_place_msgs/RetrieveGraspModelGoal)- Grasp model to be retrieved from the database.

Action Result

rail_grasp_model_retriever/retrieve_grasp_model/result (rail_pick_and_place_msgs/RetrieveGraspModelResult)- The grasp model retrieved from the database. The point cloud and grasp poses will also be published on their respective topics when the result is sent.

Published Topics

rail_grasp_model_retriever/point_cloud (sensor_msgs/PointCloud2)- Retrieved point cloud.

- Retrieved grasp poses.

Parameters

/graspdb/host (string, default: "127.0.0.1")- Grasp database host IP.

- Grasp database port.

- Grasp database username.

- Grasp database password.

- Grasp database name.

metric_trainer

Generate data sets for training registration metric decision trees used in classifying pairwise point cloud registrations for the object model generation and recognition pipelines.

Action Goal

metric_trainer/train_metrics/goal (rail_pick_and_place_msgs/TrainMetricsGoal)- Initiate metric training for all pairs of point clouds of a given type; requires user input to label whether each pairwise merge is successful.

Action Result

metric_trainer/train_metrics/result (rail_pick_and_place_msgs/TrainMetricsResult)- Training result, true on success. The training data itself is saved to an output file in the .ros directory.

Actions Called

metric_trainer/get_yes_no_feedback (rail_pick_and_place_msgs/GetYesNoFeedback)- Action for collecting feedback for the success of a pairwise merge.

Published Topics

metric_trainer/base_pc - Base point cloud used for pairwise registration.

- Point cloud aligned to the base point cloud.

Parameters

/graspdb/host (string, default: "127.0.0.1")- Grasp database host IP.

- Grasp database port.

- Grasp database username.

- Grasp database password.

- Grasp database name.
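Because metric_trainer covers all pairs of point clouds of a given type, n stored clouds yield n(n-1)/2 pairwise registrations to label. A minimal sketch of that enumeration, assuming a hypothetical ask_user callback standing in for the get_yes_no_feedback action and a hypothetical features callback standing in for the registration metrics; the real output file format in the .ros directory is not specified here:

```python
import csv
import io
from itertools import combinations
from typing import Callable, List, Sequence, TextIO


def collect_pairwise_labels(
    cloud_ids: Sequence[int],
    features: Callable[[int, int], List[float]],
    ask_user: Callable[[int, int], bool],
    out: TextIO,
) -> int:
    """Write one CSV row per unordered pair: base id, aligned id, metrics, label."""
    writer = csv.writer(out)
    count = 0
    # combinations() yields each unordered pair exactly once: n * (n - 1) / 2 pairs.
    for base, aligned in combinations(cloud_ids, 2):
        label = 1 if ask_user(base, aligned) else 0
        writer.writerow([base, aligned, *features(base, aligned), label])
        count += 1
    return count


buf = io.StringIO()
n = collect_pairwise_labels(
    [10, 11, 12, 13],
    features=lambda a, b: [abs(a - b) * 0.1],  # placeholder metric
    ask_user=lambda a, b: (a + b) % 2 == 1,    # placeholder yes/no answer
    out=buf,
)
print(n)  # 6
```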

collect_images

Run an instance of the 2D image recognizer to save 2D images as training/test data at every segmentation call.

Subscribed Topics

rail_segmentation/segmented_objects (rail_manipulation_msgs/SegmentedObjectList)- Segmented objects topic from which to save images.

Parameters

collect_images/saved_data_dir (string, default: ros::package::getPath("rail_recognition")/data/saveddata)- Directory containing trained image recognizer data (not used by this node).

- Directory containing training images (not used by this node).

- Directory containing test images (not used by this node).

- Location at which to save new images.

- Flag for saving new images; if true, each segmented object from the collect_images/segmented_objects_topic will be saved as a 2D image in the collect_images/newimages directory.

- Topic for incoming segmented objects, used only if collect_images/save_new_images is true.

save_image_recognizer_features

Run an instance of the 2D image recognizer to build the dictionaries for the recognizer, then exit.

Subscribed Topics

rail_segmentation/segmented_objects (rail_manipulation_msgs/SegmentedObjectList)- Segmented objects topic from which to save images.

Parameters

save_image_recognizer_features/saved_data_dir (string, default: ros::package::getPath("rail_recognition")/data/saveddata)- Directory at which to save the dictionaries.

- Directory containing training images, organized by object class.

- Directory containing test images, organized by object class (not used by this node).

- Location at which to save new images (not used by this node).

- Flag for saving new images (not used by this node).

- Topic for incoming segmented objects (not used by this node).

train_image_recognizer

Run an instance of the 2D image recognizer to train the neural net on the training dataset, then exit.

Subscribed Topics

rail_segmentation/segmented_objects (rail_manipulation_msgs/SegmentedObjectList)- Segmented objects topic from which to save images.

Parameters

train_image_recognizer/saved_data_dir (string, default: ros::package::getPath("rail_recognition")/data/saveddata)- Directory at which to save the dictionaries.

- Location of the training dataset, organized by object class.

- Directory containing test images, organized by object class (not used by this node).

- Location at which to save new images (not used by this node).

- Flag for saving new images (not used by this node).

- Topic for incoming segmented objects (not used by this node).

test_image_recognizer

Run an instance of the 2D image recognizer to test the trained neural net on the test dataset, then exit. Results of the test are printed to the terminal.

Subscribed Topics

rail_segmentation/segmented_objects (rail_manipulation_msgs/SegmentedObjectList)- Segmented objects topic from which to save images.

Parameters

test_image_recognizer/saved_data_dir (string, default: ros::package::getPath("rail_recognition")/data/saveddata)- Directory at which to save the dictionaries.

- Directory containing training images, organized by object class (not used by this node).

- Location of the test dataset, organized by object class.

- Location at which to save new images (not used by this node).

- Flag for saving new images (not used by this node).

- Topic for incoming segmented objects (not used by this node).

The Recognition Pipeline

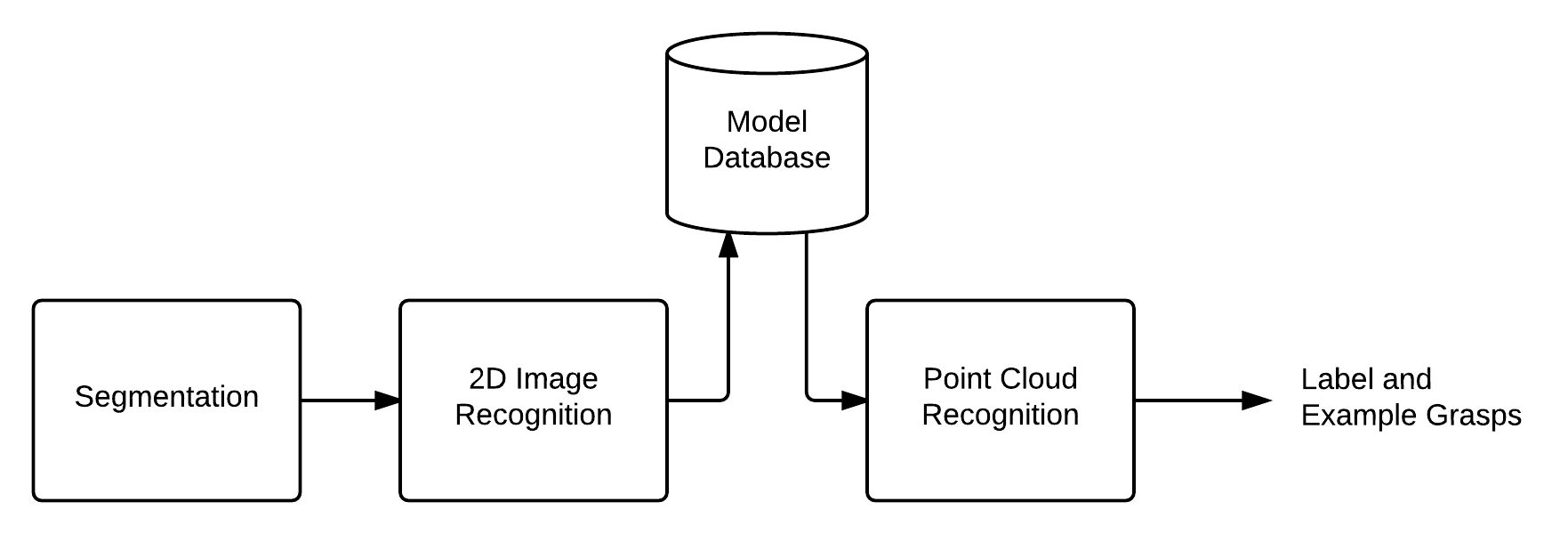

The full recognition pipeline takes in a list of unrecognized segmented objects, such as those provided by the rail_segmentation package. Each unrecognized object is first classified by the 2D image classifier to determine a set of high-probability candidate object classes. The models for these candidate classes are then retrieved from the object model database (handled by graspdb) and used by the point cloud recognizer to produce a final object label and a set of example grasps for picking up the now-recognized object.

Note that the initial 2D image recognition is an optional step. It can be disabled by setting the use_object_recognition parameter of the object_recognition_listener node to false. Disabling this step will, however, significantly increase the runtime of the algorithm, as every model in the database will then be considered by the point cloud recognizer.
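The two stages of the pipeline can be sketched as follows. This is a minimal illustration, not the package's implementation: image_class_probs stands in for the 2D image recognizer, registration_score for point cloud registration against one stored model, and top_k and use_2d (mirroring the option to disable 2D narrowing) are hypothetical names.

```python
from typing import Callable, Dict, List, Optional, Tuple


def recognize(
    segmented_obj: object,
    models: Dict[str, List[object]],
    image_class_probs: Callable[[object], Dict[str, float]],
    registration_score: Callable[[object, object], float],
    use_2d: bool = True,
    top_k: int = 2,
) -> Optional[Tuple[str, float]]:
    """Return (label, score) of the best-matching model, or None if nothing matched."""
    if use_2d:
        # Stage 1: take the top_k most probable classes from the 2D recognizer,
        # dropping any class without a stored model.
        probs = image_class_probs(segmented_obj)
        ranked = sorted(probs, key=probs.get, reverse=True)
        candidates = [c for c in ranked[:top_k] if c in models]
    else:
        # Without 2D narrowing, every class in the database must be registered.
        candidates = list(models)

    best: Optional[Tuple[str, float]] = None
    # Stage 2: register the object against each stored model of each candidate class.
    for label in candidates:
        for model in models[label]:
            score = registration_score(segmented_obj, model)
            if best is None or score > best[1]:
                best = (label, score)
    return best


# Toy usage: "models" are numbers and registration score is negative distance.
models = {"cup": [1], "bowl": [5, 6], "can": [3]}
calls = []


def score(obj, model):
    calls.append(model)  # count how many registrations were performed
    return -abs(obj - model)


def probs(obj):
    return {"cup": 0.5, "can": 0.4, "bowl": 0.1}


print(recognize(3, models, probs, score))  # ('can', 0)
print(len(calls))  # 2
calls.clear()
print(recognize(3, models, probs, score, use_2d=False))  # ('can', 0)
print(len(calls))  # 4
```

Both runs reach the same label here, but the narrowed run performs half as many registrations, which is the runtime saving the 2D stage is meant to provide.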

Setting up the 2D Image Recognizer

The 2D image recognizer can be trained in a few steps. First, an image set representative of the objects in the environment must be collected. This can be accomplished by running a segmentation node, such as the one found in rail_segmentation, and the collect_images node as follows:

roslaunch rail_recognition collect_images.launch

The images will be saved in the directory specified by the collect_images node's parameters. They must then be sorted by hand into a training dataset organized by object class; see the data directory for an example. The collected images, or a subset of them, can also be sorted into the test dataset.
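With the datasets organized by object class, one subdirectory per class, images and their labels can be gathered with a short directory walk. A minimal sketch, assuming a layout of dataset_dir/class_name/image_file and a hypothetical set of accepted extensions; check the package's data directory for the actual layout:

```python
import tempfile
from pathlib import Path
from typing import List, Tuple

# Hypothetical set of accepted image extensions.
IMAGE_EXTENSIONS = {".jpg", ".jpeg", ".png"}


def list_labeled_images(dataset_dir: str) -> List[Tuple[Path, str]]:
    """Return (image path, class name) pairs, taking the class from the parent folder."""
    samples = []
    root = Path(dataset_dir)
    for class_dir in sorted(p for p in root.iterdir() if p.is_dir()):
        for image in sorted(class_dir.iterdir()):
            # Skip stray non-image files such as notes or metadata.
            if image.is_file() and image.suffix.lower() in IMAGE_EXTENSIONS:
                samples.append((image, class_dir.name))
    return samples


# Example: build a tiny throwaway dataset and list it.
with tempfile.TemporaryDirectory() as tmp:
    for rel in ["cup/a.png", "bowl/b.jpg", "bowl/notes.txt"]:
        path = Path(tmp) / rel
        path.parent.mkdir(parents=True, exist_ok=True)
        path.touch()
    demo = [(p.name, label) for p, label in list_labeled_images(tmp)]
print(demo)  # [('b.jpg', 'bowl'), ('a.png', 'cup')]
```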

The recognizer uses a bag-of-words model with a neural network classifier. The next step is to train the dictionary for the bag-of-words model, which can be done by running the node below. Note that if the recognizer is being retrained for added objects or data, the existing dictionaries can be reused and this step skipped, provided the dataset hasn't changed dramatically.

rosrun rail_recognition save_image_recognizer_features
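In a bag-of-words model, each local image descriptor is mapped to its nearest dictionary entry (a "visual word"), and the image is described by a normalized histogram of word counts, which the classifier then consumes. A minimal sketch of that histogram step in plain Python, assuming descriptors are already extracted as equal-length float vectors and the dictionary is given; the descriptor type and dictionary size used by rail_recognition are not stated here, and building the dictionary itself (commonly done by clustering descriptors) is not shown:

```python
import math
from typing import List, Sequence

Vector = Sequence[float]


def nearest_word(descriptor: Vector, dictionary: List[Vector]) -> int:
    """Index of the dictionary word closest to the descriptor (Euclidean distance)."""
    return min(range(len(dictionary)), key=lambda i: math.dist(descriptor, dictionary[i]))


def bow_histogram(descriptors: List[Vector], dictionary: List[Vector]) -> List[float]:
    """Normalized histogram of visual-word occurrences for one image."""
    counts = [0] * len(dictionary)
    for d in descriptors:
        counts[nearest_word(d, dictionary)] += 1
    total = sum(counts)
    # An image with no descriptors gets an all-zero histogram.
    return [c / total for c in counts] if total else [0.0] * len(dictionary)


dictionary = [(0.0, 0.0), (10.0, 10.0)]
descriptors = [(1.0, 0.5), (9.0, 9.5), (0.2, 0.1), (-1.0, 0.0)]
print(bow_histogram(descriptors, dictionary))  # [0.75, 0.25]
```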

Next, the neural net itself needs to be trained:

rosrun rail_recognition train_image_recognizer

The 2D image recognizer is now trained and ready to use. To verify its accuracy, the test dataset can be used for evaluation:

rosrun rail_recognition test_image_recognizer
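Evaluating on the test dataset amounts to comparing each predicted label with the known class of the test image. A minimal sketch of overall and per-class accuracy, assuming (true label, predicted label) pairs as input; it does not reproduce the format of the node's terminal output:

```python
from collections import Counter
from typing import Dict, Iterable, Tuple


def accuracy_report(pairs: Iterable[Tuple[str, str]]) -> Tuple[float, Dict[str, float]]:
    """Return overall accuracy and per-class accuracy from (true, predicted) pairs."""
    total: Counter = Counter()
    correct: Counter = Counter()
    for true_label, predicted in pairs:
        total[true_label] += 1
        if predicted == true_label:
            correct[true_label] += 1
    n = sum(total.values())
    overall = sum(correct.values()) / n if n else 0.0
    # Per-class accuracy: fraction of each true class that was labeled correctly.
    per_class = {label: correct[label] / count for label, count in sorted(total.items())}
    return overall, per_class


results = [("cup", "cup"), ("cup", "bowl"), ("bowl", "bowl"), ("can", "can")]
overall, per_class = accuracy_report(results)
print(overall)    # 0.75
print(per_class)  # {'bowl': 1.0, 'can': 1.0, 'cup': 0.5}
```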

Setting up the Point Cloud Recognizer

See the tutorials section of rail_pick_and_place for details on how to set up an object model database, provide grasp demonstrations, train new object models, and refine existing object models.

Installation

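To install the rail_pick_and_place package from source, clone the repository listed under Source above (master branch) into the src directory of a catkin workspace and build the workspace, for example with catkin_make.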

Startup

The rail_recognition package contains a launch file that starts the object_recognition_listener node with the various database, segmentation, and recognition parameters set. Run it with:

roslaunch rail_recognition object_recognition_listener.launch

Other major nodes in the package have similar launch files with their parameters set, listed below; each launch file corresponds to the node of the same name.

roslaunch rail_recognition collect_images.launch

roslaunch rail_recognition metric_trainer.launch

roslaunch rail_recognition model_generator.launch

roslaunch rail_recognition object_recognizer.launch

roslaunch rail_recognition rail_grasp_model_retriever.launch

The set of nodes used for training the 2D image recognizer can be run as follows:

rosrun rail_recognition save_image_recognizer_features

rosrun rail_recognition train_image_recognizer

rosrun rail_recognition test_image_recognizer

Model generation can be run with an rviz plugin for visualization found in rail_pick_and_place_tools.