Author: Sara Cooper < sara.cooper@pal-robotics.com >

Maintainer: Sara Cooper < sara.cooper@pal-robotics.com >

Support: ari-support@pal-robotics.com

Source: https://github.com/pal-robotics/ari_tutorials.git

| |

Localization and path planning

Description: Learn how to run laser-based localization and autonomous navigation avoiding obstacles by means of global and local path planning.Keywords: Localization, amcl, path planning, autonomous navigation

Tutorial Level: INTERMEDIATE

Purpose

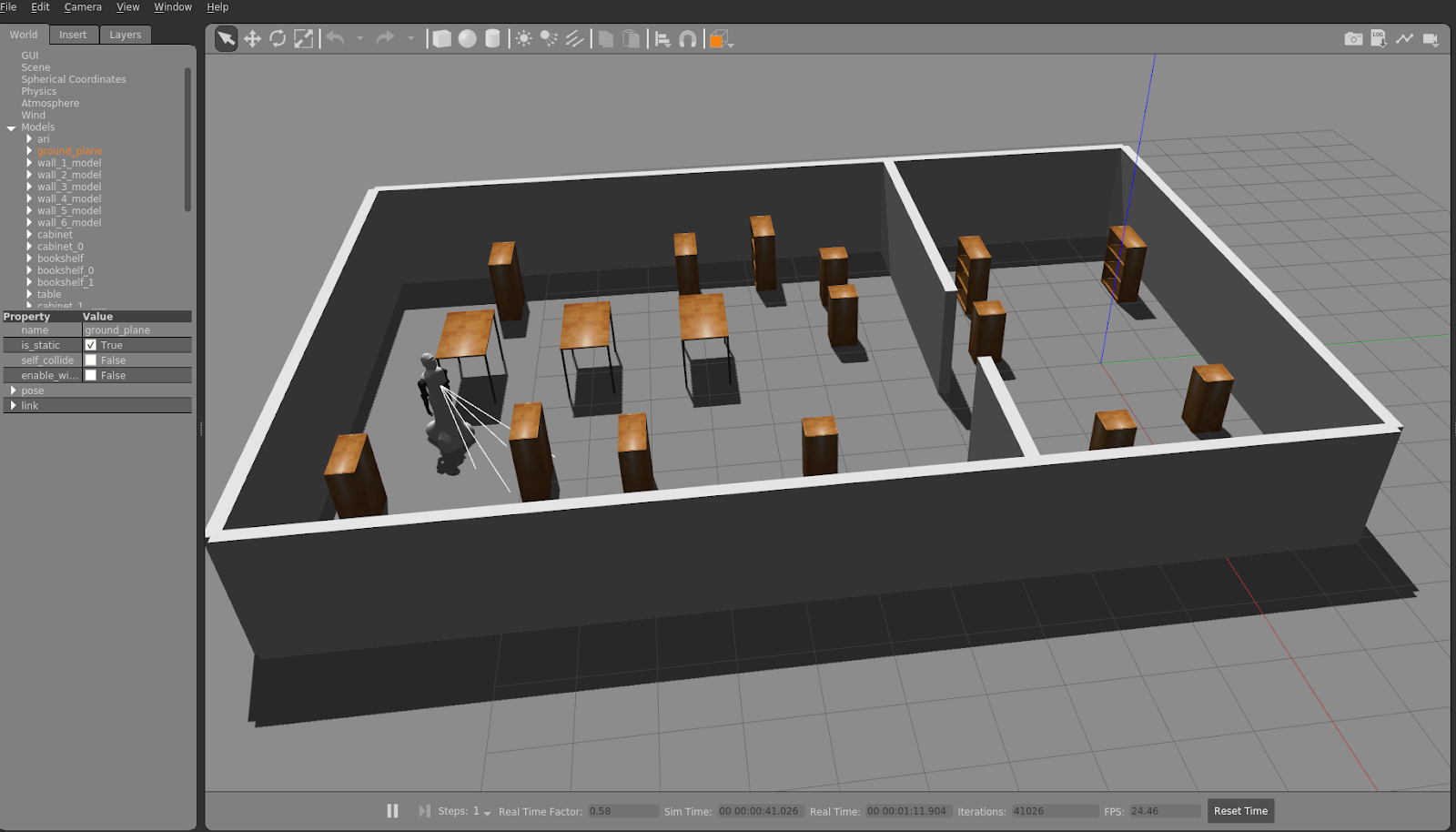

This tutorial shows how to make ARI navigate autonomously provided a map build up of laser scans and taking into account the torso RGB-D camera in order to avoid obstacles. For this point cloud data is transformed into laser scans by pointcloud_to_laserscan package, which is used by teb_local_planner, in order to implement an online optimal local trajectory plan. It then uses amcl, adaptive Monte Carlo localization, to track the pose of ARI against the given map using a particle filter.

Pre-Requisites

First make sure that the tutorials are properly installed along with the ARI simulation, as shown in the Tutorials Installation Section. Then, follow the instructions in the Create a map with slam_toolbox tutorial in order to create and save a map.

Execution

First of all open two consoles and source ARI's public simulation workspace in each one

cd ~/ari_public_ws source ./devel/setup.bash

In the first console launch the simulation of ARI with all the required nodes for running the autonomous navigation

roslaunch ari_2dnav_gazebo ari_navigation.launch public_sim:=true lost:=true

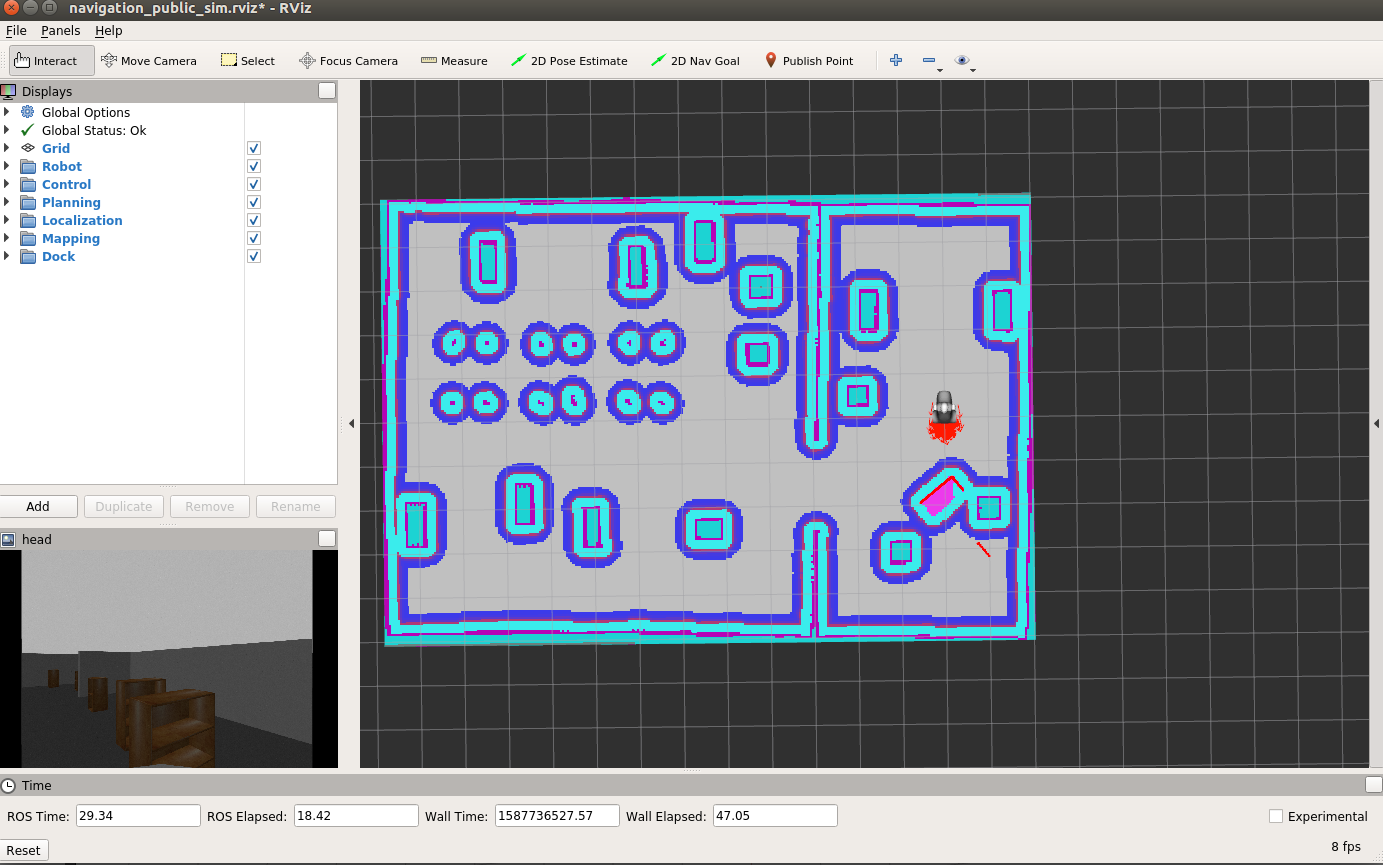

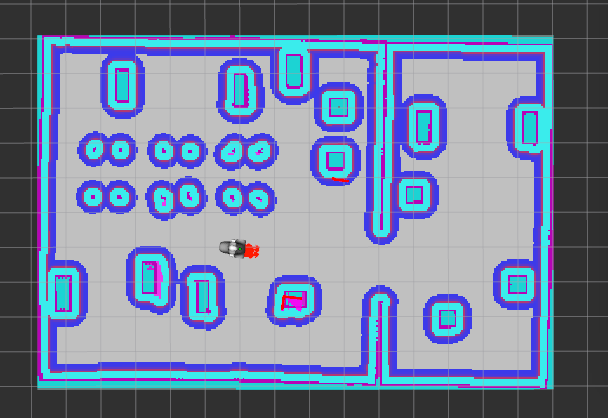

The following information is overlaid on top of the map:

Particle cloud: a cloud of small red arrows representing the amcl filter particles, which spreads around the robot. A concentration of the particles indicates a growing confidence on its position estimate.

Global costmap: regions around obstacles which are used by the global planner in order to compute paths to navigate from one point of the map to another without getting too close to the static obstacles registered during mapping. More details can be found here: http://wiki.ros.org/costmap_2d

Local costmap: similar to the global costmap, but it is smaller and moves with the robot, it is used to take into account new features that were not present in the original map. It is used by the teb_local_planner to avoid obstacles, both static and dynamic, while trying to follow the global path computed by the global planner. More details can be found here: http://wiki.ros.org/costmap_2d

RGBD scan: lines in dark blue represent the points produced by the pointcloud_to_laserscan node. This artificial scan is also used to add/remove obstacles to the global and local costmaps.

Localization

As can be seen in the pictures below the robot wakes up in a position of the world which does not correspond to the map origin, which is the pose where the localization system assumes the robot is and where particles are spread representing possible poses of the robot.

In order to localize the robot run the following instruction in the second console:

rosservice call /global_localization "{}"

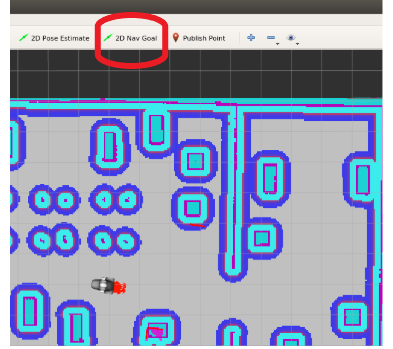

This causes the amcl probabilistic localization system to spread particles all over the map as shown in the picture below.

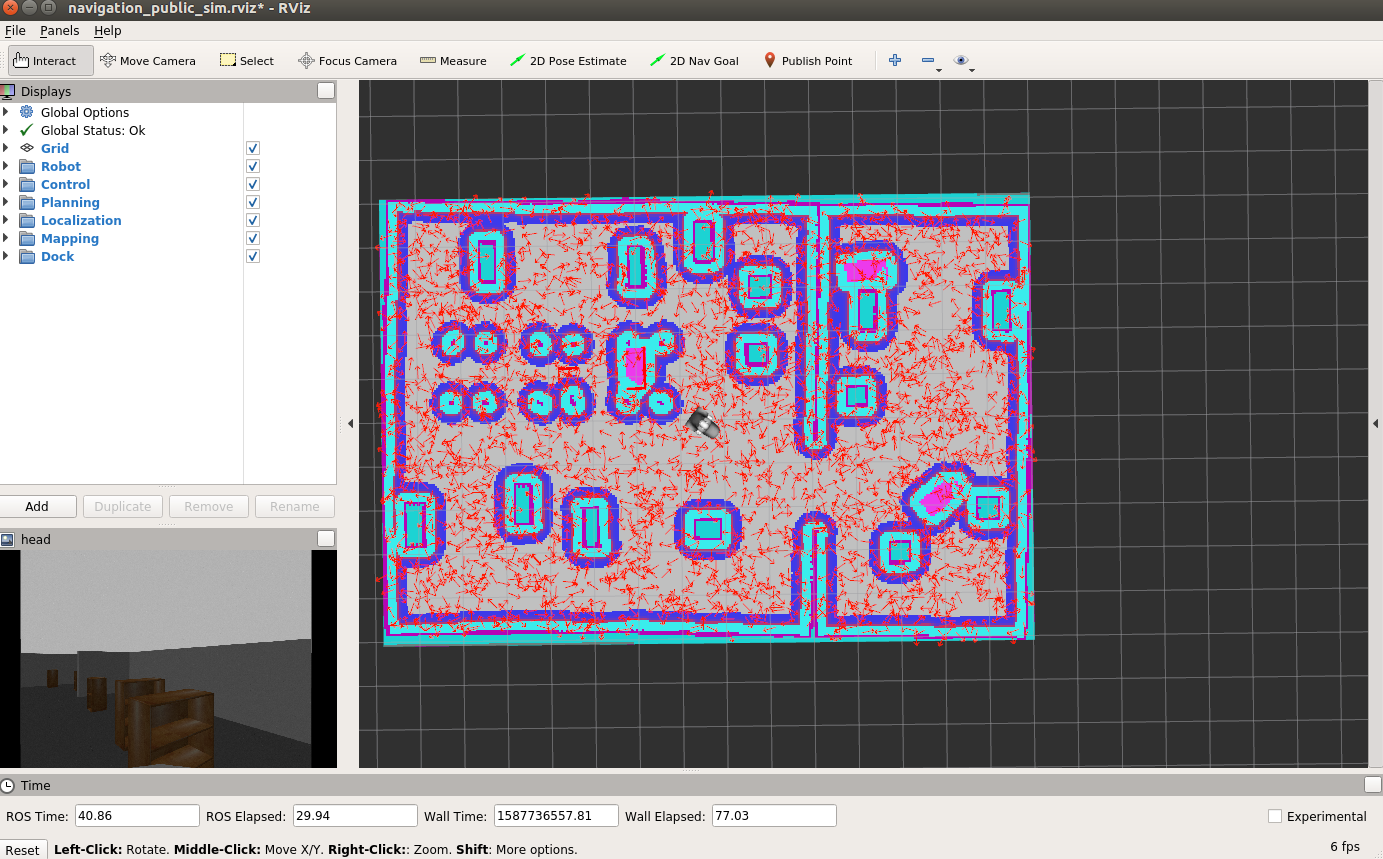

Now a good way to help the particle filter to converge to the right pose estimate is to move the robot, taking care not to hit any obstacles. You may use the left and right arrows of the key_teleop in order to do so.

rosrun key_teleop key_teleop.py

Instead of running the key_teleop node, you can also send 2D Nav Goal through Rviz, as explained in section 4.1.

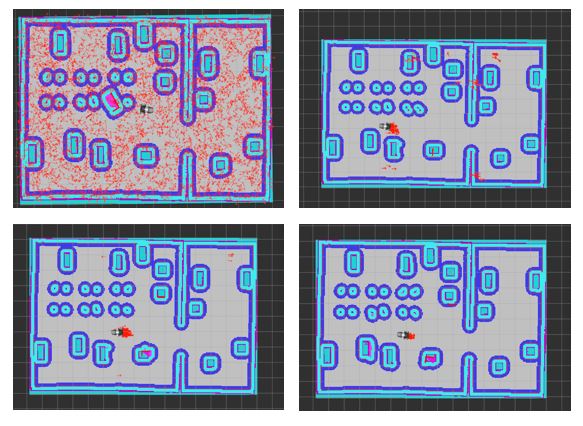

The result is shown in the following pictures, where invalid particles are removed as more laser scans can be matched with the map and the localization converges eventually to the correct pose.

Now, it is preferable to clear the costmaps as it contains erroneous data due to the bad localization of the robot:

rosservice call /move_base/clear_costmaps "{}"

The costmap now only takes into account obstacles in the static map and the system is ready to perform navigation.

Autonomous navigation with rviz

First of all make sure to kill the key_teleop node. Otherwise the robot will not move autonomously as key_teleop takes higher priority.

In order to send the robot to another location of the map the button 2D Nav Goal can be pressed in rviz.

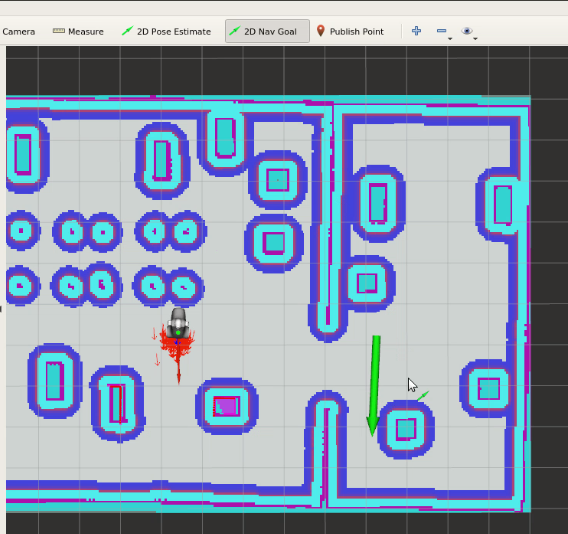

Then, by clicking and holding the left button of the mouse over the point of the map at which we want to send the robot a green arrow will appear. By dragging the mouse around the point the orientation will change. This arrow represents the target position and orientation that the robot needs to reach.

When releasing the mouse button the global planner will compute a path, which will appear as a blue line starting at the current position of the robot and ending with a red arrow representing the desired target orientation.

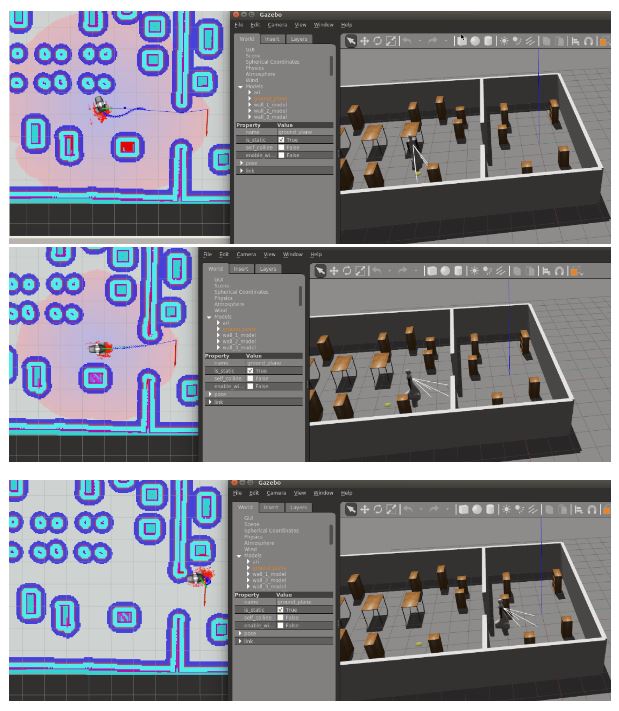

Then, the robot will start moving following the trajectory, which will automatically rearrange when getting too close to unexpected obstacles. The pictures below show different snapshots of rviz and gazebo during the navigation toward the selected goal.

Finally, the robot stops when the target goal pose have been reached up to a user-defined tolerance.