
Package Summary

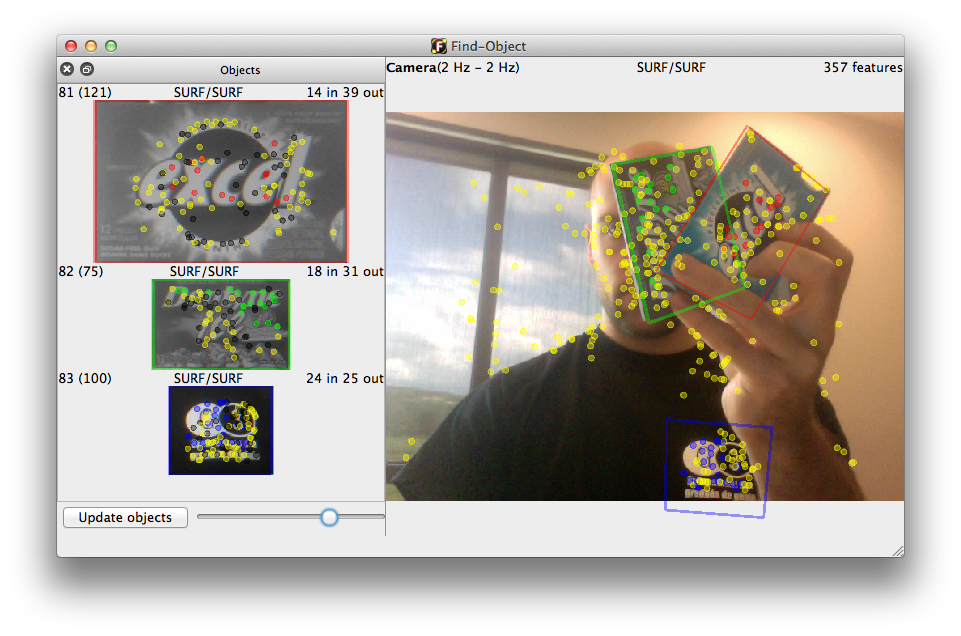

Find-Object's ROS package. Find-Object is a simple Qt interface to try OpenCV implementations of SIFT, SURF, FAST, BRIEF and other feature detectors and descriptors for object recognition.

- Maintainer status: maintained

- Maintainer: Mathieu Labbe <matlabbe AT gmail DOT com>

- Author: Mathieu Labbe <matlabbe AT gmail DOT com>

- License: BSD

- External website: http://find-object.googlecode.com

- Source: svn https://find-object.googlecode.com/svn/trunk/ros-pkg/find_object_2d

Package Summary

The find_object_2d package

- Maintainer status: maintained

- Maintainer: Mathieu Labbe <matlabbe AT gmail DOT com>

- Author: Mathieu Labbe <matlabbe AT gmail DOT com>

- License: BSD

- External website: http://find-object.googlecode.com

- Source: git https://github.com/introlab/find-object.git (branch: kinetic-devel)

Package Summary

The find_object_2d package

- Maintainer status: maintained

- Maintainer: Mathieu Labbe <matlabbe AT gmail DOT com>

- Author: Mathieu Labbe <matlabbe AT gmail DOT com>

- License: BSD

- External website: http://find-object.googlecode.com

- Source: git https://github.com/introlab/find-object.git (branch: lunar-devel)

Package Summary

The find_object_2d package

- Maintainer status: maintained

- Maintainer: Mathieu Labbe <matlabbe AT gmail DOT com>

- Author: Mathieu Labbe

- License: BSD

- Bug / feature tracker: https://github.com/introlab/find-object/issues

- Source: git https://github.com/introlab/find-object.git (branch: melodic-devel)

Package Summary

The find_object_2d package

- Maintainer status: maintained

- Maintainer: Mathieu Labbe <matlabbe AT gmail DOT com>

- Author: Mathieu Labbe

- License: BSD

- Bug / feature tracker: https://github.com/introlab/find-object/issues

- Source: git https://github.com/introlab/find-object.git (branch: noetic-devel)


Overview

Simple Qt interface to try OpenCV implementations of SIFT, SURF, FAST, BRIEF and other feature detectors and descriptors. Using a webcam, objects can be detected and published on a ROS topic with ID and position (pixels in the image). This package is a ROS integration of the Find-Object application.

Citing

@misc{labbe11findobject,
  Author = {{Labb\'{e}, M.}},
  Howpublished = {\url{http://introlab.github.io/find-object}},
  Note = {accessed YYYY-MM-DD},
  Title = {{Find-Object}},
  Year = 2011
}

Quick start

$ roscore &
# Launch your preferred usb camera driver
$ rosrun uvc_camera uvc_camera_node &
$ rosrun find_object_2d find_object_2d image:=image_raw

Visit Find-Object on GitHub for some tutorials.

Description

Subscribes to an image topic /image (sensor_msgs/Image).

Publishes detected objects (with position, rotation, scale and shear) on the topic "objects":

$ rosrun find_object_2d print_objects_detected
(...)
---
Object 6 detected at (271.389282,138.113373)
Object 7 detected at (115.537971,151.215271)
---
Object 6 detected at (271.389862,138.345398)
Object 7 detected at (115.422760,163.439835)
(...)
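For quick experiments it can be handy to pull the IDs and pixel coordinates out of the print_objects_detected text output. The sketch below assumes the output keeps exactly the "Object N detected at (x,y)" form shown in the sample; the helper name parse_detections is hypothetical and not part of the package.

```python
import re

# Hypothetical helper: matches lines such as
# "Object 6 detected at (271.389282,138.113373)" from the sample output.
_DETECTION_RE = re.compile(
    r"Object\s+(\d+)\s+detected at\s+\(\s*([-+0-9.eE]+)\s*,\s*([-+0-9.eE]+)\s*\)"
)


def parse_detections(text):
    """Return a list of (object_id, x, y) tuples found in the text."""
    return [
        (int(m.group(1)), float(m.group(2)), float(m.group(3)))
        for m in _DETECTION_RE.finditer(text)
    ]


sample = """---
Object 6 detected at (271.389282,138.113373)
Object 7 detected at (115.537971,151.215271)
---"""

for obj_id, x, y in parse_detections(sample):
    print(obj_id, x, y)
```

The same function could be fed line by line from the tool's stdout. Scraping printed text is only a convenience; the "objects" topic remains the structured interface.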

Note that the parameter Homography/homographyComputed must be true (the default) for the topic to be published.

The parameter General/mirrorView should be false to correctly visualize the returned homography values.

See the sample launch file find_object_2d.launch for how to start the node without the GUI and with pre-loaded objects (objects previously saved from the GUI with File -> "Save objects").

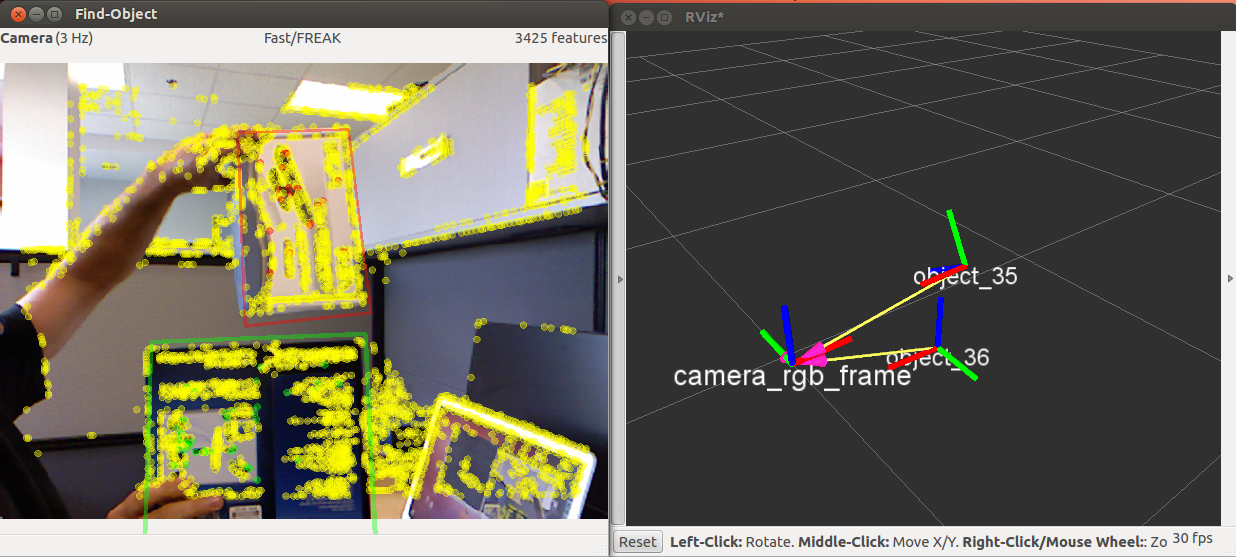

3D position of the objects

With Kinect-like sensors, the 3D position of the objects can be computed by the Find-Object ROS package.

$ roslaunch openni_launch openni.launch depth_registration:=true
$ roslaunch find_object_2d find_object_3d.launch
$ rosrun rviz rviz
# For visualization: set "fixed_frame" to "/camera_link" and add a TF display

Kinect v2 example (you will need the iai_kinect2 package and find_object_3d_kinect2.launch):

$ roslaunch kinect2_bridge kinect2_bridge.launch publish_tf:=true
$ roslaunch find_object_2d find_object_3d_kinect2.launch

See the sample launch file find_object_3d.launch for how to start in 3D mode. A Kinect-like sensor is required, and the ROS parameter subscribe_depth must also be set to true. Subscriptions:

- /rgb/image_rect_color (sensor_msgs/Image)

- /depth_registered/image_raw (sensor_msgs/Image)

- /depth_registered/camera_info (sensor_msgs/CameraInfo)
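To illustrate how a pixel plus a registered depth value and the camera intrinsics can become a 3D point, here is a sketch of standard pinhole back-projection. It is not find_object_2d's own code. It assumes the REP 118 depth conventions ("16UC1" in millimeters, "32FC1" in meters) and the row-major 3x3 K matrix from sensor_msgs/CameraInfo; the function names are hypothetical.

```python
import math


def depth_to_meters(raw, encoding):
    """Convert one raw depth reading to meters, or None if it is invalid.

    Assumes REP 118 encodings: "16UC1" holds millimeters, "32FC1" holds meters.
    Zero and NaN are treated as "no measurement".
    """
    if encoding == "16UC1":
        return raw / 1000.0 if raw > 0 else None
    if encoding == "32FC1":
        return raw if (not math.isnan(raw) and raw > 0) else None
    raise ValueError(f"unsupported depth encoding: {encoding}")


def back_project(u, v, depth_m, K):
    """Pinhole back-projection of pixel (u, v) at depth_m meters.

    K is the 9-element row-major intrinsic matrix from CameraInfo:
    [fx, 0, cx, 0, fy, cy, 0, 0, 1].
    Returns (x, y, z) in the camera optical frame (x right, y down, z forward).
    """
    fx, cx = K[0], K[2]
    fy, cy = K[4], K[5]
    x = (u - cx) * depth_m / fx
    y = (v - cy) * depth_m / fy
    return (x, y, depth_m)


# Example intrinsics (illustrative values, not read from a real sensor).
K = [525.0, 0.0, 319.5, 0.0, 525.0, 239.5, 0.0, 0.0, 1.0]
print(back_project(844.5, 239.5, depth_to_meters(2000, "16UC1"), K))
```

A pixel 525 px right of the principal point at 2 m depth, with fx = 525, lands 2 m to the right of the optical axis. Turning this camera-frame point into a TF transform (and adding the rotation) is a separate step not shown here.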

The positions of the objects are published over TF (center of the object, with rotation). You can set the ROS parameter object_prefix to change the prefix used on TF (the default is "object", which gives "/object_1" and "/object_2" for objects 1 and 2 respectively).

If you are on a robot, you may want to have the pose of the objects in the /map frame. Take a look at this example.

- Example on a robot:

Nodes

find_object_2d

Subscribed Topics

- image (sensor_msgs/Image): RGB/Mono image. Used only if subscribe_depth is false.

- RGB/Mono image. Required if subscribe_depth is true.

- RGB camera metadata. Required if subscribe_depth is true.

- Registered depth image. Required if subscribe_depth is true.

Published Topics

- objects (std_msgs/Float32MultiArray): Objects detected, formatted as [objectId1, objectWidth, objectHeight, h11, h12, h13, h21, h22, h23, h31, h32, h33, objectId2...] where h## is a 3x3 homography matrix (h31 = dx and h32 = dy, see QTransform). Example handling the message (source here)

- Objects detected with stamp (useful to sync with corresponding TF).
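The flat layout of the "objects" array can be decoded in plain Python, as sketched below. It assumes 12 floats per object (ID, width, height, then h11..h33) as listed above. It also assumes the QTransform convention, where a point is mapped as x' = (h11*x + h21*y + h31)/w and y' = (h12*x + h22*y + h32)/w with w = h13*x + h23*y + h33, and that the homography maps object-image coordinates into the scene image. All names here are hypothetical.

```python
from typing import List, NamedTuple, Sequence, Tuple

FIELDS_PER_OBJECT = 12  # id, width, height + 9 homography values


class DetectedObject(NamedTuple):
    object_id: int
    width: float
    height: float
    h: Tuple[float, ...]  # h11, h12, h13, h21, h22, h23, h31, h32, h33


def decode_objects(data: Sequence[float]) -> List[DetectedObject]:
    """Split the flat Float32MultiArray data into per-object records."""
    if len(data) % FIELDS_PER_OBJECT != 0:
        raise ValueError("objects array length is not a multiple of 12")
    objects = []
    for i in range(0, len(data), FIELDS_PER_OBJECT):
        chunk = data[i:i + FIELDS_PER_OBJECT]
        objects.append(
            DetectedObject(int(chunk[0]), chunk[1], chunk[2], tuple(chunk[3:12]))
        )
    return objects


def map_point(h, x, y):
    """Map (x, y) through a QTransform-style (row-vector) 3x3 matrix."""
    h11, h12, h13, h21, h22, h23, h31, h32, h33 = h
    w = h13 * x + h23 * y + h33
    return ((h11 * x + h21 * y + h31) / w, (h12 * x + h22 * y + h32) / w)


def object_center(obj: DetectedObject):
    """Center of the object image, mapped into scene-image pixels."""
    return map_point(obj.h, obj.width / 2.0, obj.height / 2.0)


# Object 6, 100x50 px, placed by a pure translation of (200, 80).
data = [6, 100, 50, 1, 0, 0, 0, 1, 0, 200, 80, 1]
for obj in decode_objects(data):
    print(obj.object_id, object_center(obj))
```

In a rospy callback, the received message's data field could be passed straight to decode_objects. Mapping the four corners (0,0), (w,0), (w,h), (0,h) the same way gives the object's outline in the image.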

Parameters

- ~subscribe_depth (bool, default: "true"): Subscribe to the depth image. A TF transform will be published for each detected object (if the depth values are valid inside the object's region of the color image).

- Launch the GUI

- Path to folder containing objects to detect.

- Path to a session file to load. objects_path is ignored if set.

- Path to settings file (*.ini).

- Object's prefix for tf.
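The ~subscribe_depth description says TF is only published when depth values inside the object's region are valid. The package's exact rule is not documented here, so the sketch below shows just one plausible check: take the median of valid readings (nonzero, non-NaN, in meters) in a rectangular region and give up when too few are valid. The function name and the min_valid_ratio threshold are hypothetical.

```python
import math
from statistics import median


def region_depth(depth, x0, y0, x1, y1, min_valid_ratio=0.5):
    """Median valid depth (meters) inside columns [x0, x1) and rows [y0, y1).

    depth is a row-major 2D list of floats; 0 and NaN count as invalid.
    Returns None when fewer than min_valid_ratio of the pixels are valid
    (hypothetical rule: no TF would be published for that object).
    """
    values = []
    total = 0
    for row in depth[y0:y1]:
        for d in row[x0:x1]:
            total += 1
            if not math.isnan(d) and d > 0:
                values.append(d)
    if total == 0 or len(values) < min_valid_ratio * total:
        return None
    return median(values)


depth = [
    [1.0, 1.2, 0.0],
    [float("nan"), 1.1, 1.3],
]
print(region_depth(depth, 0, 0, 3, 2))
```

A median is used rather than a mean so that a few stray readings at the object's edges (background or holes) do not drag the estimate.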